Compare commits

76 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | fa22647acd | | |
| | dd5d82fe7a | | |
| | 98b179aeb5 | | |
| | e1f1c005a0 | | |
| | 6e226c5a4f | | |
| | 7440fa5a37 | | |
| | 4fe204605a | | |
| | 4446b42b82 | | |
| | 4b6cd17d0a | | |
| | 1a6e74271c | | |
| | 6ba3719031 | | |
| | dd95e3df7e | | |
| | 69fd7853c8 | | |
| | c01c478ffe | | |
| | f8be1da83a | | |
| | 3a7625486e | | |
| | fdc3b6c573 | | |
| | 76939ed51f | | |
| | b9cf761f4a | | |
| | 4c515ba541 | | |
| | d7c3595bf1 | | |
| | 1fbd6a0824 | | |
| | ccb59c7f02 | | |
| | 04bef3e82a | | |
| | 17105b98ed | | |
| | 4bff1515a9 | | |
| | 0a75893346 | | |
| | 2ed92467f9 | | |
| | 634ac122d9 | | |
| | 44640b7e53 | | |
| | 47e7b22a7e | | |
| | 918928d4bb | | |
| | 69fc172779 | | |
| | d84dabbe4d | | |
| | 23114210c4 | | |
| | ea80e5a223 | | |
| | 6087f31d41 | | |
| | 30ee292a32 | | |
| | 705a9319f5 | | |
| | c789d9d87c | | |
| | a7681b5505 | | |
| | 9e74d8af0b | | |
| | b52061f849 | | |
| | 01b875c283 | | |
| | 4cc3b78321 | | |
| | 6205db87e6 | | |
| | 518633b153 | | |
| | 988ee7b7e7 | | |
| | cdadde60ce | | |
| | 4bb01d86d9 | | |
| | 4cac43520f | | |
| | d6dddd16f1 | | |
| | c0da054635 | | |
| | 2b4d94ca55 | | |
| | e8e564738a | | |
| | d48fbd8b62 | | |
| | c1f80f209e | | |
| | ed6b32c827 | | |
| | fc436fd352 | | |
| | ee6fdb1ca1 | | |
| | 988db30355 | | |
| | ea98ee5e99 | | |
| | b8d1d43822 | | |
| | 0d017c6d14 | | |
| | 2825e9a003 | | |
| | 6e9ddfcbf2 | | |
| | 378689be39 | | |
| | 31858fad12 | | |
| | 60351d629d | | |
| | 715a97159a | | |
| | b48ce28b35 | | |
| | 7ab0448cd3 | | |
| | 5f6642fa63 | | |
| | 5a0d1ed408 | | |
| | 131e8fb6be | | |
| | 1c7fb8ef93 | | |

2

.github/ISSUE_TEMPLATE/bug_report.md

vendored


@@ -6,7 +6,7 @@ labels: bug

|

||||

assignees: ''

|

||||

|

||||

---

|

||||

|

||||

<!--Please be aware that GNOME Code of Conduct applies to Alpaca, https://conduct.gnome.org/-->

|

||||

**Describe the bug**

|

||||

A clear and concise description of what the bug is.

|

||||

|

||||

|

||||

2

.github/ISSUE_TEMPLATE/feature_request.md

vendored


@@ -6,7 +6,7 @@ labels: enhancement

|

||||

assignees: ''

|

||||

|

||||

---

|

||||

|

||||

<!--Please be aware that GNOME Code of Conduct applies to Alpaca, https://conduct.gnome.org/-->

|

||||

**Is your feature request related to a problem? Please describe.**

|

||||

A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

|

||||

|

||||

|

||||

18

.github/workflows/flatpak-builder.yml

vendored

Normal file


@@ -0,0 +1,18 @@

# .github/workflows/flatpak-build.yml
on:
  workflow_dispatch:
name: Flatpak Build
jobs:
  flatpak:
    name: "Flatpak"
    runs-on: ubuntu-latest
    container:
      image: bilelmoussaoui/flatpak-github-actions:gnome-46
      options: --privileged
    steps:
    - uses: actions/checkout@v4
    - uses: flatpak/flatpak-github-actions/flatpak-builder@v6
      with:
        bundle: Alpaca.flatpak
        manifest-path: com.jeffser.Alpaca.json
        cache-key: flatpak-builder-${{ github.sha }}

24

.github/workflows/pylint.yml

vendored

Normal file


@@ -0,0 +1,24 @@

name: Pylint

on:
  workflow_dispatch:

jobs:
  build:
    runs-on: ubuntu-latest
    strategy:
      matrix:
        python-version: ["3.11"]
    steps:
    - uses: actions/checkout@v4
    - name: Set up Python ${{ matrix.python-version }}
      uses: actions/setup-python@v3
      with:
        python-version: ${{ matrix.python-version }}
    - name: Install dependencies
      run: |
        python -m pip install --upgrade pip
        pip install pylint
    - name: Analysing the code with pylint
      run: |
        pylint --rcfile=.pylintrc $(git ls-files '*.py' | grep -v 'src/available_models_descriptions.py')

14

.pylintrc

Normal file


@@ -0,0 +1,14 @@

[MASTER]

[MESSAGES CONTROL]
disable=undefined-variable, line-too-long, missing-function-docstring, consider-using-f-string, import-error

[FORMAT]
max-line-length=200

# Reasons for removing some checks:
# undefined-variable: _() is used by the translator on build time but it is not defined on the scripts
# line-too-long: I... I'm too lazy to make the lines shorter, maybe later
# missing-function-docstring I'm not adding a docstring to all the functions, most are self explanatory
# consider-using-f-string I can't use f-string because of the translator
# import-error The linter doesn't have access to all the libraries that the project itself does

34

Alpaca.doap

Normal file


@@ -0,0 +1,34 @@

<Project xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
         xmlns:rdfs="http://www.w3.org/2000/01/rdf-schema#"
         xmlns:foaf="http://xmlns.com/foaf/0.1/"
         xmlns:gnome="http://api.gnome.org/doap-extensions#"
         xmlns="http://usefulinc.com/ns/doap#">

  <name xml:lang="en">Alpaca</name>
  <shortdesc xml:lang="en">An Ollama client made with GTK4 and Adwaita</shortdesc>
  <homepage rdf:resource="https://jeffser.com/alpaca" />
  <bug-database rdf:resource="https://github.com/Jeffser/Alpaca/issues"/>
  <programming-language>Python</programming-language>

  <platform>GTK 4</platform>
  <platform>Libadwaita</platform>

  <maintainer>
    <foaf:Person>
      <foaf:name>Jeffry Samuel</foaf:name>
      <foaf:mbox rdf:resource="mailto:jeffrysamuer@gmail.com"/>
      <foaf:account>
        <foaf:OnlineAccount>
          <foaf:accountServiceHomepage rdf:resource="https://github.com"/>
          <foaf:accountName>jeffser</foaf:accountName>
        </foaf:OnlineAccount>
      </foaf:account>
      <foaf:account>
        <foaf:OnlineAccount>
          <foaf:accountServiceHomepage rdf:resource="https://gitlab.gnome.org"/>
          <foaf:accountName>jeffser</foaf:accountName>
        </foaf:OnlineAccount>
      </foaf:account>
    </foaf:Person>
  </maintainer>
</Project>

54

README.md


@@ -11,7 +11,11 @@ Alpaca is an [Ollama](https://github.com/ollama/ollama) client where you can man

|

||||

> [!WARNING]

|

||||

> This project is not affiliated at all with Ollama, I'm not responsible for any damages to your device or software caused by running code given by any AI models.

|

||||

|

||||

> [!IMPORTANT]

|

||||

> Please be aware that [GNOME Code of Conduct](https://conduct.gnome.org) applies to Alpaca before interacting with this repository.

|

||||

|

||||

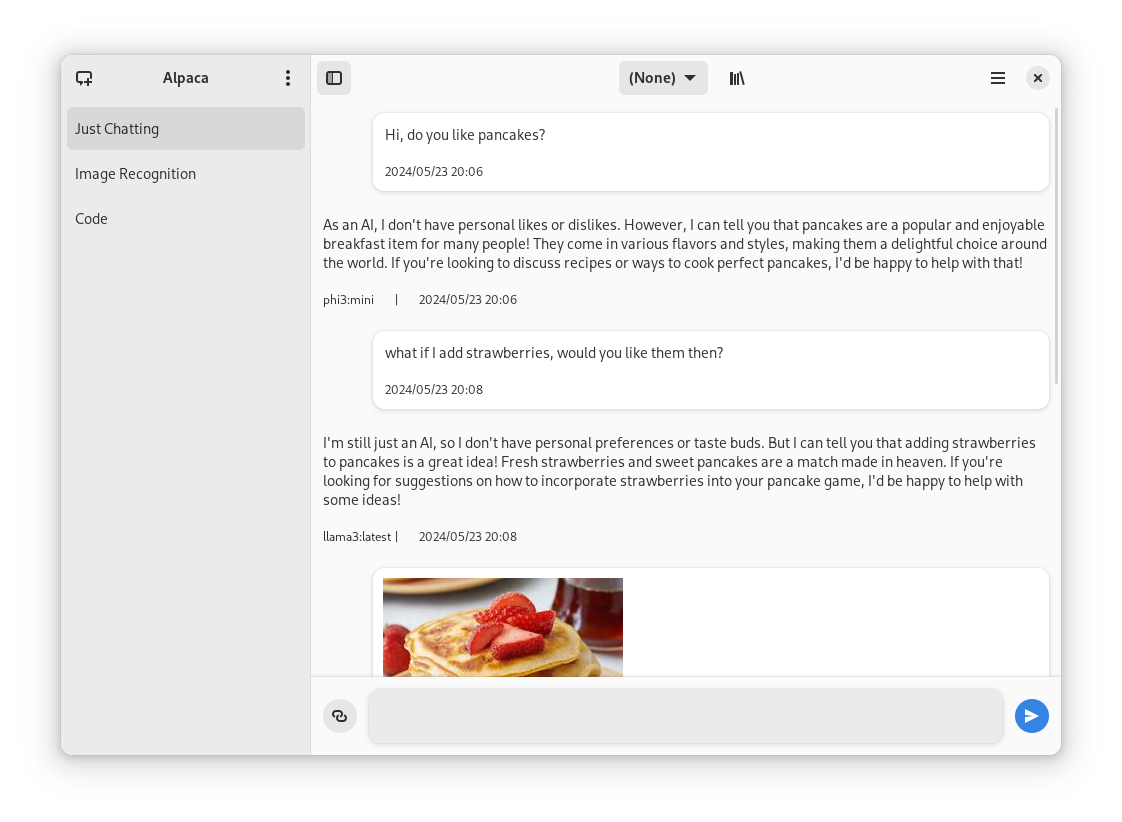

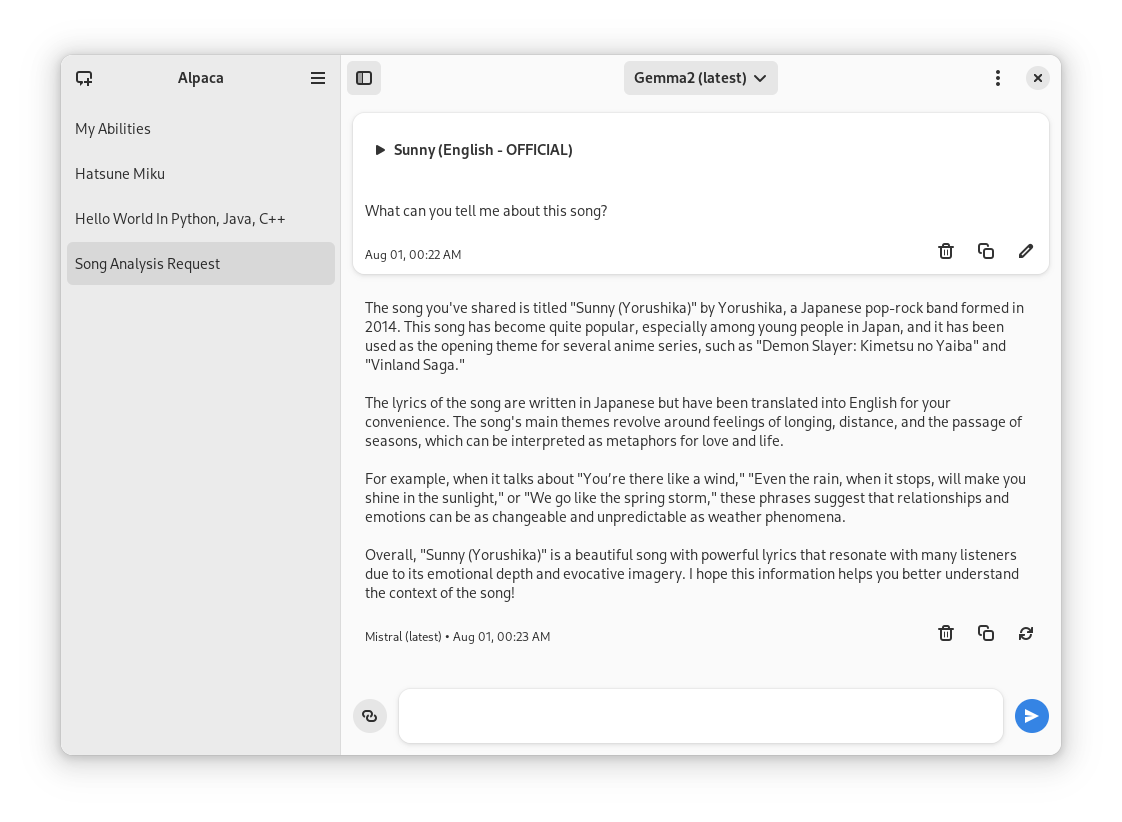

## Features!

|

||||

|

||||

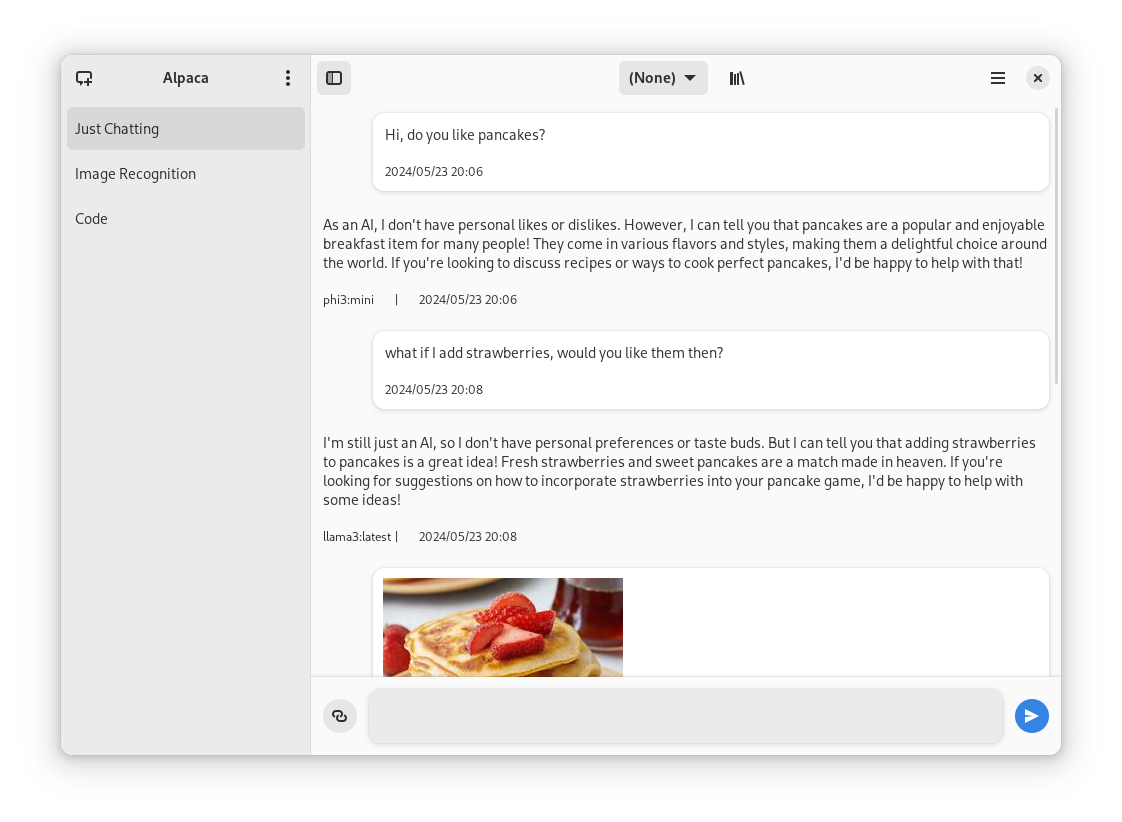

- Talk to multiple models in the same conversation

|

||||

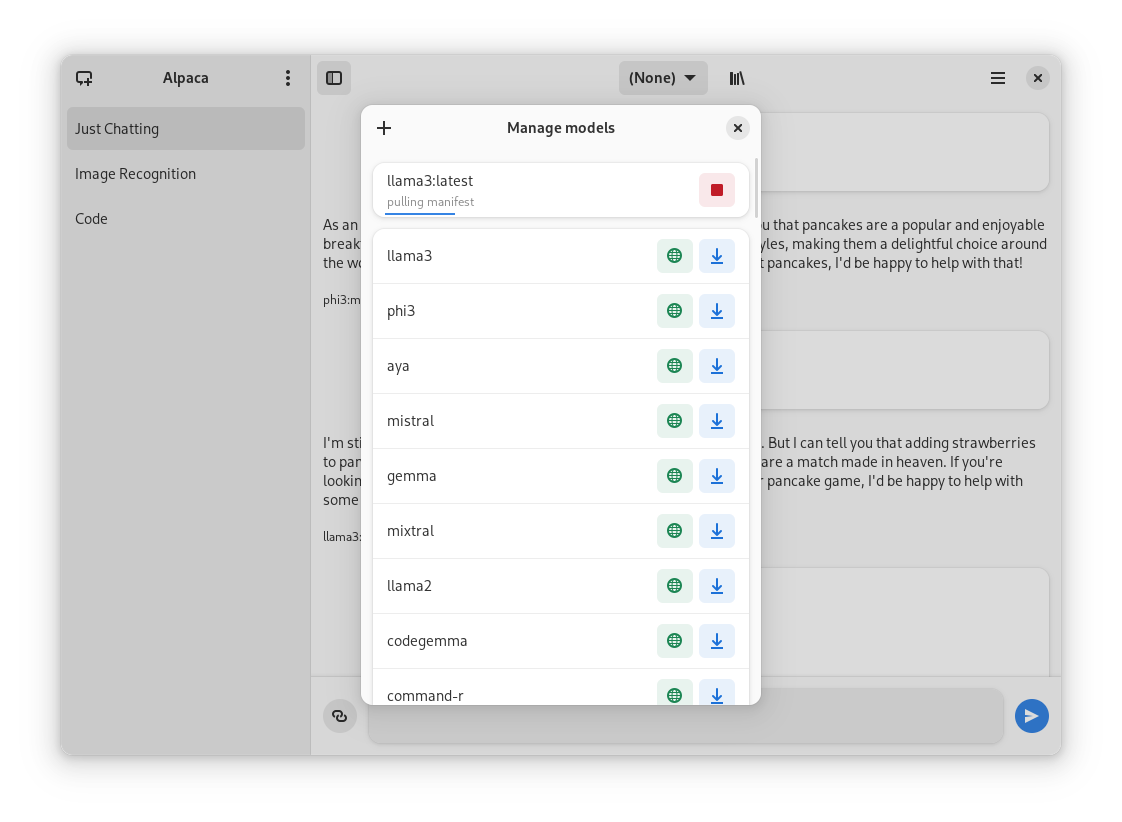

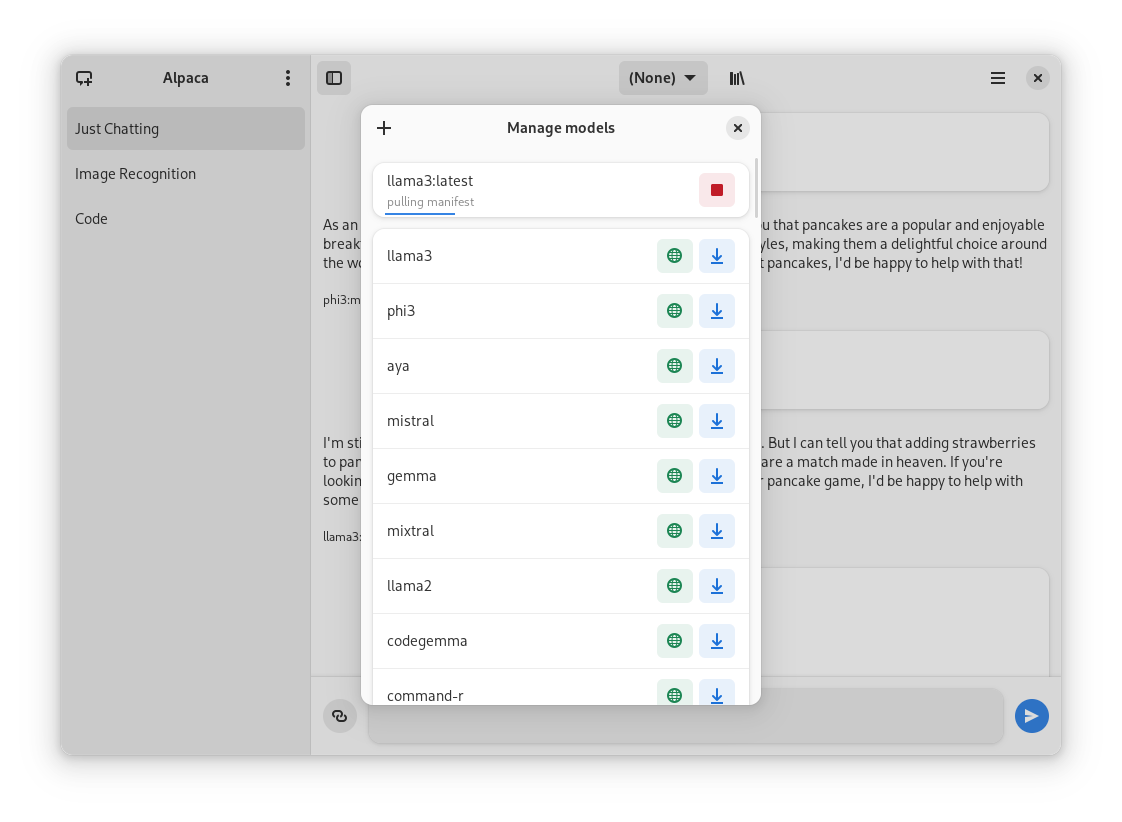

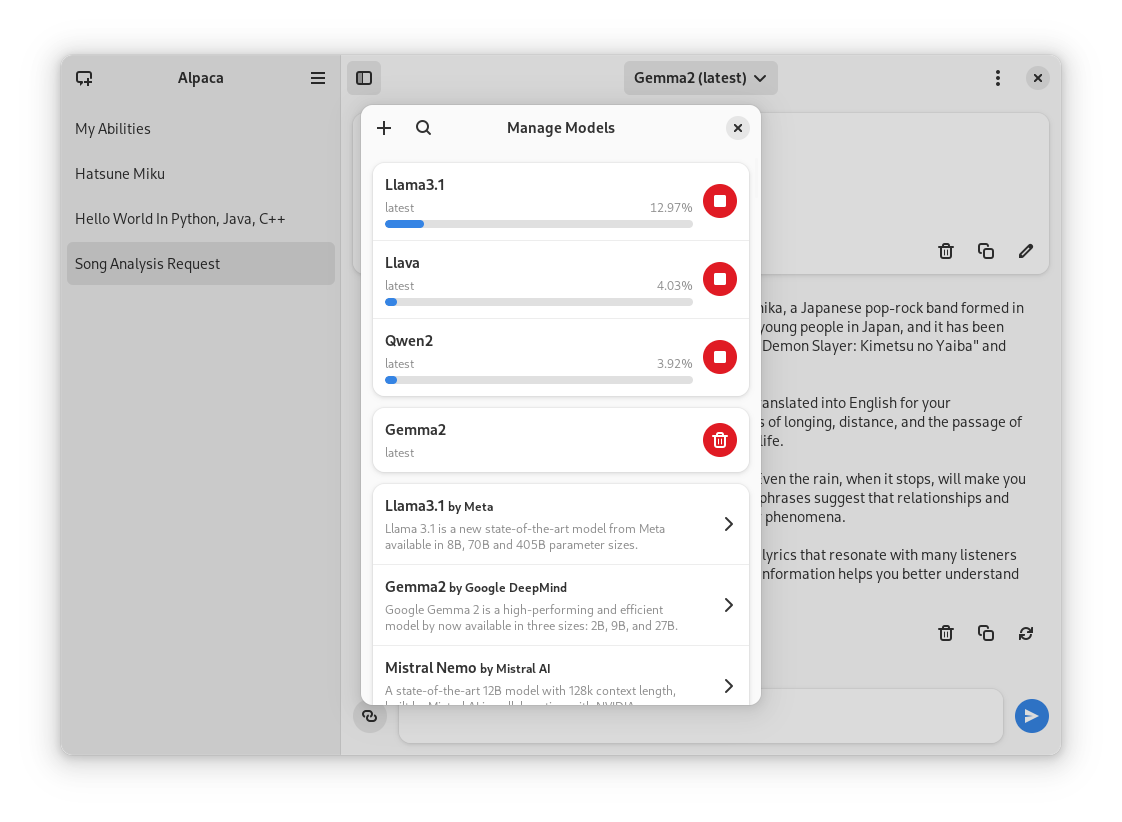

- Pull and delete models from the app

|

||||

- Image recognition

|

||||

@@ -21,47 +25,37 @@ Alpaca is an [Ollama](https://github.com/ollama/ollama) client where you can man

|

||||

- Notifications

|

||||

- Import / Export chats

|

||||

- Delete / Edit messages

|

||||

- Regenerate messages

|

||||

- YouTube recognition (Ask questions about a YouTube video using the transcript)

|

||||

- Website recognition (Ask questions about a certain website by parsing the url)

|

||||

|

||||

## Screenies

|

||||

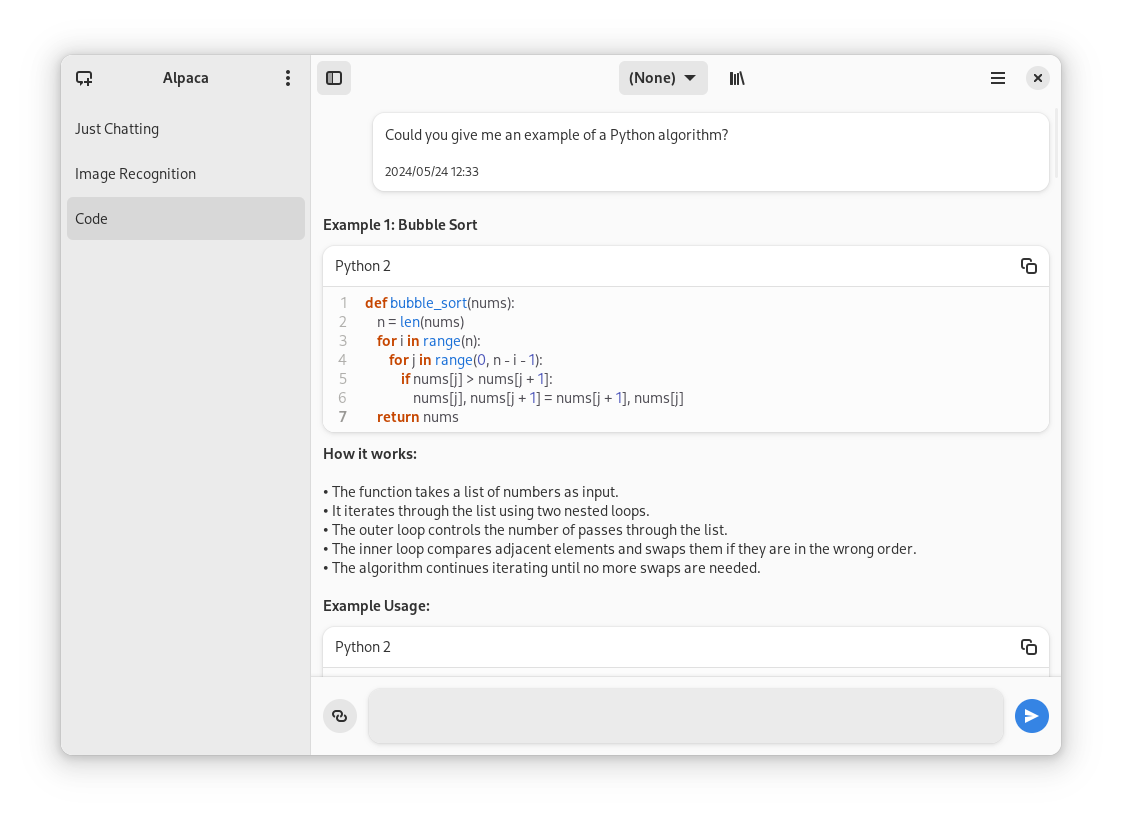

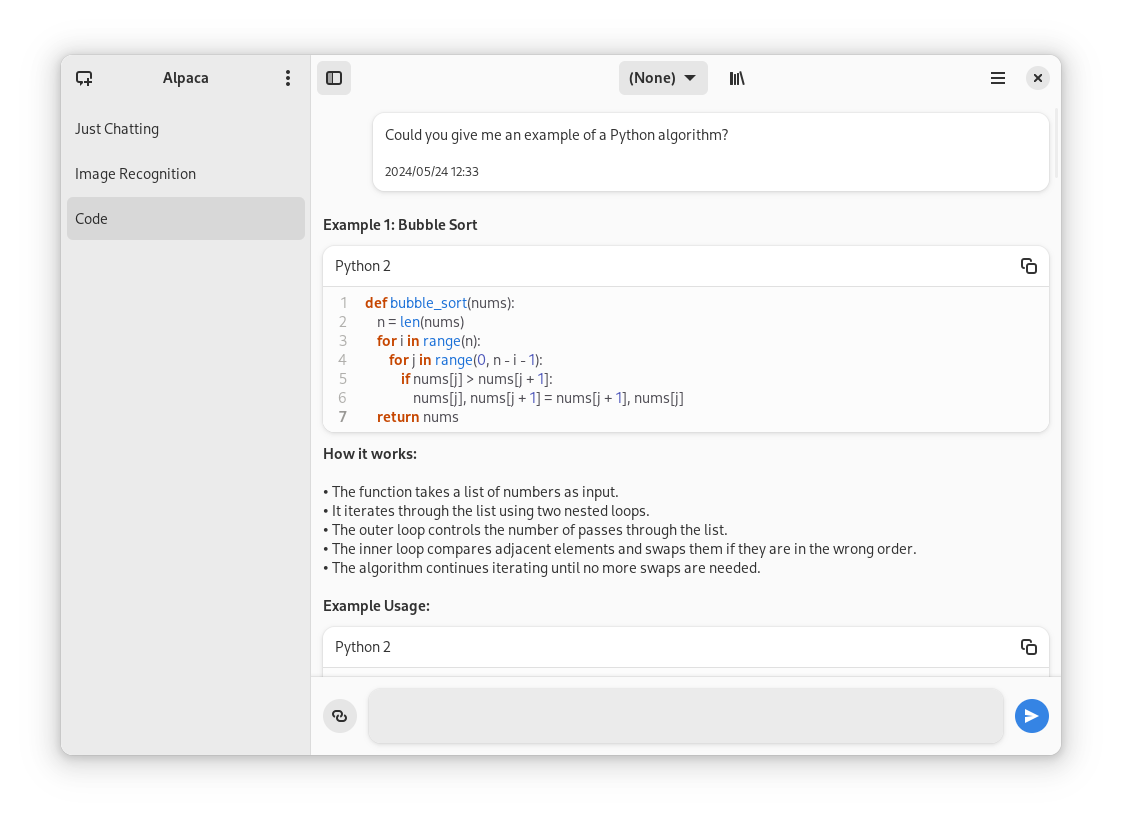

Chatting with a model | Image recognition | Code highlighting

|

||||

:--------------------:|:-----------------:|:----------------------:

|

||||

|  |

|

||||

|

||||

## Preview

|

||||

1. Clone repo using Gnome Builder

|

||||

2. Press the `run` button

|

||||

Normal conversation | Image recognition | Code highlighting | YouTube transcription | Model management

|

||||

:------------------:|:-----------------:|:-----------------:|:---------------------:|:----------------:

|

||||

|  |  |  |

|

||||

|

||||

## Instalation

|

||||

1. Go to the `releases` page

|

||||

2. Download the latest flatpak package

|

||||

3. Open it

|

||||

## Translators

|

||||

|

||||

## Ollama session tips

|

||||

|

||||

### Change the port of the integrated Ollama instance

|

||||

Go to `~/.var/app/com.jeffser.Alpaca/config/server.json` and change the `"local_port"` value, by default it is `11435`.

|

||||

|

||||

### Backup all the chats

|

||||

The chat data is located in `~/.var/app/com.jeffser.Alpaca/data/chats` you can copy that directory wherever you want to.
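The backup tip above could be scripted. The following is a minimal sketch, assuming the chats live in the directory named in the README; `backup_chats` and the destination path are hypothetical names introduced only for illustration.

```python
import shutil
from pathlib import Path

# Location quoted from the README; it applies to the Flatpak install.
CHATS_DIR = Path.home() / ".var/app/com.jeffser.Alpaca/data/chats"

def backup_chats(source: Path, destination: Path) -> Path:
    """Hypothetical helper: copy the whole chats directory to `destination`."""
    # dirs_exist_ok=True (Python 3.8+) lets an existing backup be refreshed in place.
    shutil.copytree(source, destination, dirs_exist_ok=True)
    return destination

# Example call (the destination is an arbitrary choice):
# backup_chats(CHATS_DIR, Path.home() / "alpaca-chats-backup")
```

Copying the directory as a whole keeps any files stored next to the chat data together with it.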

|

||||

|

||||

### Force showing the welcome dialog

|

||||

To do that you just need to delete the file `~/.var/app/com.jeffser.Alpaca/config/server.json`, this won't affect your saved chats or models.

|

||||

|

||||

### Add/Change environment variables for Ollama

|

||||

You can change anything except `$HOME` and `$OLLAMA_HOST`, to do this go to `~/.var/app/com.jeffser.Alpaca/config/server.json` and change `ollama_overrides` accordingly, some overrides are available to change on the GUI.
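Editing `ollama_overrides` by hand can also be done from a script. This is a sketch only: it assumes `server.json` is a JSON object and that `ollama_overrides` maps environment variable names to string values, which the README does not spell out. `set_ollama_override` is a hypothetical helper, not part of Alpaca.

```python
import json
from pathlib import Path

# The README states that these two variables cannot be overridden.
PROTECTED = {"HOME", "OLLAMA_HOST"}

def set_ollama_override(config_path: Path, name: str, value: str) -> dict:
    """Hypothetical helper: add or replace one entry in "ollama_overrides"."""
    if name in PROTECTED:
        raise ValueError(f"{name} cannot be overridden")
    config = json.loads(config_path.read_text(encoding="utf-8"))
    # Assumption: overrides are stored as a name -> value mapping.
    overrides = config.setdefault("ollama_overrides", {})
    overrides[name] = value
    config_path.write_text(json.dumps(config, indent=4), encoding="utf-8")
    return overrides

# Example call (path from the README):
# set_ollama_override(
#     Path.home() / ".var/app/com.jeffser.Alpaca/config/server.json",
#     "OLLAMA_DEBUG", "1")
```

Alpaca would presumably need a restart before the integrated instance picks up the new value.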

|

||||

Language | Contributors

|

||||

:----------------------|:-----------

|

||||

🇷🇺 Russian | [Alex K](https://github.com/alexkdeveloper)

|

||||

🇪🇸 Spanish | [Jeffry Samuel](https://github.com/jeffser)

|

||||

🇫🇷 French | [Louis Chauvet-Villaret](https://github.com/loulou64490) , [Théo FORTIN](https://github.com/topiga)

|

||||

🇧🇷 Brazilian Portuguese | [Daimar Stein](https://github.com/not-a-dev-stein)

|

||||

🇳🇴 Norwegian | [CounterFlow64](https://github.com/CounterFlow64)

|

||||

🇮🇳 Bengali | [Aritra Saha](https://github.com/olumolu)

|

||||

🇨🇳 Simplified Chinese | [Yuehao Sui](https://github.com/8ar10der) , [Aleksana](https://github.com/Aleksanaa)

|

||||

|

||||

---

|

||||

|

||||

## Thanks

|

||||

- [not-a-dev-stein](https://github.com/not-a-dev-stein) for their help with requesting a new icon, bug reports and the translation to Brazilian Portuguese

|

||||

|

||||

- [not-a-dev-stein](https://github.com/not-a-dev-stein) for their help with requesting a new icon and bug reports

|

||||

- [TylerLaBree](https://github.com/TylerLaBree) for their requests and ideas

|

||||

- [Alexkdeveloper](https://github.com/alexkdeveloper) for their help translating the app to Russian

|

||||

- [Imbev](https://github.com/imbev) for their reports and suggestions

|

||||

- [Nokse](https://github.com/Nokse22) for their contributions to the UI and table rendering

|

||||

- [Louis Chauvet-Villaret](https://github.com/loulou64490) for their suggestions and help translating the app to French

|

||||

- [CounterFlow64](https://github.com/CounterFlow64) for their help translating the app to Norwegian

|

||||

|

||||

## About forks

|

||||

If you want to fork this... I mean, I think it would be better if you start from scratch, my code isn't well documented at all, but if you really want to, please give me some credit, that's all I ask for... And maybe a donation (joke)

|

||||

- [Louis Chauvet-Villaret](https://github.com/loulou64490) for their suggestions

|

||||

- [Aleksana](https://github.com/Aleksanaa) for her help with better handling of directories

|

||||

- Sponsors for giving me enough money to be able to take a ride to my campus every time I need to <3

|

||||

- Everyone that has shared kind words of encouragement!

|

||||

|

||||

@@ -122,16 +122,16 @@

|

||||

"sources": [

|

||||

{

|

||||

"type": "file",

|

||||

"url": "https://github.com/ollama/ollama/releases/download/v0.3.0/ollama-linux-amd64",

|

||||

"sha256": "b8817c34882c7ac138565836ac1995a2c61261a79315a13a0aebbfe5435da855",

|

||||

"url": "https://github.com/ollama/ollama/releases/download/v0.3.3/ollama-linux-amd64",

|

||||

"sha256": "2b2a4ee4c86fa5b09503e95616bd1b3ee95238b1b3bf12488b9c27c66b84061a",

|

||||

"only-arches": [

|

||||

"x86_64"

|

||||

]

|

||||

},

|

||||

{

|

||||

"type": "file",

|

||||

"url": "https://github.com/ollama/ollama/releases/download/v0.3.0/ollama-linux-arm64",

|

||||

"sha256": "64be908749212052146f1008dd3867359c776ac1766e8d86291886f53d294d4d",

|

||||

"url": "https://github.com/ollama/ollama/releases/download/v0.3.3/ollama-linux-arm64",

|

||||

"sha256": "28fddbea0c161bc539fd08a3dc78d51413cfe8da97386cb39420f4f30667e22c",

|

||||

"only-arches": [

|

||||

"aarch64"

|

||||

]

|

||||

@@ -145,7 +145,7 @@

|

||||

"sources" : [

|

||||

{

|

||||

"type" : "git",

|

||||

"url" : "file:///home/tentri/Documents/Alpaca",

|

||||

"url": "https://github.com/Jeffser/Alpaca.git",

|

||||

"branch" : "main"

|

||||

}

|

||||

]

|

||||

|

||||

@@ -80,6 +80,28 @@

|

||||

<url type="contribute">https://github.com/Jeffser/Alpaca/discussions/154</url>

|

||||

<url type="vcs-browser">https://github.com/Jeffser/Alpaca</url>

|

||||

<releases>

|

||||

<release version="1.0.6" date="2024-08-04">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.0.6</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Changed shortcuts to standards</li>

|

||||

<li>Moved 'Manage Models' button to primary menu</li>

|

||||

<li>Stable support for GGUF model files</li>

|

||||

<li>General optimizations</li>

|

||||

</ul>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Better handling of enter key (important for Japanese input)</li>

|

||||

<li>Removed sponsor dialog</li>

|

||||

<li>Added sponsor link in about dialog</li>

|

||||

<li>Changed window and elements dimensions</li>

|

||||

<li>Selected model changes when entering model manager</li>

|

||||

<li>Better image tooltips</li>

|

||||

<li>GGUF Support</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="1.0.5" date="2024-08-02">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.0.5</url>

|

||||

<description>

|

||||

|

||||

@@ -1,5 +1,5 @@

|

||||

project('Alpaca', 'c',

|

||||

version: '1.0.5',

|

||||

version: '1.0.6',

|

||||

meson_version: '>= 0.62.0',

|

||||

default_options: [ 'warning_level=2', 'werror=false', ],

|

||||

)

|

||||

|

||||

948

po/alpaca.pot

File diff suppressed because it is too large

975

po/nb_NO.po

File diff suppressed because it is too large

960

po/pt_BR.po

File diff suppressed because it is too large

1025

po/zh_CN.po

File diff suppressed because it is too large

@@ -1,15 +1,19 @@

|

||||

# connection_handler.py

|

||||

import json, requests

|

||||

"""

|

||||

Handles requests to remote and integrated instances of Ollama

|

||||

"""

|

||||

import json

|

||||

import requests

|

||||

#OK=200 response.status_code

|

||||

url = None

|

||||

bearer_token = None

|

||||

URL = None

|

||||

BEARER_TOKEN = None

|

||||

|

||||

def get_headers(include_json:bool) -> dict:

|

||||

headers = {}

|

||||

if include_json:

|

||||

headers["Content-Type"] = "application/json"

|

||||

if bearer_token:

|

||||

headers["Authorization"] = "Bearer {}".format(bearer_token)

|

||||

if BEARER_TOKEN:

|

||||

headers["Authorization"] = "Bearer {}".format(BEARER_TOKEN)

|

||||

return headers if len(headers.keys()) > 0 else None

|

||||

|

||||

def simple_get(connection_url:str) -> dict:

|

||||

|

||||
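The header logic in this hunk can be tried in isolation. The sketch below takes the token as a parameter instead of reading the module-level `BEARER_TOKEN`; that change and the name `build_headers` are assumptions made so the example is self-contained.

```python
from typing import Optional

def build_headers(include_json: bool, bearer_token: Optional[str] = None) -> Optional[dict]:
    """Stand-alone variant of get_headers() with the token passed in explicitly."""
    headers = {}
    if include_json:
        headers["Content-Type"] = "application/json"
    if bearer_token:
        headers["Authorization"] = "Bearer {}".format(bearer_token)
    # Same result as the original: an empty header set is reported as None.
    return headers or None
```

Returning `None` for an empty dict means the result can be passed straight to `requests` as `headers=`, which accepts `None`.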

@@ -1,11 +1,15 @@

|

||||

# dialogs.py

|

||||

|

||||

from gi.repository import Adw, Gtk, Gdk, GLib, GtkSource, Gio, GdkPixbuf

|

||||

"""

|

||||

Handles UI dialogs

|

||||

"""

|

||||

import os

|

||||

import logging

|

||||

from pytube import YouTube

|

||||

from html2text import html2text

|

||||

from gi.repository import Adw, Gtk

|

||||

from . import connection_handler

|

||||

|

||||

logger = logging.getLogger(__name__)

|

||||

# CLEAR CHAT | WORKS

|

||||

|

||||

def clear_chat_response(self, dialog, task):

|

||||

@@ -54,9 +58,11 @@ def delete_chat(self, chat_name):

|

||||

# RENAME CHAT | WORKS

|

||||

|

||||

def rename_chat_response(self, dialog, task, old_chat_name, entry, label_element):

|

||||

if not entry: return

|

||||

if not entry:

|

||||

return

|

||||

new_chat_name = entry.get_text()

|

||||

if old_chat_name == new_chat_name: return

|

||||

if old_chat_name == new_chat_name:

|

||||

return

|

||||

if new_chat_name and (task is None or dialog.choose_finish(task) == "rename"):

|

||||

self.rename_chat(old_chat_name, new_chat_name, label_element)

|

||||

|

||||

@@ -82,7 +88,8 @@ def rename_chat(self, chat_name, label_element):

|

||||

|

||||

def new_chat_response(self, dialog, task, entry):

|

||||

chat_name = _("New Chat")

|

||||

if entry is not None and entry.get_text() != "": chat_name = entry.get_text()

|

||||

if entry is not None and entry.get_text() != "":

|

||||

chat_name = entry.get_text()

|

||||

if chat_name and (task is None or dialog.choose_finish(task) == "create"):

|

||||

self.new_chat(chat_name)

|

||||

|

||||

@@ -243,15 +250,15 @@ def create_model_from_existing(self):

|

||||

)

|

||||

|

||||

def create_model_from_file_response(self, file_dialog, result):

|

||||

try: file = file_dialog.open_finish(result)

|

||||

except:

|

||||

self.logger.error(e)

|

||||

return

|

||||

try:

|

||||

self.create_model(file.get_path(), True)

|

||||

file = file_dialog.open_finish(result)

|

||||

try:

|

||||

self.create_model(file.get_path(), True)

|

||||

except Exception as e:

|

||||

logger.error(e)

|

||||

self.show_toast(_("An error occurred while creating the model"), self.main_overlay)

|

||||

except Exception as e:

|

||||

self.logger.error(e)

|

||||

self.show_toast(_("An error occurred while creating the model"), self.main_overlay)

|

||||

logger.error(e)

|

||||

|

||||

def create_model_from_file(self):

|

||||

file_dialog = Gtk.FileDialog(default_filter=self.file_filter_gguf)

|

||||

@@ -286,24 +293,24 @@ def attach_file_response(self, file_dialog, result):

|

||||

"image": ["png", "jpeg", "jpg", "webp", "gif"],

|

||||

"pdf": ["pdf"]

|

||||

}

|

||||

try: file = file_dialog.open_finish(result)

|

||||

except:

|

||||

self.logger.error(e)

|

||||

try:

|

||||

file = file_dialog.open_finish(result)

|

||||

except Exception as e:

|

||||

logger.error(e)

|

||||

return

|

||||

extension = file.get_path().split(".")[-1]

|

||||

file_type = next(key for key, value in file_types.items() if extension in value)

|

||||

if not file_type: return

|

||||

if not file_type:

|

||||

return

|

||||

if file_type == 'image' and not self.verify_if_image_can_be_used():

|

||||

self.show_toast(_("Image recognition is only available on specific models"), self.main_overlay)

|

||||

return

|

||||

self.attach_file(file.get_path(), file_type)

|

||||

|

||||

|

||||

def attach_file(self, filter):

|

||||

file_dialog = Gtk.FileDialog(default_filter=filter)

|

||||

def attach_file(self, file_filter):

|

||||

file_dialog = Gtk.FileDialog(default_filter=file_filter)

|

||||

file_dialog.open(self, None, lambda file_dialog, result: attach_file_response(self, file_dialog, result))

|

||||

|

||||

|

||||

# YouTube caption | WORKS

|

||||

|

||||

def youtube_caption_response(self, dialog, task, video_url, caption_drop_down):

|

||||
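One detail in the extension lookup above: `next()` called on a generator without a default raises `StopIteration` when nothing matches, so the `if not file_type` guard would not be reached for an unknown extension. A possible variant, shown here as a sketch, passes a default. `detect_file_type` is a hypothetical name, and the mapping lists only the two entries visible in this hunk.

```python
# Subset of the mapping visible in the diff; the real dict may hold more entries.
FILE_TYPES = {
    "image": ["png", "jpeg", "jpg", "webp", "gif"],
    "pdf": ["pdf"],
}

def detect_file_type(path: str):
    """Return the file-type key for `path`, or None when the extension is unknown."""
    extension = path.split(".")[-1]
    # The second argument to next() is returned instead of raising StopIteration.
    return next((key for key, value in FILE_TYPES.items() if extension in value), None)
```

With the default in place, a caller can keep the plain `if not file_type: return` check.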

@@ -321,7 +328,7 @@ def youtube_caption_response(self, dialog, task, video_url, caption_drop_down):

|

||||

if not os.path.exists(os.path.join(self.cache_dir, 'tmp/youtube')):

|

||||

os.makedirs(os.path.join(self.cache_dir, 'tmp/youtube'))

|

||||

file_path = os.path.join(os.path.join(self.cache_dir, 'tmp/youtube'), f'{yt.title} ({selected_caption.split(" | ")[0]})')

|

||||

with open(file_path, 'w+') as f:

|

||||

with open(file_path, 'w+', encoding="utf-8") as f:

|

||||

f.write(text)

|

||||

self.attach_file(file_path, 'youtube')

|

||||

|

||||

@@ -333,7 +340,8 @@ def youtube_caption(self, video_url):

|

||||

self.show_toast(_("This video does not have any transcriptions"), self.main_overlay)

|

||||

return

|

||||

caption_list = Gtk.StringList()

|

||||

for caption in captions: caption_list.append("{} | {}".format(caption.name, caption.code))

|

||||

for caption in captions:

|

||||

caption_list.append("{} | {}".format(caption.name, caption.code))

|

||||

caption_drop_down = Gtk.DropDown(

|

||||

enable_search=True,

|

||||

model=caption_list

|

||||

@@ -369,7 +377,7 @@ def attach_website_response(self, dialog, task, url):

|

||||

os.makedirs('/tmp/alpaca/websites/')

|

||||

md_name = self.generate_numbered_name('website.md', os.listdir('/tmp/alpaca/websites'))

|

||||

file_path = os.path.join('/tmp/alpaca/websites/', md_name)

|

||||

with open(file_path, 'w+') as f:

|

||||

with open(file_path, 'w+', encoding="utf-8") as f:

|

||||

f.write('{}\n\n{}'.format(url, md))

|

||||

self.attach_file(file_path, 'website')

|

||||

else:

|

||||

@@ -390,33 +398,3 @@ def attach_website(self, url):

|

||||

cancellable = None,

|

||||

callback = lambda dialog, task, url=url: attach_website_response(self, dialog, task, url)

|

||||

)

|

||||

|

||||

# Begging for money :3

|

||||

|

||||

def support_response(self, dialog, task):

|

||||

res = dialog.choose_finish(task)

|

||||

if res == 'later': return

|

||||

elif res == 'support':

|

||||

self.show_toast(_("Thank you!"), self.main_overlay)

|

||||

os.system('xdg-open https://github.com/sponsors/Jeffser')

|

||||

elif res == 'nope':

|

||||

self.show_toast(_("Visit Alpaca's website if you change your mind!"), self.main_overlay)

|

||||

self.show_support = False

|

||||

self.save_server_config()

|

||||

|

||||

def support(self):

|

||||

dialog = Adw.AlertDialog(

|

||||

heading=_("Support"),

|

||||

body=_("Are you enjoying Alpaca? Consider sponsoring the project!"),

|

||||

close_response="nope"

|

||||

)

|

||||

dialog.add_response("nope", _("Don't show again"))

|

||||

dialog.set_response_appearance("nope", Adw.ResponseAppearance.DESTRUCTIVE)

|

||||

dialog.add_response("later", _("Later"))

|

||||

dialog.add_response("support", _("Support"))

|

||||

dialog.set_response_appearance("support", Adw.ResponseAppearance.SUGGESTED)

|

||||

dialog.choose(

|

||||

parent = self,

|

||||

cancellable = None,

|

||||

callback = lambda dialog, task: support_response(self, dialog, task)

|

||||

)

|

||||

|

||||

25

src/internal.py

Normal file


@@ -0,0 +1,25 @@

# internal.py
"""
Handles paths, they can be different if the app is running as a Flatpak
"""
import os

APP_ID = "com.jeffser.Alpaca"

IN_FLATPAK = bool(os.getenv("FLATPAK_ID"))

def get_xdg_home(env, default):
    if IN_FLATPAK:
        return os.getenv(env)
    base = os.getenv(env) or os.path.expanduser(default)
    path = os.path.join(base, APP_ID)
    if not os.path.exists(path):
        os.makedirs(path)
    return path


data_dir = get_xdg_home("XDG_DATA_HOME", "~/.local/share")
config_dir = get_xdg_home("XDG_CONFIG_HOME", "~/.config")
cache_dir = get_xdg_home("XDG_CACHE_HOME", "~/.cache")

source_dir = os.path.abspath(os.path.dirname(__file__))
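The path resolution in `internal.py` can be illustrated without touching the real environment. The sketch below passes the environment mapping and the Flatpak flag as parameters; `resolve_xdg_dir` and both extra parameters are assumptions added for testability and are not the module's actual interface.

```python
import os

APP_ID = "com.jeffser.Alpaca"

def resolve_xdg_dir(env_name, default, environ, in_flatpak):
    """Parameterised variant of get_xdg_home() for experimentation."""
    if in_flatpak:
        # Inside a Flatpak the XDG variables already point at per-app directories.
        return environ.get(env_name)
    # Outside a Flatpak, fall back to the default and add a per-app subdirectory.
    base = environ.get(env_name) or os.path.expanduser(default)
    path = os.path.join(base, APP_ID)
    os.makedirs(path, exist_ok=True)
    return path
```

Outside a Flatpak the result always ends in the application ID, so data, config and cache stay separate from other applications' files.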

@@ -1,29 +1,35 @@

|

||||

# local_instance.py

|

||||

import subprocess, os, threading

|

||||

"""

|

||||

Handles running, stopping and resetting the integrated Ollama instance

|

||||

"""

|

||||

import subprocess

|

||||

import os

|

||||

from time import sleep

|

||||

from logging import getLogger

|

||||

from .internal import data_dir, cache_dir

|

||||

|

||||

|

||||

logger = getLogger(__name__)

|

||||

|

||||

instance = None

|

||||

port = 11435

|

||||

data_dir = os.getenv("XDG_DATA_HOME")

|

||||

overrides = {}

|

||||

|

||||

def start():

|

||||

if not os.path.isdir(os.path.join(os.getenv("XDG_CACHE_HOME"), 'tmp/ollama')):

|

||||

os.mkdir(os.path.join(os.getenv("XDG_CACHE_HOME"), 'tmp/ollama'))

|

||||

global instance, overrides

|

||||

if not os.path.isdir(os.path.join(cache_dir, 'tmp/ollama')):

|

||||

os.mkdir(os.path.join(cache_dir, 'tmp/ollama'))

|

||||

global instance

|

||||

params = overrides.copy()

|

||||

params["OLLAMA_HOST"] = f"127.0.0.1:{port}" # You can't change this directly sorry :3

|

||||

params["HOME"] = data_dir

|

||||

params["TMPDIR"] = os.path.join(os.getenv("XDG_CACHE_HOME"), 'tmp/ollama')

|

||||

instance = subprocess.Popen(["/app/bin/ollama", "serve"], env={**os.environ, **params}, stderr=subprocess.PIPE, text=True)

|

||||

params["TMPDIR"] = os.path.join(cache_dir, 'tmp/ollama')

|

||||

instance = subprocess.Popen(["ollama", "serve"], env={**os.environ, **params}, stderr=subprocess.PIPE, text=True)

|

||||

logger.info("Starting Alpaca's Ollama instance...")

|

||||

logger.debug(params)

|

||||

sleep(1)

|

||||

logger.info("Started Alpaca's Ollama instance")

|

||||

v_str = subprocess.check_output("ollama -v", shell=True).decode('utf-8')

|

||||

logger.info('Ollama version: {}'.format(v_str.split('client version is ')[1].strip()))

|

||||

|

||||

def stop():

|

||||

logger.info("Stopping Alpaca's Ollama instance")

|

||||
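The environment passed to `ollama serve` in `start()` is built so that user overrides cannot replace `OLLAMA_HOST` or `HOME`: both are assigned after the overrides are copied. A minimal sketch of that ordering follows; `build_env` and its parameters are hypothetical and exist only to make the merge testable.

```python
import os

def build_env(base_env, overrides, port, data_dir, cache_dir):
    """Merge the process environment with overrides, then force the fixed values."""
    params = overrides.copy()
    # Assigned after the copy, so values supplied in `overrides` are replaced.
    params["OLLAMA_HOST"] = f"127.0.0.1:{port}"
    params["HOME"] = data_dir
    params["TMPDIR"] = os.path.join(cache_dir, "tmp/ollama")
    # Later mappings win, so params take precedence over base_env.
    return {**base_env, **params}
```

Any other key in `overrides` passes through unchanged and shadows the same key in the base environment.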

@@ -39,4 +45,3 @@ def reset():

|

||||

stop()

|

||||

sleep(1)

|

||||

start()

|

||||

|

||||

|

||||

51

src/main.py


@@ -16,21 +16,35 @@

|

||||

# along with this program. If not, see <https://www.gnu.org/licenses/>.

|

||||

#

|

||||

# SPDX-License-Identifier: GPL-3.0-or-later

|

||||

"""

|

||||

Main script run at launch, handles actions, about dialog and the app itself (not the window)

|

||||

"""

|

||||

|

||||

import gi

|

||||

gi.require_version('Gtk', '4.0')

|

||||

gi.require_version('Adw', '1')

|

||||

from gi.repository import Gtk, Gio, Adw, GLib

|

||||

|

||||

from .window import AlpacaWindow

|

||||

from .internal import cache_dir, data_dir

|

||||

|

||||

import sys

|

||||

import logging

|

||||

import gi

|

||||

import os

|

||||

|

||||

gi.require_version('Gtk', '4.0')

|

||||

gi.require_version('Adw', '1')

|

||||

|

||||

from gi.repository import Gtk, Gio, Adw, GLib

|

||||

from .window import AlpacaWindow

|

||||

|

||||

|

||||

logger = logging.getLogger(__name__)

|

||||

|

||||

translators = [

|

||||

'Alex K (Russian) https://github.com/alexkdeveloper',

|

||||

'Jeffry Samuel (Spanish) https://github.com/jeffser',

|

||||

'Louis Chauvet-Villaret (French) https://github.com/loulou64490',

|

||||

'Théo FORTIN (French) https://github.com/topiga',

|

||||

'Daimar Stein (Brazilian Portuguese) https://github.com/not-a-dev-stein',

|

||||

'CounterFlow64 (Norwegian) https://github.com/CounterFlow64',

|

||||

'Aritra Saha (Bengali) https://github.com/olumolu',

|

||||

'Yuehao Sui (Simplified Chinese) https://github.com/8ar10der',

|

||||

'Aleksana (Simplified Chinese) https://github.com/Aleksanaa'

|

||||

]

|

||||

|

||||

class AlpacaApplication(Adw.Application):

|

||||

"""The main application singleton class."""

|

||||

@@ -38,8 +52,8 @@ class AlpacaApplication(Adw.Application):

|

||||

def __init__(self, version):

|

||||

super().__init__(application_id='com.jeffser.Alpaca',

|

||||

flags=Gio.ApplicationFlags.DEFAULT_FLAGS)

|

||||

self.create_action('quit', lambda *_: self.quit(), ['<primary>q'])

|

||||

self.create_action('preferences', lambda *_: AlpacaWindow.show_preferences_dialog(self.props.active_window), ['<primary>p'])

|

||||

self.create_action('quit', lambda *_: self.quit(), ['<primary>w'])

|

||||

self.create_action('preferences', lambda *_: AlpacaWindow.show_preferences_dialog(self.props.active_window), ['<primary>comma'])

|

||||

self.create_action('about', self.on_about_action)

|

||||

self.version = version

|

||||

|

||||

@@ -58,12 +72,13 @@ class AlpacaApplication(Adw.Application):

|

||||

support_url="https://github.com/Jeffser/Alpaca/discussions/155",

|

||||

developers=['Jeffser https://jeffser.com'],

|

||||

designers=['Jeffser https://jeffser.com', 'Tobias Bernard (App Icon) https://tobiasbernard.com/'],

|

||||

translator_credits='Alex K (Russian) https://github.com/alexkdeveloper\nJeffser (Spanish) https://jeffser.com\nDaimar Stein (Brazilian Portuguese) https://github.com/not-a-dev-stein\nLouis Chauvet-Villaret (French) https://github.com/loulou64490\nCounterFlow64 (Norwegian) https://github.com/CounterFlow64\nAritra Saha (Bengali) https://github.com/olumolu\nYuehao Sui (Simplified Chinese) https://github.com/8ar10der',

|

||||

translator_credits='\n'.join(translators),

|

||||

copyright='© 2024 Jeffser\n© 2024 Ollama',

|

||||

issue_url='https://github.com/Jeffser/Alpaca/issues',

|

||||

license_type=3,

|

||||

website="https://jeffser.com/alpaca",

|

||||

debug_info=open(os.path.join(os.getenv("XDG_DATA_HOME"), 'tmp.log'), 'r').read())

|

||||

debug_info=open(os.path.join(data_dir, 'tmp.log'), 'r').read())

|

||||

about.add_link("Become a Sponsor", "https://github.com/sponsors/Jeffser")

|

||||

about.present(parent=self.props.active_window)

|

||||

|

||||

def create_action(self, name, callback, shortcuts=None):

|

||||

@@ -75,16 +90,16 @@ class AlpacaApplication(Adw.Application):

|

||||

|

||||

|

||||

def main(version):

|

||||

if os.path.isfile(os.path.join(os.getenv("XDG_DATA_HOME"), 'tmp.log')):

|

||||

os.remove(os.path.join(os.getenv("XDG_DATA_HOME"), 'tmp.log'))

|

||||

if os.path.isdir(os.path.join(os.getenv("XDG_CACHE_HOME"), 'tmp')):

|

||||

os.system('rm -rf ' + os.path.join(os.getenv("XDG_CACHE_HOME"), "tmp/*"))

|

||||

if os.path.isfile(os.path.join(data_dir, 'tmp.log')):

|

||||

os.remove(os.path.join(data_dir, 'tmp.log'))

|

||||

if os.path.isdir(os.path.join(cache_dir, 'tmp')):

|

||||

os.system('rm -rf ' + os.path.join(cache_dir, "tmp/*"))

|

||||

else:

|

||||

os.mkdir(os.path.join(os.getenv("XDG_CACHE_HOME"), 'tmp'))

|

||||

os.mkdir(os.path.join(cache_dir, 'tmp'))

|

||||

logging.basicConfig(

|

||||

format="%(levelname)s\t[%(filename)s | %(funcName)s] %(message)s",

|

||||

level=logging.INFO,

|

||||

handlers=[logging.FileHandler(filename=os.path.join(os.getenv("XDG_DATA_HOME"), 'tmp.log')), logging.StreamHandler(stream=sys.stdout)]

|

||||

handlers=[logging.FileHandler(filename=os.path.join(data_dir, 'tmp.log')), logging.StreamHandler(stream=sys.stdout)]

|

||||

)

|

||||

app = AlpacaApplication(version)

|

||||

logger.info(f"Alpaca version: {app.version}")

|

||||

|

||||

@@ -44,7 +44,8 @@ alpaca_sources = [

|

||||

'local_instance.py',

|

||||

'available_models.json',

|

||||

'available_models_descriptions.py',

|

||||

'table_widget.py'

|

||||

'table_widget.py',

|

||||

'internal.py'

|

||||

]

|

||||

|

||||

install_data(alpaca_sources, install_dir: moduledir)

|

||||

|

||||

@@ -1,5 +1,10 @@

|

||||

#table_widget.py

|

||||

"""

|

||||

Handles the table widget shown in chat responses

|

||||

"""

|

||||

|

||||

import gi

|

||||

from gi.repository import Adw

|

||||

gi.require_version('Gtk', '4.0')

|

||||

from gi.repository import Gtk, GObject, Gio

|

||||

|

||||

import re

|

||||

|

||||

336

src/window.py


@@ -16,33 +16,37 @@

|

||||

# along with this program. If not, see <https://www.gnu.org/licenses/>.

|

||||

#

|

||||

# SPDX-License-Identifier: GPL-3.0-or-later

|

||||

|

||||

import gi

|

||||

gi.require_version('GtkSource', '5')

|

||||

gi.require_version('GdkPixbuf', '2.0')

|

||||

from gi.repository import Adw, Gtk, Gdk, GLib, GtkSource, Gio, GdkPixbuf

|

||||

import json, requests, threading, os, re, base64, sys, gettext, locale, subprocess, uuid, shutil, tarfile, tempfile, logging, random

|

||||

from time import sleep

|

||||

"""

|

||||

Handles the main window

|

||||

"""

|

||||

import json, threading, os, re, base64, sys, gettext, uuid, shutil, tarfile, tempfile, logging

|

||||

from io import BytesIO

|

||||

from PIL import Image

|

||||

from pypdf import PdfReader

|

||||

from datetime import datetime

|

||||

|

||||

import gi

|

||||

gi.require_version('GtkSource', '5')

|

||||

gi.require_version('GdkPixbuf', '2.0')

|

||||

|

||||

from gi.repository import Adw, Gtk, Gdk, GLib, GtkSource, Gio, GdkPixbuf

|

||||

|

||||

from . import dialogs, local_instance, connection_handler, available_models_descriptions

|

||||

from .table_widget import TableWidget

|

||||

from .internal import config_dir, data_dir, cache_dir, source_dir

|

||||

|

||||

logger = logging.getLogger(__name__)

|

||||

|

||||

|

||||

@Gtk.Template(resource_path='/com/jeffser/Alpaca/window.ui')

|

||||

class AlpacaWindow(Adw.ApplicationWindow):

|

||||

config_dir = os.getenv("XDG_CONFIG_HOME")

|

||||

data_dir = os.getenv("XDG_DATA_HOME")

|

||||

app_dir = os.getenv("FLATPAK_DEST")

|

||||

cache_dir = os.getenv("XDG_CACHE_HOME")

|

||||

config_dir = config_dir

|

||||

data_dir = data_dir

|

||||

cache_dir = cache_dir

|

||||

|

||||

__gtype_name__ = 'AlpacaWindow'

|

||||

|

||||

localedir = os.path.join(os.path.abspath(os.path.dirname(__file__)), 'locale')

|

||||

localedir = os.path.join(source_dir, 'locale')

|

||||

|

||||

gettext.bindtextdomain('com.jeffser.Alpaca', localedir)

|

||||

gettext.textdomain('com.jeffser.Alpaca')

|

||||

@@ -60,7 +64,6 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

pulling_models = {}

|

||||

chats = {"chats": {_("New Chat"): {"messages": {}}}, "selected_chat": "New Chat", "order": []}

|

||||

attachments = {}

|

||||

show_support = True

|

||||

|

||||

#Override elements

|

||||

override_HSA_OVERRIDE_GFX_VERSION = Gtk.Template.Child()

|

||||

@@ -68,7 +71,9 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

override_HIP_VISIBLE_DEVICES = Gtk.Template.Child()

|

||||

|

||||

#Elements

|

||||

split_view_overlay = Gtk.Template.Child()

|

||||

regenerate_button : Gtk.Button = None

|

||||

selected_chat_row : Gtk.ListBoxRow = None

|

||||

create_model_base = Gtk.Template.Child()

|

||||

create_model_name = Gtk.Template.Child()

|

||||

create_model_system = Gtk.Template.Child()

|

||||

@@ -134,22 +139,23 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

@Gtk.Template.Callback()

|

||||

def verify_if_image_can_be_used(self, pspec=None, user_data=None):

|

||||

logger.debug("Verifying if image can be used")

|

||||

if self.model_drop_down.get_selected_item() == None: return True

|

||||

if self.model_drop_down.get_selected_item() == None:

|

||||

return True

|

||||

selected = self.convert_model_name(self.model_drop_down.get_selected_item().get_string(), 1).split(":")[0]

|

||||

if selected in [key for key, value in self.available_models.items() if value["image"]]:

|

||||

for name, content in self.attachments.items():

|

||||

if content['type'] == 'image':

|

||||

content['button'].set_css_classes(["flat"])

|

||||

return True

|

||||

else:

|

||||

for name, content in self.attachments.items():

|

||||

if content['type'] == 'image':

|

||||

content['button'].set_css_classes(["flat", "error"])

|

||||

return False

|

||||

for name, content in self.attachments.items():

|

||||

if content['type'] == 'image':

|

||||

content['button'].set_css_classes(["flat", "error"])

|

||||

return False

|

||||

|

||||

@Gtk.Template.Callback()

|

||||

def stop_message(self, button=None):

|

||||

if self.loading_spinner: self.chat_container.remove(self.loading_spinner)

|

||||

if self.loading_spinner:

|

||||

self.chat_container.remove(self.loading_spinner)

|

||||

self.toggle_ui_sensitive(True)

|

||||

self.switch_send_stop_button()

|

||||

self.bot_message = None

|

||||

@@ -173,8 +179,10 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

self.save_history()

|

||||

self.show_toast(_("Message edited successfully"), self.main_overlay)

|

||||

|

||||

if self.bot_message or self.get_focus() not in (self.message_text_view, self.send_button): return

|

||||

if not self.message_text_view.get_buffer().get_text(self.message_text_view.get_buffer().get_start_iter(), self.message_text_view.get_buffer().get_end_iter(), False): return

|

||||

if self.bot_message or self.get_focus() not in (self.message_text_view, self.send_button):

|

||||

return

|

||||

if not self.message_text_view.get_buffer().get_text(self.message_text_view.get_buffer().get_start_iter(), self.message_text_view.get_buffer().get_end_iter(), False):

|

||||

return

|

||||

current_chat_row = self.chat_list_box.get_selected_row()

|

||||

self.chat_list_box.unselect_all()

|

||||

self.chat_list_box.remove(current_chat_row)

|

||||

@@ -187,18 +195,19 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

if current_model is None:

|

||||

self.show_toast(_("Please select a model before chatting"), self.main_overlay)

|

||||

return

|

||||

id = self.generate_uuid()

|

||||

message_id = self.generate_uuid()

|

||||

|

||||

attached_images = []

|

||||

attached_files = {}

|

||||

can_use_images = self.verify_if_image_can_be_used()

|

||||

for name, content in self.attachments.items():

|

||||

if content["type"] == 'image' and can_use_images: attached_images.append(name)

|

||||

if content["type"] == 'image' and can_use_images:

|

||||

attached_images.append(name)

|

||||

else:

|

||||

attached_files[name] = content['type']

|

||||

if not os.path.exists(os.path.join(self.data_dir, "chats", self.chats['selected_chat'], id)):

|

||||

os.makedirs(os.path.join(self.data_dir, "chats", self.chats['selected_chat'], id))

|

||||

shutil.copy(content['path'], os.path.join(self.data_dir, "chats", self.chats['selected_chat'], id, name))

|

||||

if not os.path.exists(os.path.join(self.data_dir, "chats", self.chats['selected_chat'], message_id)):

|

||||

os.makedirs(os.path.join(self.data_dir, "chats", self.chats['selected_chat'], message_id))

|

||||

shutil.copy(content['path'], os.path.join(self.data_dir, "chats", self.chats['selected_chat'], message_id, name))

|

||||

content["button"].get_parent().remove(content["button"])

|

||||

self.attachments = {}

|

||||

self.attachment_box.set_visible(False)

|

||||

@@ -207,16 +216,16 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

|

||||

current_datetime = datetime.now()

|

||||

|

||||

self.chats["chats"][self.chats["selected_chat"]]["messages"][id] = {

|

||||

self.chats["chats"][self.chats["selected_chat"]]["messages"][message_id] = {

|

||||

"role": "user",

|

||||

"model": "User",

|

||||

"date": current_datetime.strftime("%Y/%m/%d %H:%M:%S"),

|

||||

"content": self.message_text_view.get_buffer().get_text(self.message_text_view.get_buffer().get_start_iter(), self.message_text_view.get_buffer().get_end_iter(), False)

|

||||

}

|

||||

if len(attached_images) > 0:

|

||||

self.chats["chats"][self.chats["selected_chat"]]["messages"][id]['images'] = attached_images

|

||||

self.chats["chats"][self.chats["selected_chat"]]["messages"][message_id]['images'] = attached_images

|

||||

if len(attached_files.keys()) > 0:

|

||||

self.chats["chats"][self.chats["selected_chat"]]["messages"][id]['files'] = attached_files

|

||||

self.chats["chats"][self.chats["selected_chat"]]["messages"][message_id]['files'] = attached_files

|

||||

data = {

|

||||

"model": current_model,

|

||||

"messages": self.convert_history_to_ollama(),

|

||||

@@ -229,12 +238,12 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

#self.attachments[name] = {"path": file_path, "type": file_type, "content": content}

|

||||

raw_message = self.message_text_view.get_buffer().get_text(self.message_text_view.get_buffer().get_start_iter(), self.message_text_view.get_buffer().get_end_iter(), False)

|

||||

formated_date = GLib.markup_escape_text(self.generate_datetime_format(current_datetime))

|

||||

self.show_message(raw_message, False, f"\n\n<small>{formated_date}</small>", attached_images, attached_files, id=id)

|

||||

self.show_message(raw_message, False, f"\n\n<small>{formated_date}</small>", attached_images, attached_files, message_id=message_id)

|

||||

self.message_text_view.get_buffer().set_text("", 0)

|

||||

self.loading_spinner = Gtk.Spinner(spinning=True, margin_top=12, margin_bottom=12, hexpand=True)

|

||||

self.chat_container.append(self.loading_spinner)

|

||||

bot_id=self.generate_uuid()

|

||||

self.show_message("", True, id=bot_id)

|

||||

self.show_message("", True, message_id=bot_id)

|

||||

|

||||

thread = threading.Thread(target=self.run_message, args=(data['messages'], data['model'], bot_id))

|

||||

thread.start()

|

||||

@@ -244,17 +253,13 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

generate_title_thread = threading.Thread(target=self.generate_chat_title, args=(message_data, self.chat_list_box.get_selected_row().get_child()))

|

||||

generate_title_thread.start()

|

||||

|

||||

@Gtk.Template.Callback()

|

||||

def manage_models_button_activate(self, button=None):

|

||||

logger.debug(f"Managing models")

|

||||

self.update_list_local_models()

|

||||

self.manage_models_dialog.present(self)

|

||||

|

||||

@Gtk.Template.Callback()

|

||||

def welcome_carousel_page_changed(self, carousel, index):

|

||||

logger.debug("Showing welcome carousel")

|

||||

if index == 0: self.welcome_previous_button.set_sensitive(False)

|

||||

else: self.welcome_previous_button.set_sensitive(True)

|

||||

if index == 0:

|

||||

self.welcome_previous_button.set_sensitive(False)

|

||||

else:

|

||||

self.welcome_previous_button.set_sensitive(True)

|

||||

if index == carousel.get_n_pages()-1:

|

||||

self.welcome_next_button.set_label(_("Close"))

|

||||

self.welcome_next_button.set_tooltip_text(_("Close"))

|

||||

@@ -268,7 +273,8 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

|

||||

@Gtk.Template.Callback()

|

||||

def welcome_next_button_activate(self, button):

|

||||

if button.get_label() == "Next": self.welcome_carousel.scroll_to(self.welcome_carousel.get_nth_page(self.welcome_carousel.get_position()+1), True)

|

||||

if button.get_label() == "Next":

|

||||

self.welcome_carousel.scroll_to(self.welcome_carousel.get_nth_page(self.welcome_carousel.get_position()+1), True)

|

||||

else:

|

||||

self.welcome_dialog.force_close()

|

||||

if not self.verify_connection():

|

||||

@@ -336,8 +342,10 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

@Gtk.Template.Callback()

|

||||

def model_spin_changed(self, spin):

|

||||

value = spin.get_value()

|

||||

if spin.get_name() != "temperature": value = round(value)

|

||||

else: value = round(value, 1)

|

||||

if spin.get_name() != "temperature":

|

||||

value = round(value)

|

||||

else:

|

||||

value = round(value, 1)

|

||||

if self.model_tweaks[spin.get_name()] is not None and self.model_tweaks[spin.get_name()] != value:

|

||||

self.model_tweaks[spin.get_name()] = value

|

||||

self.save_server_config()

|

||||

@@ -378,18 +386,21 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

overlay.add_overlay(progress_bar)

|

||||

self.pulling_model_list_box.append(overlay)

|

||||

self.navigation_view_manage_models.pop()

|

||||

self.manage_models_dialog.present(self)

|

||||

thread.start()

|

||||

|

||||

@Gtk.Template.Callback()

|

||||

def override_changed(self, entry):

|

||||

name = entry.get_name()

|

||||

value = entry.get_text()

|

||||

if (not value and name not in local_instance.overrides) or (value and value in local_instance.overrides and local_instance.overrides[name] == value): return

|

||||

if not value: del local_instance.overrides[name]

|

||||

else: local_instance.overrides[name] = value

|

||||

if (not value and name not in local_instance.overrides) or (value and value in local_instance.overrides and local_instance.overrides[name] == value):

|

||||

return

|

||||

if not value:

|

||||

del local_instance.overrides[name]

|

||||

else:

|

||||

local_instance.overrides[name] = value

|

||||

self.save_server_config()

|

||||

if not self.run_remote: local_instance.reset()

|

||||

if not self.run_remote:

|

||||

local_instance.reset()

|

||||

|

||||

@Gtk.Template.Callback()

|

||||

def link_button_handler(self, button):

|

||||

@@ -407,7 +418,8 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

for i, key in enumerate(self.available_models.keys()):

|

||||

row = self.available_model_list_box.get_row_at_index(i)

|

||||

row.set_visible(re.search(entry.get_text(), '{} {} {}'.format(row.get_title(), (_("image") if self.available_models[key]['image'] else " "), row.get_subtitle()), re.IGNORECASE))

|

||||

if row.get_visible(): results += 1

|

||||

if row.get_visible():

|

||||

results += 1

|

||||

if entry.get_text() and results == 0:

|

||||

self.available_model_list_box.set_visible(False)

|

||||

self.no_results_page.set_visible(True)

|

||||

@@ -415,13 +427,28 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

self.available_model_list_box.set_visible(True)

|

||||

self.no_results_page.set_visible(False)

|

||||

|

||||

def manage_models_button_activate(self, button=None):

|

||||

logger.debug(f"Managing models")

|

||||

self.update_list_local_models()

|

||||

if len(self.chats["chats"][self.chats["selected_chat"]]["messages"].keys()) > 0:

|

||||

last_model_used = self.chats["chats"][self.chats["selected_chat"]]["messages"][list(self.chats["chats"][self.chats["selected_chat"]]["messages"].keys())[-1]]["model"]

|

||||

last_model_used = self.convert_model_name(last_model_used, 0)

|

||||

for i in range(self.model_string_list.get_n_items()):

|

||||

if self.model_string_list.get_string(i) == last_model_used:

|

||||

self.model_drop_down.set_selected(i)

|

||||

break

|

||||

self.manage_models_dialog.present(self)

|

||||

|

||||

def convert_model_name(self, name:str, mode:int) -> str: # mode=0 name:tag -> Name (tag) | mode=1 Name (tag) -> name:tag

|

||||

if mode == 0: return "{} ({})".format(name.split(":")[0].replace("-", " ").title(), name.split(":")[1])

|

||||

if mode == 1: return "{}:{}".format(name.split(" (")[0].replace(" ", "-").lower(), name.split(" (")[1][:-1])

|

||||

if mode == 0:

|

||||

return "{} ({})".format(name.split(":")[0].replace("-", " ").title(), name.split(":")[1])

|

||||

if mode == 1:

|

||||

return "{}:{}".format(name.split(" (")[0].replace(" ", "-").lower(), name.split(" (")[1][:-1])

|

||||

|

||||

def check_alphanumeric(self, editable, text, length, position):

|

||||

new_text = ''.join([char for char in text if char.isalnum() or char in ['-', '.', ':']])

|

||||

if new_text != text: editable.stop_emission_by_name("insert-text")

|

||||

new_text = ''.join([char for char in text if char.isalnum() or char in ['-', '.', ':', '_']])

|

||||

if new_text != text:

|

||||

editable.stop_emission_by_name("insert-text")

|

||||

|

||||

def create_model(self, model:str, file:bool):

|

||||

modelfile_buffer = self.create_model_modelfile.get_buffer()

|

||||
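
To illustrate the two modes of `convert_model_name` in the hunk above, here is a standalone copy of its logic as a plain function (the method's `self` parameter is dropped for the sketch). The model names used in the usage lines are examples only.

```python
def convert_model_name(name: str, mode: int) -> str:
    """mode=0: 'name:tag' -> 'Name (tag)'; mode=1: 'Name (tag)' -> 'name:tag'."""
    if mode == 0:
        base, tag = name.split(":")[0], name.split(":")[1]
        return "{} ({})".format(base.replace("-", " ").title(), tag)
    if mode == 1:
        pretty, tag = name.split(" (")[0], name.split(" (")[1][:-1]
        return "{}:{}".format(pretty.replace(" ", "-").lower(), tag)
    # Any other mode falls through and returns None, as in the diff.


if __name__ == "__main__":
    pretty = convert_model_name("dolphin-mistral:7b", 0)
    print(pretty)
    print(convert_model_name(pretty, 1))
```

The round trip only holds for names that are lowercase and hyphen-separated, since mode 1 lowercases the name and turns every space back into a hyphen.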

@@ -442,9 +469,10 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

else:

|

||||

##TODO ERROR MESSAGE

|

||||

return

|

||||

self.create_model_base.set_subtitle(self.convert_model_name(model, 1))

|

||||

else:

|

||||

self.create_model_name.set_text(model.split("/")[-1].split(".")[0])

|

||||

self.create_model_base.set_subtitle(self.convert_model_name(model, 1))

|

||||

self.create_model_name.set_text(os.path.splitext(os.path.basename(model))[0])

|

||||

self.create_model_base.set_subtitle(model)

|

||||

self.navigation_view_manage_models.push_by_tag('model_create_page')

|

||||

|

||||

def show_toast(self, message:str, overlay):

|

||||

@@ -460,31 +488,33 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

logger.info(f"{title}, {body}")

|

||||

notification = Gio.Notification.new(title)

|

||||

notification.set_body(body)

|

||||

if icon: notification.set_icon(icon)

|

||||

if icon:

|

||||

notification.set_icon(icon)

|

||||

self.get_application().send_notification(None, notification)

|

||||

|

||||

def delete_message(self, message_element):

|

||||

logger.debug("Deleting message")

|

||||

id = message_element.get_name()

|

||||

del self.chats["chats"][self.chats["selected_chat"]]["messages"][id]

|

||||

message_id = message_element.get_name()

|

||||

del self.chats["chats"][self.chats["selected_chat"]]["messages"][message_id]

|

||||

self.chat_container.remove(message_element)

|

||||

if os.path.exists(os.path.join(self.data_dir, "chats", self.chats['selected_chat'], id)):

|

||||

shutil.rmtree(os.path.join(self.data_dir, "chats", self.chats['selected_chat'], id))

|

||||

if os.path.exists(os.path.join(self.data_dir, "chats", self.chats['selected_chat'], message_id)):

|

||||

shutil.rmtree(os.path.join(self.data_dir, "chats", self.chats['selected_chat'], message_id))

|

||||

self.save_history()

|

||||

|

||||

def copy_message(self, message_element):

|

||||

logger.debug("Copying message")

|

||||

id = message_element.get_name()

|

||||

message_id = message_element.get_name()

|

||||

clipboard = Gdk.Display().get_default().get_clipboard()

|

||||

clipboard.set(self.chats["chats"][self.chats["selected_chat"]]["messages"][id]["content"])

|

||||

clipboard.set(self.chats["chats"][self.chats["selected_chat"]]["messages"][message_id]["content"])

|

||||

self.show_toast(_("Message copied to the clipboard"), self.main_overlay)

|

||||

|

||||

def edit_message(self, message_element, text_view, button_container):

|

||||

logger.debug("Editing message")

|

||||

if self.editing_message: self.send_message()

|

||||

if self.editing_message:

|

||||

self.send_message()

|

||||

|

||||

button_container.set_visible(False)

|

||||

id = message_element.get_name()

|

||||

message_id = message_element.get_name()

|

||||

|

||||

text_buffer = text_view.get_buffer()

|

||||

end_iter = text_buffer.get_end_iter()

|

||||

@@ -498,7 +528,7 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

text_view.set_css_classes(["view", "editing_message_textview"])

|

||||

text_view.set_cursor_visible(True)

|

||||

|

||||

self.editing_message = {"text_view": text_view, "id": id, "button_container": button_container, "footer": footer}

|

||||

self.editing_message = {"text_view": text_view, "id": message_id, "button_container": button_container, "footer": footer}

|

||||

|

||||

def preview_file(self, file_path, file_type, presend_name):

|

||||

logger.debug(f"Previewing file: {file_path}")

|

||||

@@ -541,22 +571,24 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

||||

|

||||

def convert_history_to_ollama(self):

|

||||

messages = []

|

||||

for id, message in self.chats["chats"][self.chats["selected_chat"]]["messages"].items():

|

||||

for message_id, message in self.chats["chats"][self.chats["selected_chat"]]["messages"].items():

|

||||

new_message = message.copy()

|

||||

if 'files' in message and len(message['files']) > 0:

|

||||

del new_message['files']

|

||||

new_message['content'] = ''

|

||||

for name, file_type in message['files'].items():

|

||||

file_path = os.path.join(self.data_dir, "chats", self.chats['selected_chat'], id, name)

|

||||

file_path = os.path.join(self.data_dir, "chats", self.chats['selected_chat'], message_id, name)

|

||||

file_data = self.get_content_of_file(file_path, file_type)

|

||||

if file_data: new_message['content'] += f"```[{name}]\n{file_data}\n```"

|

||||

if file_data:

|

||||

new_message['content'] += f"```[{name}]\n{file_data}\n```"

|

||||

new_message['content'] += message['content']

|

||||

if 'images' in message and len(message['images']) > 0:

|

||||

new_message['images'] = []

|

||||

for name in message['images']:

|

||||

file_path = os.path.join(self.data_dir, "chats", self.chats['selected_chat'], id, name)

|

||||

file_path = os.path.join(self.data_dir, "chats", self.chats['selected_chat'], message_id, name)

|

||||

image_data = self.get_content_of_file(file_path, 'image')

|

||||

if image_data: new_message['images'].append(image_data)

|

||||

if image_data:

|

||||

new_message['images'].append(image_data)

|

||||

messages.append(new_message)

|

||||

return messages

|

||||

|

||||
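
`convert_history_to_ollama` above prepends each attached file to the message text as a fenced block labelled with the file name. A rough sketch of just that string-building step follows. The helper name `inline_files` is hypothetical, and it takes already-loaded file text rather than reading from disk as the real method does.

```python
FENCE = "`" * 3  # three backticks, built indirectly to keep this example readable


def inline_files(content: str, files: dict[str, str]) -> str:
    """Prefix `content` with one fenced block per non-empty file.

    `files` maps a file name to its extracted text (an assumption for this
    sketch; the diff obtains it through get_content_of_file).
    """
    result = ""
    for name, file_data in files.items():
        if file_data:
            result += f"{FENCE}[{name}]\n{file_data}\n{FENCE}"
    return result + content


if __name__ == "__main__":
    print(inline_files("Summarize this.", {"notes.txt": "line one"}))
```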

@@ -575,14 +607,15 @@ Generate a title following these rules:

|

||||

```"""

|

||||

current_model = self.convert_model_name(self.model_drop_down.get_selected_item().get_string(), 1)

|

||||

data = {"model": current_model, "prompt": prompt, "stream": False}

|

||||

if 'images' in message: data["images"] = message['images']

|

||||

if 'images' in message:

|

||||

data["images"] = message['images']

|

||||

response = connection_handler.simple_post(f"{connection_handler.url}/api/generate", data=json.dumps(data))

|

||||

|

||||

new_chat_name = json.loads(response.text)["response"].strip().removeprefix("Title: ").removeprefix("title: ").strip('\'"').replace('\n', ' ').title().replace('\'S', '\'s')

|

||||

new_chat_name = new_chat_name[:50] + (new_chat_name[50:] and '...')

|

||||

self.rename_chat(label_element.get_name(), new_chat_name, label_element)

|

||||

|

||||

def show_message(self, msg:str, bot:bool, footer:str=None, images:list=None, files:dict=None, id:str=None):

|

||||

def show_message(self, msg:str, bot:bool, footer:str=None, images:list=None, files:dict=None, message_id:str=None):

|

||||

message_text = Gtk.TextView(

|

||||

editable=False,

|

||||

focusable=True,

|

||||
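
The title clean-up chain in `generate_chat_title` above is dense, so here it is as a standalone sketch. The function name `clean_title` is hypothetical. `str.removeprefix` requires Python 3.9 or newer.

```python
def clean_title(raw: str, limit: int = 50) -> str:
    """Normalize a model-generated chat title, mirroring the chain in the diff."""
    name = (
        raw.strip()
        .removeprefix("Title: ")
        .removeprefix("title: ")
        .strip("'\"")
        .replace("\n", " ")
        .title()
        .replace("'S", "'s")  # str.title() capitalizes the letter after an apostrophe
    )
    # The slice past `limit` is an empty string (falsy) when nothing was cut,
    # so the ellipsis is appended only to truncated titles.
    return name[:limit] + (name[limit:] and "...")


if __name__ == "__main__":
    print(clean_title('Title: "bob\'s recipes"\n'))
```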

@@ -597,7 +630,8 @@ Generate a title following these rules:

|

||||

)

|

||||

message_buffer = message_text.get_buffer()

|

||||

message_buffer.insert(message_buffer.get_end_iter(), msg)

|

||||

if footer is not None: message_buffer.insert_markup(message_buffer.get_end_iter(), footer, len(footer.encode('utf-8')))

|

||||

if footer is not None:

|

||||

message_buffer.insert_markup(message_buffer.get_end_iter(), footer, len(footer.encode('utf-8')))

|

||||

|

||||

delete_button = Gtk.Button(

|

||||

icon_name = "user-trash-symbolic",

|

||||

@@ -650,7 +684,7 @@ Generate a title following these rules:

|

||||

child=image_container

|

||||

)

|

||||

for image in images:

|

||||

path = os.path.join(self.data_dir, "chats", self.chats['selected_chat'], id, image)

|

||||

path = os.path.join(self.data_dir, "chats", self.chats['selected_chat'], message_id, image)

|

||||

try:

|

||||

if not os.path.isfile(path):

|

||||

raise FileNotFoundError("'{}' was not found or is a directory".format(path))

|

||||

@@ -659,8 +693,8 @@ Generate a title following these rules:

|

||||

button = Gtk.Button(

|

||||

child=image_element,

|

||||

css_classes=["flat", "chat_image_button"],

|

||||

name=os.path.join(self.data_dir, "chats", "{selected_chat}", id, image),

|

||||

tooltip_text=os.path.basename(path)

|

||||

name=os.path.join(self.data_dir, "chats", "{selected_chat}", message_id, image),

|

||||

tooltip_text=_("Image")

|

||||

)

|

||||

button.connect("clicked", lambda button, file_path=path: self.preview_file(file_path, 'image', None))

|

||||

except Exception as e:

|

||||

@@ -686,7 +720,7 @@ Generate a title following these rules:

|

||||

button = Gtk.Button(

|

||||

child=image_box,

|

||||

css_classes=["flat", "chat_image_button"],

|

||||

tooltip_text=_("Missing image")

|

||||

tooltip_text=_("Missing Image")

|

||||

)

|

||||

button.connect("clicked", lambda button : self.show_toast(_("Missing image"), self.main_overlay))

|

||||

image_container.append(button)

|

||||

@@ -722,19 +756,19 @@ Generate a title following these rules:

|

||||

tooltip_text=name,

|

||||

child=button_content

|

||||

)

|

||||

file_path = os.path.join(self.data_dir, "chats", "{selected_chat}", id, name)

|

||||

file_path = os.path.join(self.data_dir, "chats", "{selected_chat}", message_id, name)

|

||||

button.connect("clicked", lambda button, file_path=file_path, file_type=file_type: self.preview_file(file_path, file_type, None))

|

||||

file_container.append(button)

|

||||

message_box.append(file_scroller)

|

||||

|

||||

message_box.append(message_text)

|

||||

overlay = Gtk.Overlay(css_classes=["message"], name=id)

|

||||

overlay = Gtk.Overlay(css_classes=["message"], name=message_id)

|

||||

overlay.set_child(message_box)

|

||||

|

||||

delete_button.connect("clicked", lambda button, element=overlay: self.delete_message(element))

|

||||

copy_button.connect("clicked", lambda button, element=overlay: self.copy_message(element))

|

||||

edit_button.connect("clicked", lambda button, element=overlay, textview=message_text, button_container=button_container: self.edit_message(element, textview, button_container))

|

||||

regenerate_button.connect('clicked', lambda button, id=id, bot_message_box=message_box, bot_message_button_container=button_container : self.regenerate_message(id, bot_message_box, bot_message_button_container))

|

||||

regenerate_button.connect('clicked', lambda button, message_id=message_id, bot_message_box=message_box, bot_message_button_container=button_container : self.regenerate_message(message_id, bot_message_box, bot_message_button_container))

|

||||

button_container.append(delete_button)

|

||||

button_container.append(copy_button)

|

||||

button_container.append(regenerate_button if bot else edit_button)

|

||||

@@ -779,13 +813,12 @@ Generate a title following these rules:

|

||||

self.model_string_list.append(model_name)

|

||||

self.local_models.append(model["name"])

|

||||

#self.verify_if_image_can_be_used()

|

||||

return

|

||||

else:

|

||||

self.connection_error()

|

||||

|

||||

def save_server_config(self):

|

||||

with open(os.path.join(self.config_dir, "server.json"), "w+") as f:

|

||||

json.dump({'remote_url': self.remote_url, 'remote_bearer_token': self.remote_bearer_token, 'run_remote': self.run_remote, 'local_port': local_instance.port, 'run_on_background': self.run_on_background, 'model_tweaks': self.model_tweaks, 'ollama_overrides': local_instance.overrides, 'show_support': self.show_support}, f, indent=6)

|

||||

with open(os.path.join(self.config_dir, "server.json"), "w+", encoding="utf-8") as f:

|

||||

json.dump({'remote_url': self.remote_url, 'remote_bearer_token': self.remote_bearer_token, 'run_remote': self.run_remote, 'local_port': local_instance.port, 'run_on_background': self.run_on_background, 'model_tweaks': self.model_tweaks, 'ollama_overrides': local_instance.overrides}, f, indent=6)

|

||||

|

||||

def verify_connection(self):

|

||||

try:

|

||||

@@ -941,19 +974,21 @@ Generate a title following these rules:

|

||||