Compare commits

No commits in common. "main" and "0.4.0" have entirely different histories.

1

.github/FUNDING.yml

vendored

@@ -1,7 +1,6 @@

|

||||

# These are supported funding model platforms

|

||||

|

||||

github: jeffser # Replace with up to 4 GitHub Sponsors-enabled usernames e.g., [user1, user2]

|

||||

#ko_fi: jeffser

|

||||

#patreon: # Replace with a single Patreon username

|

||||

#open_collective: # Replace with a single Open Collective username

|

||||

#ko_fi: # Replace with a single Ko-fi username

|

||||

|

||||

22

.github/ISSUE_TEMPLATE/bug_report.md

vendored

@@ -1,22 +0,0 @@
---
name: Bug report
about: Something is wrong
title: ''
labels: bug
assignees: ''

---
<!--Please be aware that GNOME Code of Conduct applies to Alpaca, https://conduct.gnome.org/-->
**Describe the bug**
A clear and concise description of what the bug is.

**Expected behavior**
A clear and concise description of what you expected to happen.

**Screenshots**
If applicable, add screenshots to help explain your problem.

**Debugging information**
```
Please paste here the debugging information available at 'About Alpaca' > 'Troubleshooting' > 'Debugging Information'
```

20

.github/ISSUE_TEMPLATE/feature_request.md

vendored

@@ -1,20 +0,0 @@
---
name: Feature request
about: Suggest an idea for this project
title: ''
labels: enhancement
assignees: ''

---
<!--Please be aware that GNOME Code of Conduct applies to Alpaca, https://conduct.gnome.org/-->
**Is your feature request related to a problem? Please describe.**
A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

**Describe the solution you'd like**
A clear and concise description of what you want to happen.

**Describe alternatives you've considered**
A clear and concise description of any alternative solutions or features you've considered.

**Additional context**
Add any other context or screenshots about the feature request here.

18

.github/workflows/flatpak-builder.yml

vendored

@@ -1,18 +0,0 @@
# .github/workflows/flatpak-build.yml
on:
  workflow_dispatch:
name: Flatpak Build
jobs:
  flatpak:
    name: "Flatpak"
    runs-on: ubuntu-latest
    container:
      image: bilelmoussaoui/flatpak-github-actions:gnome-46
      options: --privileged
    steps:
    - uses: actions/checkout@v4
    - uses: flatpak/flatpak-github-actions/flatpak-builder@v6
      with:
        bundle: com.jeffser.Alpaca.flatpak
        manifest-path: com.jeffser.Alpaca.json
        cache-key: flatpak-builder-${{ github.sha }}

24

.github/workflows/pylint.yml

vendored

@@ -1,24 +0,0 @@
name: Pylint

on:
  workflow_dispatch:

jobs:
  build:
    runs-on: ubuntu-latest
    strategy:
      matrix:
        python-version: ["3.11"]
    steps:
    - uses: actions/checkout@v4
    - name: Set up Python ${{ matrix.python-version }}
      uses: actions/setup-python@v3
      with:
        python-version: ${{ matrix.python-version }}
    - name: Install dependencies
      run: |
        python -m pip install --upgrade pip
        pip install pylint
    - name: Analysing the code with pylint
      run: |
        pylint --rcfile=.pylintrc $(git ls-files '*.py' | grep -v 'src/available_models_descriptions.py')

14

.pylintrc

@@ -1,14 +0,0 @@
[MASTER]

[MESSAGES CONTROL]
disable=undefined-variable, line-too-long, missing-function-docstring, consider-using-f-string, import-error

[FORMAT]
max-line-length=200

# Reasons for removing some checks:
# undefined-variable: _() is used by the translator on build time but it is not defined on the scripts
# line-too-long: I... I'm too lazy to make the lines shorter, maybe later
# missing-function-docstring I'm not adding a docstring to all the functions, most are self explanatory
# consider-using-f-string I can't use f-string because of the translator
# import-error The linter doesn't have access to all the libraries that the project itself does
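The two translator-related reasons in the `.pylintrc` comments above can be illustrated with Python's standard `gettext` module. This is a minimal sketch, not Alpaca's actual setup: how the project wires up `_()` is not shown in this diff, and `greeting` is a hypothetical helper. It shows why a linter sees `_` as undefined (it is injected into builtins at runtime) and why the template must stay a plain literal rather than an f-string (the untranslated literal is the lookup key).

```python
import gettext

# Assumption: no compiled catalog is loaded here, so NullTranslations
# simply returns every message unchanged.
gettext.NullTranslations().install()  # adds _() to builtins at runtime


def greeting(name):
    # _() is never imported or defined in this module, which is what
    # pylint's undefined-variable check would complain about.
    # The literal template is the lookup key, so the placeholder is filled
    # in only after translation; an f-string would be formatted first.
    return _("Hello, %s!") % name


print(greeting("Alpaca"))
```

With a real catalog installed, only the object passed to `install()` changes; the calling code stays the same.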

34

Alpaca.doap

@@ -1,34 +0,0 @@
<Project xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
         xmlns:rdfs="http://www.w3.org/2000/01/rdf-schema#"
         xmlns:foaf="http://xmlns.com/foaf/0.1/"
         xmlns:gnome="http://api.gnome.org/doap-extensions#"
         xmlns="http://usefulinc.com/ns/doap#">

  <name xml:lang="en">Alpaca</name>
  <shortdesc xml:lang="en">An Ollama client made with GTK4 and Adwaita</shortdesc>
  <homepage rdf:resource="https://jeffser.com/alpaca" />
  <bug-database rdf:resource="https://github.com/Jeffser/Alpaca/issues"/>
  <programming-language>Python</programming-language>

  <platform>GTK 4</platform>
  <platform>Libadwaita</platform>

  <maintainer>
    <foaf:Person>
      <foaf:name>Jeffry Samuel</foaf:name>
      <foaf:mbox rdf:resource="mailto:jeffrysamuer@gmail.com"/>
      <foaf:account>
        <foaf:OnlineAccount>
          <foaf:accountServiceHomepage rdf:resource="https://github.com"/>
          <foaf:accountName>jeffser</foaf:accountName>
        </foaf:OnlineAccount>
      </foaf:account>
      <foaf:account>
        <foaf:OnlineAccount>
          <foaf:accountServiceHomepage rdf:resource="https://gitlab.gnome.org"/>
          <foaf:accountName>jeffser</foaf:accountName>
        </foaf:OnlineAccount>
      </foaf:account>
    </foaf:Person>
  </maintainer>
</Project>

@@ -1,4 +0,0 @@
Alpaca follows [GNOME's code of conduct](https://conduct.gnome.org/), please make sure to read it before interacting in any way with this repository.
To report any misconduct please reach out via private message on
- X (formally Twitter): [@jeffrysamuer](https://x.com/jeffrysamuer)
- Mastodon: [@jeffser@floss.social](https://floss.social/@jeffser)

@@ -1,30 +0,0 @@
# Contributing Rules

## Translations

If you want to translate or contribute on existing translations please read [this discussion](https://github.com/Jeffser/Alpaca/discussions/153).

## Code

1) Before contributing code make sure there's an open [issue](https://github.com/Jeffser/Alpaca/issues) for that particular problem or feature.
2) Ask to contribute on the responses to the issue.
3) Wait for [my](https://github.com/Jeffser) approval, I might have already started working on that issue.
4) Test your code before submitting a pull request.

## Q&A

### Do I need to comment my code?

There's no need to add comments if the code is easy to read by itself.

### What if I need help or I don't understand the existing code?

You can reach out on the issue, I'll try to answer as soon as possible.

### What IDE should I use?

I use Gnome Builder but you can use whatever you want.

### Can I be credited?

You might be credited on the GitHub repository in the [thanks](https://github.com/Jeffser/Alpaca/blob/main/README.md#thanks) section of the README.

116

README.md

@@ -1,108 +1,50 @@

|

||||

<p align="center"><img src="https://jeffser.com/images/alpaca/logo.svg"></p>

|

||||

<img src="https://jeffser.com/images/alpaca/logo.svg">

|

||||

|

||||

# Alpaca

|

||||

|

||||

<a href='https://flathub.org/apps/com.jeffser.Alpaca'><img width='240' alt='Download on Flathub' src='https://flathub.org/api/badge?locale=en'/></a>

|

||||

An [Ollama](https://github.com/ollama/ollama) client made with GTK4 and Adwaita.

|

||||

|

||||

|

||||

|

||||

|

||||

Alpaca is an [Ollama](https://github.com/ollama/ollama) client where you can manage and chat with multiple models, Alpaca provides an easy and begginer friendly way of interacting with local AI, everything is open source and powered by Ollama.

|

||||

|

||||

---

|

||||

|

||||

> [!WARNING]

|

||||

> This project is not affiliated at all with Ollama, I'm not responsible for any damages to your device or software caused by running code given by any AI models.

|

||||

> This project is not affiliated at all with Ollama, I'm not responsible for any damages to your device or software caused by running code given by any models.

|

||||

|

||||

> [!IMPORTANT]

|

||||

> Please be aware that [GNOME Code of Conduct](https://conduct.gnome.org) applies to Alpaca before interacting with this repository.

|

||||

> [!important]

|

||||

> This is my first GTK4 / Adwaita / Python app, so it might crash and some features are still under development, please report any errors if you can, thank you!

|

||||

|

||||

## Features!

|

||||

|

||||

- Talk to multiple models in the same conversation

|

||||

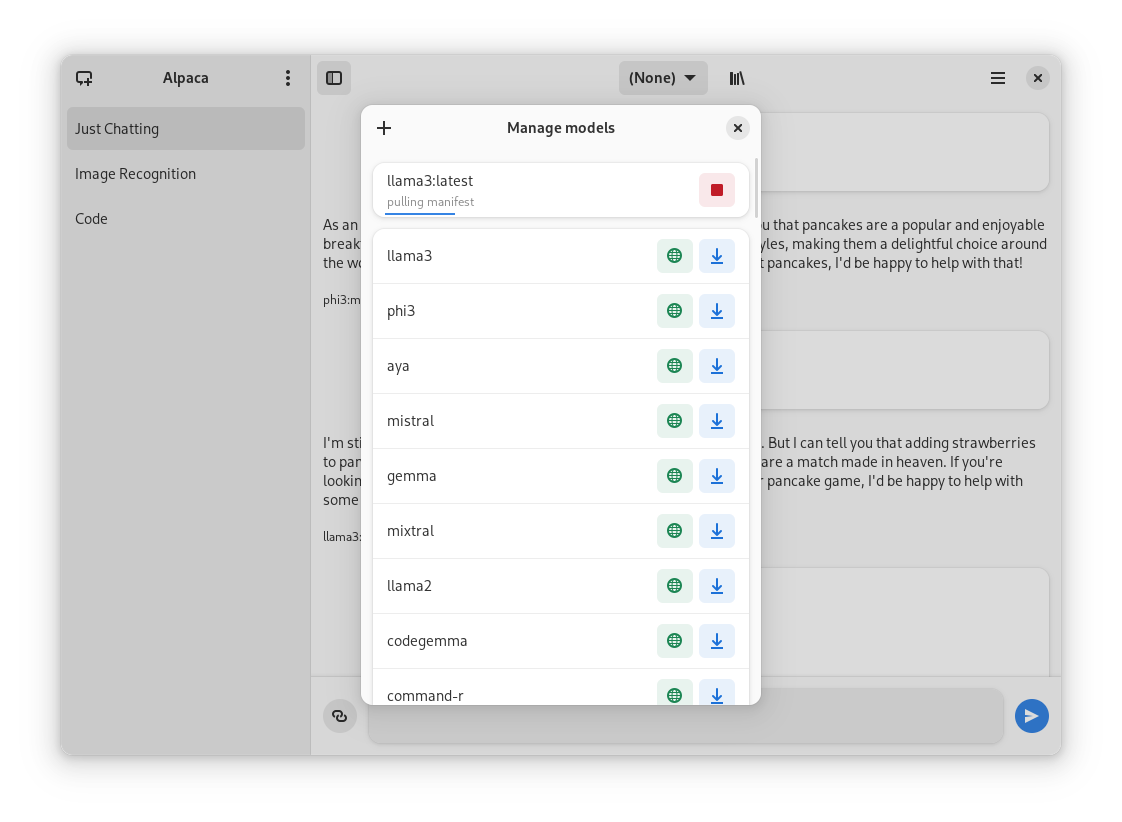

- Pull and delete models from the app

|

||||

- Image recognition

|

||||

- Document recognition (plain text files)

|

||||

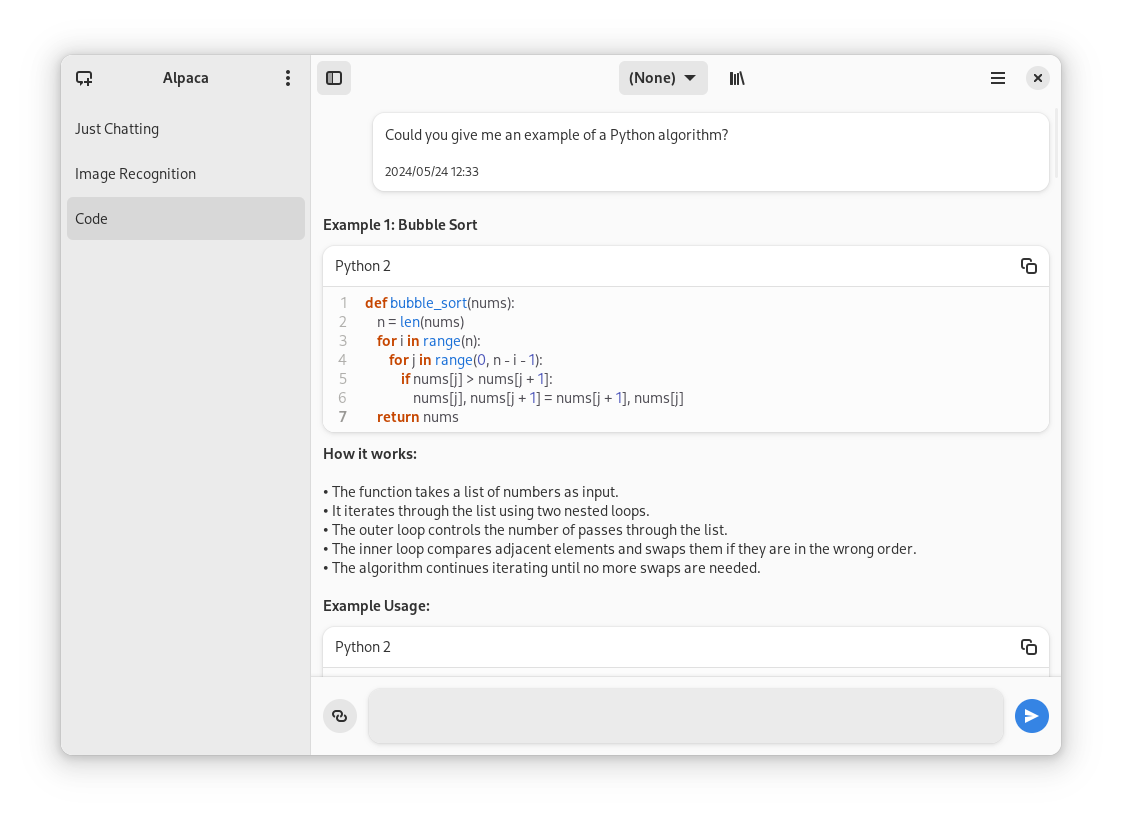

- Code highlighting

|

||||

|

||||

## Future features!

|

||||

- Multiple conversations

|

||||

- Image / document recognition

|

||||

- Notifications

|

||||

- Import / Export chats

|

||||

- Delete / Edit messages

|

||||

- Regenerate messages

|

||||

- YouTube recognition (Ask questions about a YouTube video using the transcript)

|

||||

- Website recognition (Ask questions about a certain website by parsing the url)

|

||||

- Code highlighting

|

||||

|

||||

## Screenies

|

||||

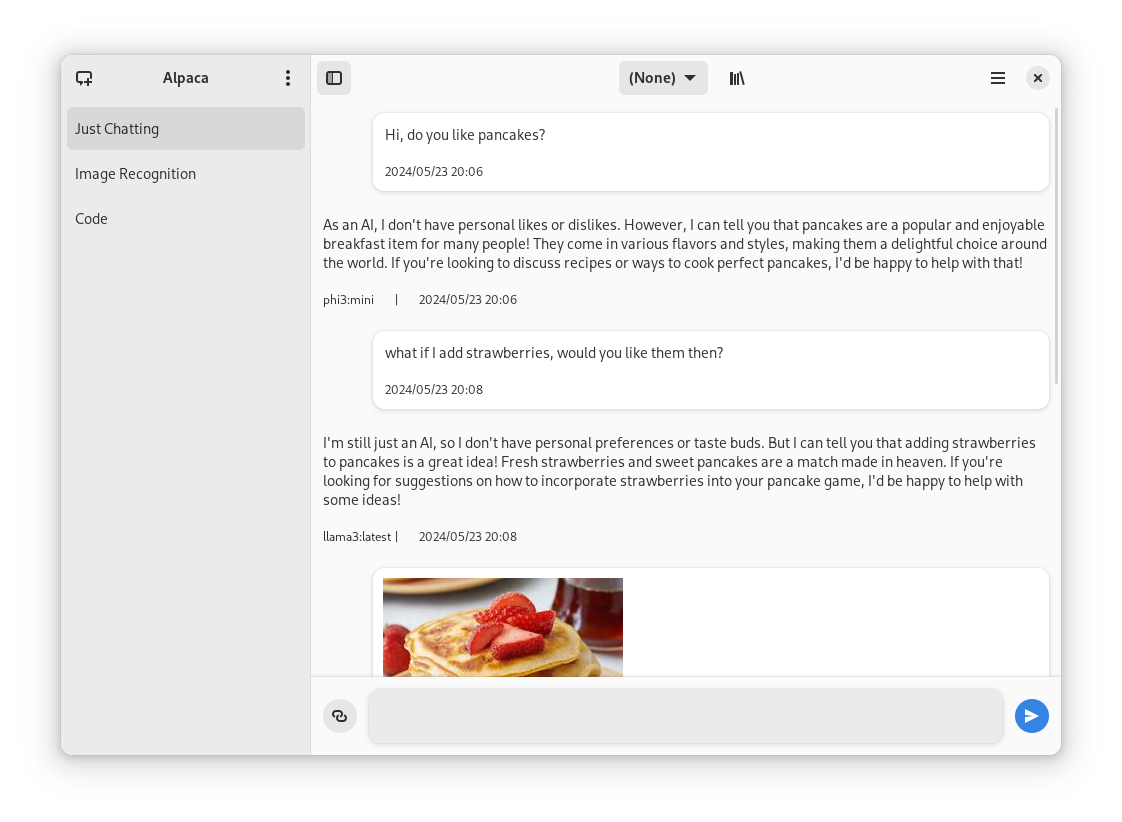

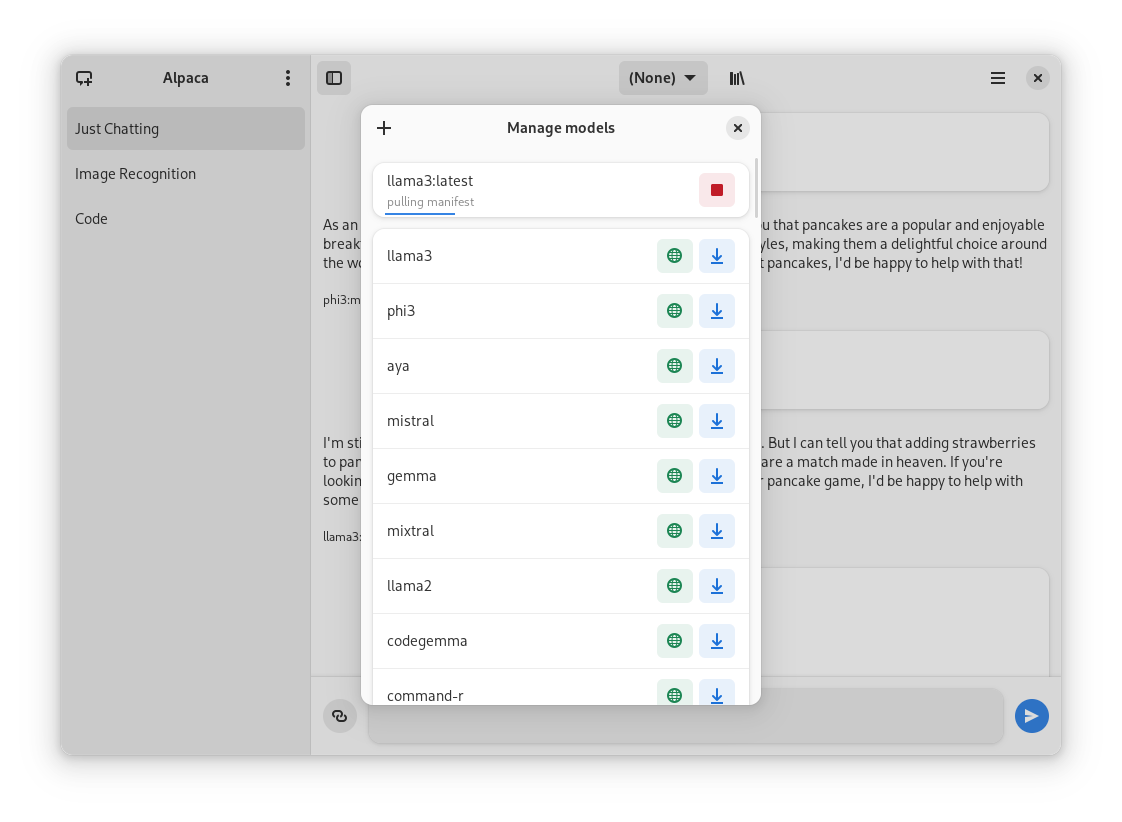

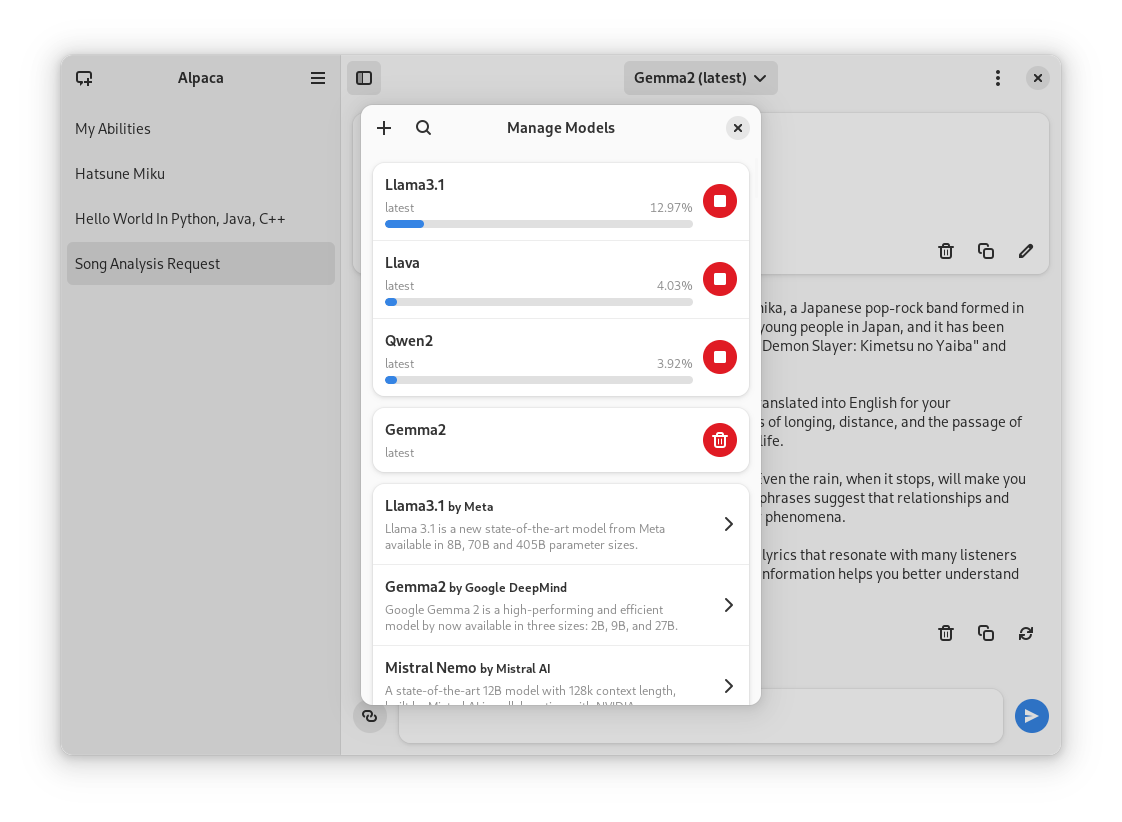

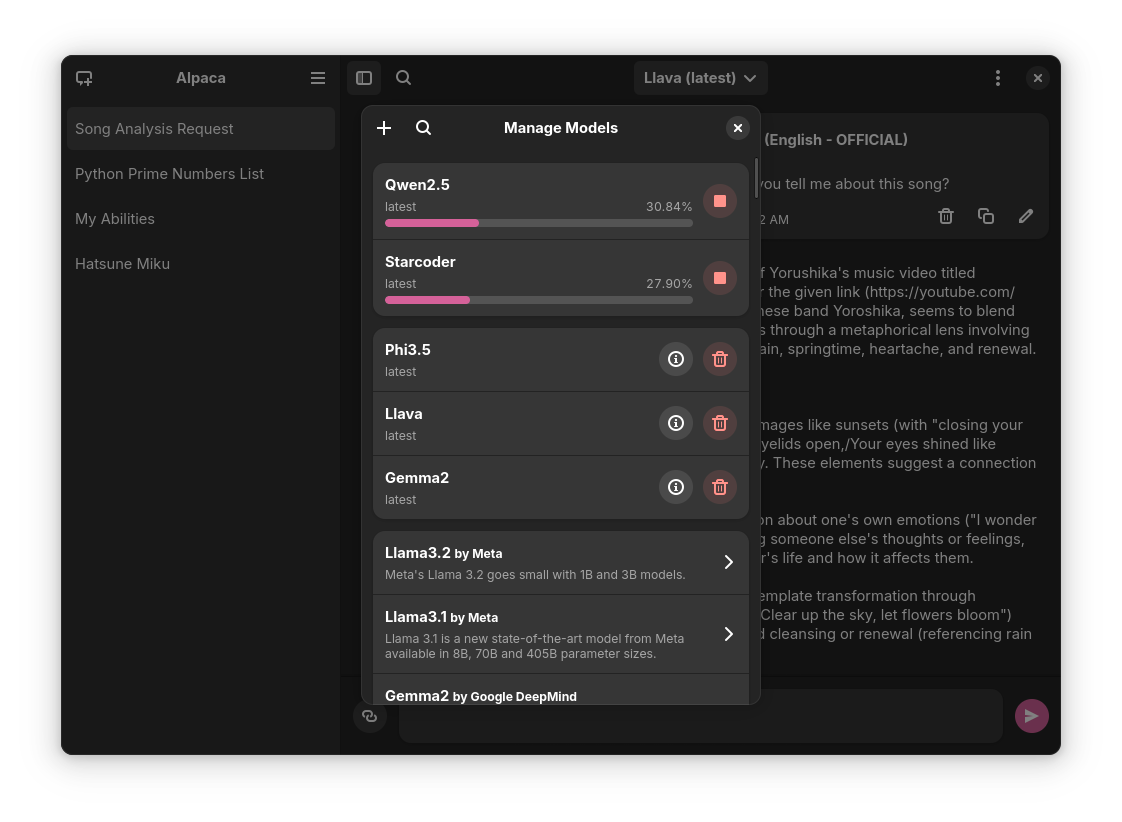

Login to Ollama instance | Chatting with models | Managing models

|

||||

:-------------------------:|:-------------------------:|:-------------------------:

|

||||

|  |

|

||||

|

||||

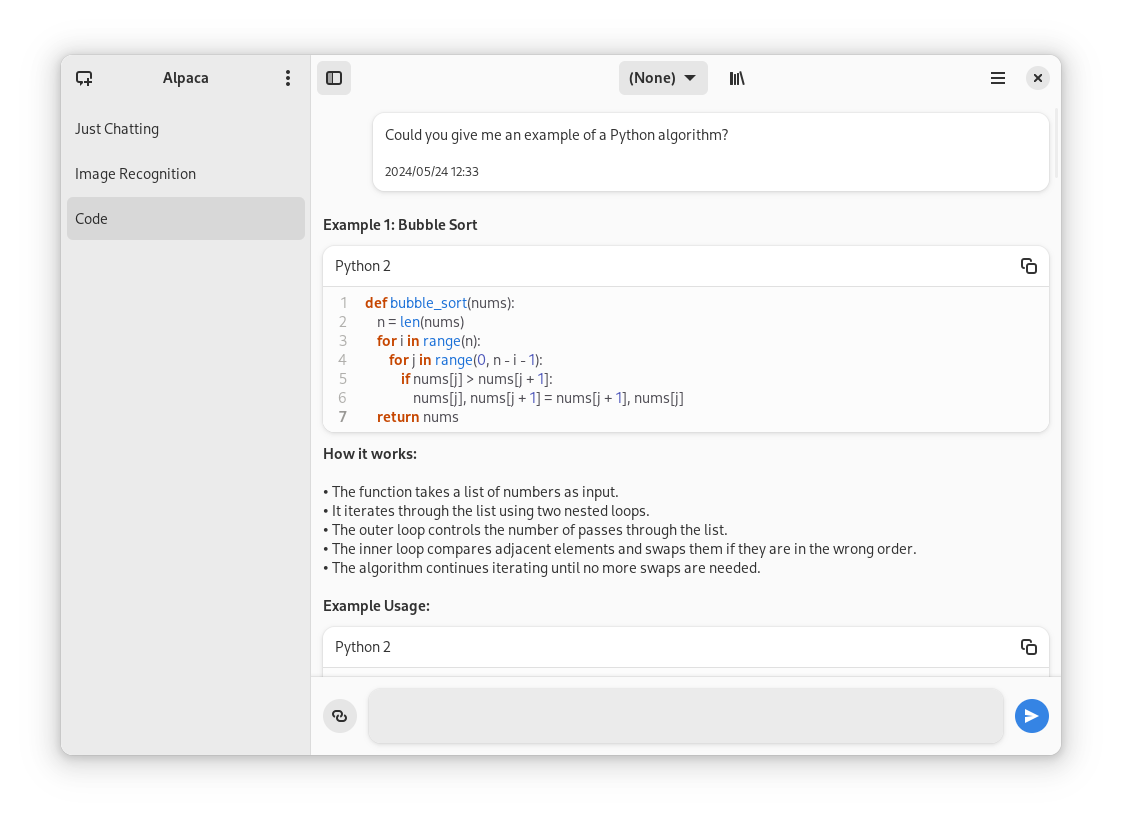

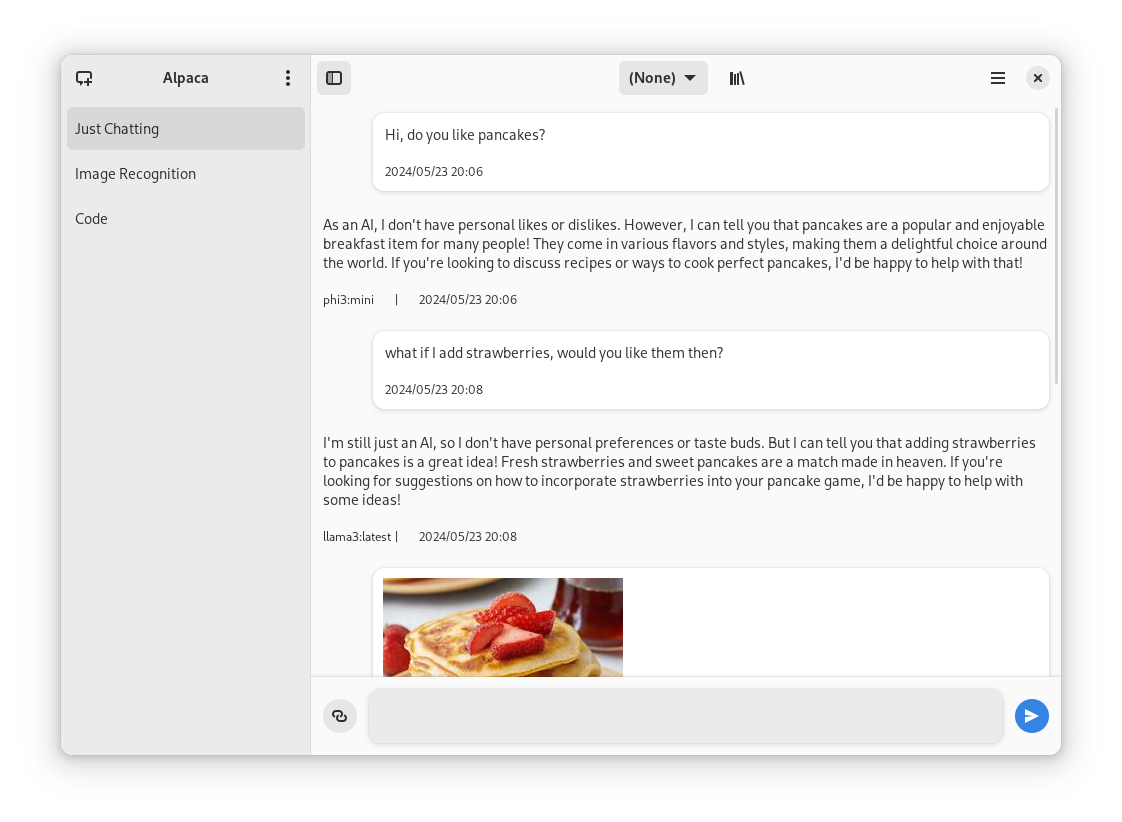

Normal conversation | Image recognition | Code highlighting | YouTube transcription | Model management

|

||||

:------------------:|:-----------------:|:-----------------:|:---------------------:|:----------------:

|

||||

|  |  |  |

|

||||

## Preview

|

||||

1. Clone repo using Gnome Builder

|

||||

2. Press the `run` button

|

||||

|

||||

## Installation

|

||||

## Instalation

|

||||

1. Go to the `releases` page

|

||||

2. Download the latest flatpak package

|

||||

3. Open it

|

||||

|

||||

### Flathub

|

||||

## Usage

|

||||

- You'll need an Ollama instance, I recommend using the [Docker image](https://ollama.com/blog/ollama-is-now-available-as-an-official-docker-image)

|

||||

- Once you open Alpaca it will ask you for a url, if you are using the same computer as the Ollama instance and didn't change the ports you can use the default url.

|

||||

- You might need a model, you can get one using the box icon at the top of the app, I recommend using phi3 because it is very lightweight but you can use whatever you want (I haven't actually tested all so your mileage may vary).

|

||||

- Then just start talking! you can mix different models, they all share the same conversation, it's really cool in my opinion.

|

||||

|

||||

You can find the latest stable version of the app on [Flathub](https://flathub.org/apps/com.jeffser.Alpaca)

|

||||

|

||||

### Flatpak Package

|

||||

|

||||

Everytime a new version is published they become available on the [releases page](https://github.com/Jeffser/Alpaca/releases) of the repository

|

||||

|

||||

### Snap Package

|

||||

|

||||

You can also find the Snap package on the [releases page](https://github.com/Jeffser/Alpaca/releases), to install it run this command:

|

||||

```BASH

|

||||

sudo snap install ./{package name} --dangerous

|

||||

```

|

||||

The `--dangerous` comes from the package being installed without any involvement of the SnapStore, I'm working on getting the app there, but for now you can test the app this way.

|

||||

|

||||

### Building Git Version

|

||||

|

||||

Note: This is not recommended since the prerelease versions of the app often present errors and general instability.

|

||||

|

||||

1. Clone the project

|

||||

2. Open with Gnome Builder

|

||||

3. Press the run button (or export if you want to build a Flatpak package)

|

||||

|

||||

## Translators

|

||||

|

||||

Language | Contributors

|

||||

:----------------------|:-----------

|

||||

🇷🇺 Russian | [Alex K](https://github.com/alexkdeveloper)

|

||||

🇪🇸 Spanish | [Jeffry Samuel](https://github.com/jeffser)

|

||||

🇫🇷 French | [Louis Chauvet-Villaret](https://github.com/loulou64490) , [Théo FORTIN](https://github.com/topiga)

|

||||

🇧🇷 Brazilian Portuguese | [Daimar Stein](https://github.com/not-a-dev-stein) , [Bruno Antunes](https://github.com/antun3s)

|

||||

🇳🇴 Norwegian | [CounterFlow64](https://github.com/CounterFlow64)

|

||||

🇮🇳 Bengali | [Aritra Saha](https://github.com/olumolu)

|

||||

🇨🇳 Simplified Chinese | [Yuehao Sui](https://github.com/8ar10der) , [Aleksana](https://github.com/Aleksanaa)

|

||||

🇮🇳 Hindi | [Aritra Saha](https://github.com/olumolu)

|

||||

🇹🇷 Turkish | [YusaBecerikli](https://github.com/YusaBecerikli)

|

||||

🇺🇦 Ukrainian | [Simon](https://github.com/OriginalSimon)

|

||||

🇩🇪 German | [Marcel Margenberg](https://github.com/MehrzweckMandala)

|

||||

🇮🇱 Hebrew | [Yosef Or Boczko](https://github.com/yoseforb)

|

||||

🇮🇳 Telugu | [Aryan Karamtoth](https://github.com/SpaciousCoder78)

|

||||

|

||||

Want to add a language? Visit [this discussion](https://github.com/Jeffser/Alpaca/discussions/153) to get started!

|

||||

|

||||

---

|

||||

|

||||

## Thanks

|

||||

|

||||

- [not-a-dev-stein](https://github.com/not-a-dev-stein) for their help with requesting a new icon and bug reports

|

||||

- [TylerLaBree](https://github.com/TylerLaBree) for their requests and ideas

|

||||

- [Imbev](https://github.com/imbev) for their reports and suggestions

|

||||

- [Nokse](https://github.com/Nokse22) for their contributions to the UI and table rendering

|

||||

- [Louis Chauvet-Villaret](https://github.com/loulou64490) for their suggestions

|

||||

- [Aleksana](https://github.com/Aleksanaa) for her help with better handling of directories

|

||||

- [Gnome Builder Team](https://gitlab.gnome.org/GNOME/gnome-builder) for the awesome IDE I use to develop Alpaca

|

||||

- Sponsors for giving me enough money to be able to take a ride to my campus every time I need to <3

|

||||

- Everyone that has shared kind words of encouragement!

|

||||

|

||||

---

|

||||

|

||||

## Dependencies

|

||||

|

||||

- [Requests](https://github.com/psf/requests)

|

||||

- [Pillow](https://github.com/python-pillow/Pillow)

|

||||

- [Pypdf](https://github.com/py-pdf/pypdf)

|

||||

- [Pytube](https://github.com/pytube/pytube)

|

||||

- [Html2Text](https://github.com/aaronsw/html2text)

|

||||

- [Ollama](https://github.com/ollama/ollama)

|

||||

- [Numactl](https://github.com/numactl/numactl)

|

||||

## About forks

|

||||

If you want to fork this... I mean, I think it would be better if you start from scratch, my code isn't well documented at all, but if you really want to, please give me some credit, that's all I ask for... And maybe a donation (joke)

|

||||

|

||||

12

SECURITY.md

@@ -1,12 +0,0 @@
# Security Policy

## Supported Packaging

Alpaca only supports [Flatpak](https://flatpak.org/) packaging officially, any other packaging methods might not behave as expected.

## Official Versions

The only ways Alpaca is being distributed officially are:

- [Alpaca's GitHub Repository Releases Page](https://github.com/Jeffser/Alpaca/releases)
- [Flathub](https://flathub.org/apps/com.jeffser.Alpaca)

@@ -1,28 +1,16 @@

|

||||

{

|

||||

"id" : "com.jeffser.Alpaca",

|

||||

"runtime" : "org.gnome.Platform",

|

||||

"runtime-version" : "47",

|

||||

"runtime-version" : "46",

|

||||

"sdk" : "org.gnome.Sdk",

|

||||

"command" : "alpaca",

|

||||

"finish-args" : [

|

||||

"--share=network",

|

||||

"--share=ipc",

|

||||

"--socket=fallback-x11",

|

||||

"--device=all",

|

||||

"--socket=wayland",

|

||||

"--filesystem=/sys/module/amdgpu:ro",

|

||||

"--env=LD_LIBRARY_PATH=/app/lib:/usr/lib/x86_64-linux-gnu/GL/default/lib:/usr/lib/x86_64-linux-gnu/openh264/extra:/usr/lib/x86_64-linux-gnu/openh264/extra:/usr/lib/sdk/llvm15/lib:/usr/lib/x86_64-linux-gnu/GL/default/lib:/usr/lib/ollama:/app/plugins/AMD/lib/ollama",

|

||||

"--env=GSK_RENDERER=ngl"

|

||||

"--device=dri",

|

||||

"--socket=wayland"

|

||||

],

|

||||

"add-extensions": {

|

||||

"com.jeffser.Alpaca.Plugins": {

|

||||

"add-ld-path": "/app/plugins/AMD/lib/ollama",

|

||||

"directory": "plugins",

|

||||

"no-autodownload": true,

|

||||

"autodelete": true,

|

||||

"subdirectories": true

|

||||

}

|

||||

},

|

||||

"cleanup" : [

|

||||

"/include",

|

||||

"/lib/pkgconfig",

|

||||

@@ -83,141 +71,6 @@

|

||||

}

|

||||

]

|

||||

},

|

||||

{

|

||||

"name": "python3-pypdf",

|

||||

"buildsystem": "simple",

|

||||

"build-commands": [

|

||||

"pip3 install --verbose --exists-action=i --no-index --find-links=\"file://${PWD}\" --prefix=${FLATPAK_DEST} \"pypdf\" --no-build-isolation"

|

||||

],

|

||||

"sources": [

|

||||

{

|

||||

"type": "file",

|

||||

"url": "https://files.pythonhosted.org/packages/c9/d1/450b19bbdbb2c802f554312c62ce2a2c0d8744fe14735bc70ad2803578c7/pypdf-4.2.0-py3-none-any.whl",

|

||||

"sha256": "dc035581664e0ad717e3492acebc1a5fc23dba759e788e3d4a9fc9b1a32e72c1"

|

||||

}

|

||||

]

|

||||

},

|

||||

{

|

||||

"name": "python3-pytube",

|

||||

"buildsystem": "simple",

|

||||

"build-commands": [

|

||||

"pip3 install --verbose --exists-action=i --no-index --find-links=\"file://${PWD}\" --prefix=${FLATPAK_DEST} \"pytube\" --no-build-isolation"

|

||||

],

|

||||

"sources": [

|

||||

{

|

||||

"type": "file",

|

||||

"url": "https://files.pythonhosted.org/packages/51/64/bcf8632ed2b7a36bbf84a0544885ffa1d0b4bcf25cc0903dba66ec5fdad9/pytube-15.0.0-py3-none-any.whl",

|

||||

"sha256": "07b9904749e213485780d7eb606e5e5b8e4341aa4dccf699160876da00e12d78"

|

||||

}

|

||||

]

|

||||

},

|

||||

{

|

||||

"name": "python3-youtube-transcript-api",

|

||||

"buildsystem": "simple",

|

||||

"build-commands": [

|

||||

"pip3 install --verbose --exists-action=i --no-index --find-links=\"file://${PWD}\" --prefix=${FLATPAK_DEST} \"youtube-transcript-api\" --no-build-isolation"

|

||||

],

|

||||

"sources": [

|

||||

{

|

||||

"type": "file",

|

||||

"url": "https://files.pythonhosted.org/packages/12/90/3c9ff0512038035f59d279fddeb79f5f1eccd8859f06d6163c58798b9487/certifi-2024.8.30-py3-none-any.whl",

|

||||

"sha256": "922820b53db7a7257ffbda3f597266d435245903d80737e34f8a45ff3e3230d8"

|

||||

},

|

||||

{

|

||||

"type": "file",

|

||||

"url": "https://files.pythonhosted.org/packages/f2/4f/e1808dc01273379acc506d18f1504eb2d299bd4131743b9fc54d7be4df1e/charset_normalizer-3.4.0.tar.gz",

|

||||

"sha256": "223217c3d4f82c3ac5e29032b3f1c2eb0fb591b72161f86d93f5719079dae93e"

|

||||

},

|

||||

{

|

||||

"type": "file",

|

||||

"url": "https://files.pythonhosted.org/packages/76/c6/c88e154df9c4e1a2a66ccf0005a88dfb2650c1dffb6f5ce603dfbd452ce3/idna-3.10-py3-none-any.whl",

|

||||

"sha256": "946d195a0d259cbba61165e88e65941f16e9b36ea6ddb97f00452bae8b1287d3"

|

||||

},

|

||||

{

|

||||

"type": "file",

|

||||

"url": "https://files.pythonhosted.org/packages/f9/9b/335f9764261e915ed497fcdeb11df5dfd6f7bf257d4a6a2a686d80da4d54/requests-2.32.3-py3-none-any.whl",

|

||||

"sha256": "70761cfe03c773ceb22aa2f671b4757976145175cdfca038c02654d061d6dcc6"

|

||||

},

|

||||

{

|

||||

"type": "file",

|

||||

"url": "https://files.pythonhosted.org/packages/ce/d9/5f4c13cecde62396b0d3fe530a50ccea91e7dfc1ccf0e09c228841bb5ba8/urllib3-2.2.3-py3-none-any.whl",

|

||||

"sha256": "ca899ca043dcb1bafa3e262d73aa25c465bfb49e0bd9dd5d59f1d0acba2f8fac"

|

||||

},

|

||||

{

|

||||

"type": "file",

|

||||

"url": "https://files.pythonhosted.org/packages/52/42/5f57d37d56bdb09722f226ed81cc1bec63942da745aa27266b16b0e16a5d/youtube_transcript_api-0.6.2-py3-none-any.whl",

|

||||

"sha256": "019dbf265c6a68a0591c513fff25ed5a116ce6525832aefdfb34d4df5567121c"

|

||||

}

|

||||

]

|

||||

},

|

||||

{

|

||||

"name": "python3-html2text",

|

||||

"buildsystem": "simple",

|

||||

"build-commands": [

|

||||

"pip3 install --verbose --exists-action=i --no-index --find-links=\"file://${PWD}\" --prefix=${FLATPAK_DEST} \"html2text\" --no-build-isolation"

|

||||

],

|

||||

"sources": [

|

||||

{

|

||||

"type": "file",

|

||||

"url": "https://files.pythonhosted.org/packages/1a/43/e1d53588561e533212117750ee79ad0ba02a41f52a08c1df3396bd466c05/html2text-2024.2.26.tar.gz",

|

||||

"sha256": "05f8e367d15aaabc96415376776cdd11afd5127a77fce6e36afc60c563ca2c32"

|

||||

}

|

||||

]

|

||||

},

|

||||

{

|

||||

"name": "ollama",

|

||||

"buildsystem": "simple",

|

||||

"build-commands": [

|

||||

"cp -r --remove-destination * ${FLATPAK_DEST}/",

|

||||

"mkdir ${FLATPAK_DEST}/plugins"

|

||||

],

|

||||

"sources": [

|

||||

{

|

||||

"type": "archive",

|

||||

"url": "https://github.com/ollama/ollama/releases/download/v0.3.12/ollama-linux-amd64.tgz",

|

||||

"sha256": "f0efa42f7ad77cd156bd48c40cd22109473801e5113173b0ad04f094a4ef522b",

|

||||

"only-arches": [

|

||||

"x86_64"

|

||||

]

|

||||

},

|

||||

{

|

||||

"type": "archive",

|

||||

"url": "https://github.com/ollama/ollama/releases/download/v0.3.12/ollama-linux-arm64.tgz",

|

||||

"sha256": "da631cbe4dd2c168dae58d6868b1ff60e881e050f2d07578f2f736e689fec04c",

|

||||

"only-arches": [

|

||||

"aarch64"

|

||||

]

|

||||

}

|

||||

]

|

||||

},

|

||||

{

|

||||

"name": "libnuma",

|

||||

"buildsystem": "autotools",

|

||||

"build-commands": [

|

||||

"autoreconf -i",

|

||||

"make",

|

||||

"make install"

|

||||

],

|

||||

"sources": [

|

||||

{

|

||||

"type": "archive",

|

||||

"url": "https://github.com/numactl/numactl/releases/download/v2.0.18/numactl-2.0.18.tar.gz",

|

||||

"sha256": "b4fc0956317680579992d7815bc43d0538960dc73aa1dd8ca7e3806e30bc1274"

|

||||

}

|

||||

]

|

||||

},

|

||||

{

|

||||

"name": "vte",

|

||||

"buildsystem": "meson",

|

||||

"config-opts": ["-Dvapi=false"],

|

||||

"sources": [

|

||||

{

|

||||

"type": "archive",

|

||||

"url": "https://gitlab.gnome.org/GNOME/vte/-/archive/0.78.0/vte-0.78.0.tar.gz",

|

||||

"sha256": "82e19d11780fed4b66400f000829ce5ca113efbbfb7975815f26ed93e4c05f2d"

|

||||

}

|

||||

]

|

||||

},

|

||||

{

|

||||

"name" : "alpaca",

|

||||

"builddir" : true,

|

||||

@@ -225,8 +78,7 @@

|

||||

"sources" : [

|

||||

{

|

||||

"type" : "git",

|

||||

"url": "https://github.com/Jeffser/Alpaca.git",

|

||||

"branch" : "main"

|

||||

"url" : "file:///home/tentri/Documents/Alpaca"

|

||||

}

|

||||

]

|

||||

}

|

||||

|

||||
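Each `"type": "file"` and `"type": "archive"` source in the manifest above pairs a URL with a `sha256` value, so the downloaded file can be checked against a known digest before it is used. The following is a minimal sketch of such a check using Python's standard `hashlib`. It is not flatpak-builder's own code, and `sha256_matches` is a hypothetical helper name.

```python
import hashlib
import os
import tempfile


def sha256_matches(path, expected_hex, chunk_size=65536):
    """Return True if the file at `path` hashes to `expected_hex`."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read in chunks so large archives are not loaded into memory at once.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected_hex.strip().lower()


# Demo on a throwaway file; a real check would point at a downloaded source
# and use the "sha256" value from the manifest entry.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"abc")
print(sha256_matches(tmp.name, hashlib.sha256(b"abc").hexdigest()))
os.remove(tmp.name)
```

Reading in fixed-size chunks keeps memory use flat for large files such as the Ollama release archives listed in the manifest.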

@@ -5,6 +5,4 @@ Icon=com.jeffser.Alpaca

|

||||

Terminal=false

|

||||

Type=Application

|

||||

Categories=Utility;Development;Chat;

|

||||

Keywords=ai;ollama;llm

|

||||

StartupNotify=true

|

||||

X-Purism-FormFactor=Workstation;Mobile;

|

||||

|

||||

@@ -5,21 +5,14 @@

|

||||

<project_license>GPL-3.0-or-later</project_license>

|

||||

<launchable type="desktop-id">com.jeffser.Alpaca.desktop</launchable>

|

||||

<name>Alpaca</name>

|

||||

<summary>Chat with local AI models</summary>

|

||||

<summary>An Ollama client</summary>

|

||||

<description>

|

||||

<p>Chat with multiple AI models</p>

|

||||

<p>An Ollama client</p>

|

||||

<p>Features</p>

|

||||

<ul>

|

||||

<li>Built in Ollama instance</li>

|

||||

<li>Talk to multiple models in the same conversation</li>

|

||||

<li>Pull and delete models from the app</li>

|

||||

<li>Have multiple conversations</li>

|

||||

<li>Image recognition (Only available with compatible models)</li>

|

||||

<li>Plain text documents recognition</li>

|

||||

<li>Import and export chats</li>

|

||||

<li>Append YouTube transcripts to the prompt</li>

|

||||

<li>Append text from a website to the prompt</li>

|

||||

<li>PDF recognition</li>

|

||||

</ul>

|

||||

<p>Disclaimer</p>

|

||||

<p>This project is not affiliated at all with Ollama, I'm not responsible for any damages to your device or software caused by running code given by any models.</p>

|

||||

@@ -36,14 +29,6 @@

|

||||

<category>Development</category>

|

||||

<category>Chat</category>

|

||||

</categories>

|

||||

<requires>

|

||||

<display_length compare="ge">360</display_length>

|

||||

</requires>

|

||||

<recommends>

|

||||

<control>keyboard</control>

|

||||

<control>pointing</control>

|

||||

<control>touch</control>

|

||||

</recommends>

|

||||

<branding>

|

||||

<color type="primary" scheme_preference="light">#8cdef5</color>

|

||||

<color type="primary" scheme_preference="dark">#0f2b78</color>

|

||||

@@ -51,689 +36,22 @@

|

||||

<screenshots>

|

||||

<screenshot type="default">

|

||||

<image>https://jeffser.com/images/alpaca/screenie1.png</image>

|

||||

<caption>A normal conversation with an AI Model</caption>

|

||||

<caption>Welcome dialog</caption>

|

||||

</screenshot>

|

||||

<screenshot>

|

||||

<image>https://jeffser.com/images/alpaca/screenie2.png</image>

|

||||

<caption>A conversation involving image recognition</caption>

|

||||

<caption>A conversation involving multiple models</caption>

|

||||

</screenshot>

|

||||

<screenshot>

|

||||

<image>https://jeffser.com/images/alpaca/screenie3.png</image>

|

||||

<caption>A conversation showing code highlighting</caption>

|

||||

</screenshot>

|

||||

<screenshot>

|

||||

<image>https://jeffser.com/images/alpaca/screenie4.png</image>

|

||||

<caption>A Python script running inside integrated terminal</caption>

|

||||

</screenshot>

|

||||

<screenshot>

|

||||

<image>https://jeffser.com/images/alpaca/screenie5.png</image>

|

||||

<caption>A conversation involving a YouTube video transcript</caption>

|

||||

</screenshot>

|

||||

<screenshot>

|

||||

<image>https://jeffser.com/images/alpaca/screenie6.png</image>

|

||||

<caption>Multiple models being downloaded</caption>

|

||||

<caption>Managing models</caption>

|

||||

</screenshot>

|

||||

</screenshots>

|

||||

<content_rating type="oars-1.1" />

|

||||

<url type="bugtracker">https://github.com/Jeffser/Alpaca/issues</url>

|

||||

<url type="homepage">https://jeffser.com/alpaca/</url>

|

||||

<url type="homepage">https://github.com/Jeffser/Alpaca</url>

|

||||

<url type="donation">https://github.com/sponsors/Jeffser</url>

|

||||

<url type="translate">https://github.com/Jeffser/Alpaca/discussions/153</url>

|

||||

<url type="contribute">https://github.com/Jeffser/Alpaca/discussions/154</url>

|

||||

<url type="vcs-browser">https://github.com/Jeffser/Alpaca</url>

|

||||

<releases>

|

||||

<release version="2.7.0" date="2024-10-15">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.7.0</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>User messages are now compacted into bubbles</li>

|

||||

</ul>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Fixed re connection dialog not working when 'use local instance' is selected</li>

|

||||

<li>Fixed model manager not adapting to large system fonts</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="2.6.5" date="2024-10-13">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.6.5</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Details page for models</li>

|

||||

<li>Model selector gets replaced with 'manage models' button when there are no models downloaded</li>

|

||||

<li>Added warning when model is too big for the device</li>

|

||||

<li>Added AMD GPU indicator in preferences</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="2.6.0" date="2024-10-11">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.6.0</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Better system for handling dialogs</li>

|

||||

<li>Better system for handling instance switching</li>

|

||||

<li>Remote connection dialog</li>

|

||||

</ul>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Fixed: Models get duplicated when switching remote and local instance</li>

|

||||

<li>Better internal instance manager</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="2.5.1" date="2024-10-09">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.5.1</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Added 'Cancel' and 'Save' buttons when editing a message</li>

|

||||

</ul>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Better handling of image recognition</li>

|

||||

<li>Remove unused files when canceling a model download</li>

|

||||

<li>Better message blocks rendering</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="2.5.0" date="2024-10-06">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.5.0</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Run bash and python scripts straight from chat</li>

|

||||

<li>Updated Ollama to 0.3.12</li>

|

||||

<li>New models!</li>

|

||||

</ul>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Fixed and made faster the launch sequence</li>

|

||||

<li>Better detection of code blocks in messages</li>

|

||||

<li>Fixed app not loading in certain setups with Nvidia GPUs</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="2.0.6" date="2024-09-29">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.0.6</url>

|

||||

<description>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Fixed message notification sometimes crashing text rendering because of them running on different threads</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="2.0.5" date="2024-09-25">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.0.5</url>

|

||||

<description>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Fixed message generation sometimes failing</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="2.0.4" date="2024-09-22">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.0.4</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Sidebar resizes with the window</li>

|

||||

<li>New welcome dialog</li>

|

||||

<li>Message search</li>

|

||||

<li>Updated Ollama to v0.3.11</li>

|

||||

<li>Many new models provided by the Ollama repository</li>

|

||||

</ul>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Fixed text inside model manager when the accessibility option 'large text' is on</li>

|

||||

<li>Fixed image recognition on unsupported models</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="2.0.3" date="2024-09-18">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.0.3</url>

|

||||

<description>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Fixed spinner not hiding if the back end fails</li>

|

||||

<li>Fixed image recognition with local images</li>

|

||||

<li>Changed appearance of delete / stop model buttons</li>

|

||||

<li>Fixed stop button crashing the app</li>

|

||||

</ul>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Made sidebar resize a little when the window is smaller</li>

|

||||

<li>Instant launch</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="2.0.2" date="2024-09-11">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.0.2</url>

|

||||

<description>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Fixed error on first run (welcome dialog)</li>

|

||||

<li>Fixed checker for Ollama instance (used on system packages)</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="2.0.1" date="2024-09-11">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.0.1</url>

|

||||

<description>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Fixed 'clear chat' option</li>

|

||||

<li>Fixed welcome dialog causing the local instance to not launch</li>

|

||||

<li>Fixed support for AMD GPUs</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="2.0.0" date="2024-09-01">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.0.0</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Model, message and chat systems have been rewritten</li>

|

||||

<li>New models are available</li>

|

||||

<li>Ollama updated to v0.3.9</li>

|

||||

<li>Added support for multiple chat generations simultaneously</li>

|

||||

<li>Added experimental AMD GPU support</li>

|

||||

<li>Added message loading spinner and new message indicator to chat tab</li>

|

||||

<li>Added animations</li>

|

||||

<li>Changed model manager / model selector appearance</li>

|

||||

<li>Changed message appearance</li>

|

||||

<li>Added markdown and code blocks to user messages</li>

|

||||

<li>Added loading dialog at launch so the app opens faster</li>

|

||||

<li>Added warning when the device is in 'battery saver' mode</li>

|

||||

<li>Added inactivity timer to integrated instance</li>

|

||||

</ul>

|

||||

<ul>

|

||||

<li>The chat is now scrolled to the bottom when it's changed</li>

|

||||

<li>Better handling of focus on messages</li>

|

||||

<li>Better general performance of the app</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="1.1.1" date="2024-08-12">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.1.1</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>New duplicate chat option</li>

|

||||

<li>Changed model selector appearance</li>

|

||||

<li>Message entry is focused on launch and chat change</li>

|

||||

<li>Message is focused when it's being edited</li>

|

||||

<li>Added loading spinner when regenerating a message</li>

|

||||

<li>Added Ollama debugging to 'About Alpaca' dialog</li>

|

||||

<li>Changed YouTube transcription dialog appearance and behavior</li>

|

||||

</ul>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>CTRL+W and CTRL+Q stop the local instance before closing the app</li>

|

||||

<li>Changed appearance of 'Open Model Manager' button on welcome screen</li>

|

||||

<li>Fixed message generation not working consistently</li>

|

||||

<li>Fixed message editing not working consistently</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="1.1.0" date="2024-08-10">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.1.0</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Model manager opens faster</li>

|

||||

<li>Delete chat option in secondary menu</li>

|

||||

<li>New model selector popup</li>

|

||||

<li>Standard shortcuts</li>

|

||||

<li>Model manager is navigable with keyboard</li>

|

||||

<li>Changed sidebar collapsing behavior</li>

|

||||

<li>Focus indicators on messages</li>

|

||||

<li>Welcome screen</li>

|

||||

<li>Give message entry focus at launch</li>

|

||||

<li>Generally better code</li>

|

||||

</ul>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Better width for dialogs</li>

|

||||

<li>Better compatibility with screen readers</li>

|

||||

<li>Fixed message regenerator</li>

|

||||

<li>Removed 'Featured models' from welcome dialog</li>

|

||||

<li>Added default buttons to dialogs</li>

|

||||

<li>Fixed import / export of chats</li>

|

||||

<li>Changed Python2 title to Python on code blocks</li>

|

||||

<li>Prevent regeneration of title when the user changed it to a custom title</li>

|

||||

<li>Show date on stopped messages</li>

|

||||

<li>Fix clear chat error</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="1.0.6" date="2024-08-04">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.0.6</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Changed shortcuts to standards</li>

|

||||

<li>Moved 'Manage Models' button to primary menu</li>

|

||||

<li>Stable support for GGUF model files</li>

|

||||

<li>General optimizations</li>

|

||||

</ul>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Better handling of enter key (important for Japanese input)</li>

|

||||

<li>Removed sponsor dialog</li>

|

||||

<li>Added sponsor link in about dialog</li>

|

||||

<li>Changed window and elements dimensions</li>

|

||||

<li>Selected model changes when entering model manager</li>

|

||||

<li>Better image tooltips</li>

|

||||

<li>GGUF Support</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="1.0.5" date="2024-08-02">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.0.5</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Regenerate any response, even if it is incomplete</li>

|

||||

<li>Support for pulling models by name:tag</li>

|

||||

<li>Stable support for GGUF model files</li>

|

||||

<li>Restored sidebar toggle button</li>

|

||||

</ul>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Reverted back to standard styles</li>

|

||||

<li>Fixed generated titles having "'S" for some reason</li>

|

||||

<li>Changed min width for model dropdown</li>

|

||||

<li>Changed message entry shadow</li>

|

||||

<li>The last model used is now restored when the user changes chat</li>

|

||||

<li>Better check for message finishing</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="1.0.4" date="2024-08-01">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.0.4</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Added table rendering (Thanks Nokse)</li>

|

||||

</ul>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Made support dialog more common</li>

|

||||

<li>Dialog title on tag chooser when downloading models didn't display properly</li>

|

||||

<li>Prevent chat generation from generating a title with multiple lines</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="1.0.3" date="2024-08-01">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.0.3</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Bearer Token entry on connection error dialog</li>

|

||||

<li>Small appearance changes</li>

|

||||

<li>Compatibility with code blocks without explicit language</li>

|

||||

<li>Rare, optional and dismissible support dialog</li>

|

||||

</ul>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Date format for Simplified Chinese translation</li>

|

||||

<li>Bug with unsupported localizations</li>

|

||||

<li>Min height being too large to be used on mobile</li>

|

||||

<li>Remote connection checker bug</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="1.0.2" date="2024-07-29">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.0.2</url>

|

||||

<description>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Models with capital letters on their tag don't work</li>

|

||||

<li>Ollama fails to launch on some systems</li>

|

||||

<li>YouTube transcripts are not being saved in the right TMP directory</li>

|

||||

</ul>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Debug messages are now shown on the 'About Alpaca' dialog</li>

|

||||

<li>Updated Ollama to v0.3.0 (new models)</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="1.0.1" date="2024-07-23">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.0.1</url>

|

||||

<description>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Models with '-' in their names didn't work properly; this is now fixed</li>

|

||||

<li>Better connection check for Ollama</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="1.0.0" date="2024-07-22">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.0.0</url>

|

||||

<description>

|

||||

<p>Stable Release</p>

|

||||

<p>The new icon was made by Tobias Bernard over on the GNOME GitLab, thanks for the great icon!</p>

|

||||

<p>Features and fixes</p>

|

||||

<ul>

|

||||

<li>Updated Ollama instance to 0.2.8</li>

|

||||

<li>Better model selector</li>

|

||||

<li>Model manager redesign</li>

|

||||

<li>Better tag selector when pulling a model</li>

|

||||

<li>Model search</li>

|

||||

<li>Added support for bearer tokens on remote instances</li>

|

||||

<li>Preferences dialog redesign</li>

|

||||

<li>Added context menus to interact with a chat</li>

|

||||

<li>Redesigned primary and secondary menus</li>

|

||||

<li>YouTube integration: Paste the URL of a video with a transcript and it will be added to the prompt</li>

|

||||

<li>Website integration (Experimental): Extract the text from the body of a website by adding its URL to the prompt</li>

|

||||

<li>Chat title generation</li>

|

||||

<li>Auto resizing of message entry</li>

|

||||

<li>Chat notifications</li>

|

||||

<li>Added indicator when an image is missing</li>

|

||||

<li>Auto rearrange the order of chats when a message is received</li>

|

||||

<li>Redesigned file preview dialog</li>

|

||||

<li>Credited new contributors</li>

|

||||

<li>Better stability and optimization</li>

|

||||

<li>Edit messages to change the context of a conversation</li>

|

||||

<li>Added disclaimers when pulling models</li>

|

||||

<li>Preview files before sending a message</li>

|

||||

<li>Better format for date and time on messages</li>

|

||||

<li>Error and debug logging on terminal</li>

|

||||

<li>Auto-hiding sidebar button</li>

|

||||

<li>Various UI tweaks</li>

|

||||

</ul>

|

||||

<p>New Models</p>

|

||||

<ul>

|

||||

<li>Gemma2</li>

|

||||

<li>GLM4</li>

|

||||

<li>Codegeex4</li>

|

||||

<li>InternLM2</li>

|

||||

<li>Llama3-groq-tool-use</li>

|

||||

<li>Mathstral</li>

|

||||

<li>Mistral-nemo</li>

|

||||

<li>Firefunction-v2</li>

|

||||

<li>Nuextract</li>

|

||||

</ul>

|

||||

<p>Translations</p>

|

||||

<p>These are all the available translations on 1.0.0, thanks to all the contributors!</p>

|

||||

<ul>

|

||||

<li>Russian: Alex K</li>

|

||||

<li>Spanish: Jeffser</li>

|

||||

<li>Brazilian Portuguese: Daimar Stein</li>

|

||||

<li>French: Louis Chauvet-Villaret</li>

|

||||

<li>Norwegian: CounterFlow64</li>

|

||||

<li>Bengali: Aritra Saha</li>

|

||||

<li>Simplified Chinese: Yuehao Sui</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.9.6.1" date="2024-06-22">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.9.6.1</url>

|

||||

<description>

|

||||

<p>Fix</p>

|

||||

<p>Removed DOCX compatibility temporarily due to an error with the python-lxml dependency</p>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.9.6" date="2024-06-21">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.9.6</url>

|

||||

<description>

|

||||

<p>Big Update</p>

|

||||

<ul>

|

||||

<li>Added compatibility for PDF</li>

|

||||

<li>Added compatibility for DOCX</li>

|

||||

<li>Merged 'file attachment' menu into one button</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.9.5" date="2024-06-04">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.9.5</url>

|

||||

<description>

|

||||

<p>Quick Fix</p>

|

||||

<p>There were some errors when transitioning from the old version of chats to the new version. I apologize if this caused any corruption in your chat history. This should be the only time such a transition is needed.</p>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.9.4" date="2024-06-04">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.9.4</url>

|

||||

<description>

|

||||

<p>Huge Update</p>

|

||||

<ul>

|

||||

<li>Added: Support for plain text files</li>

|

||||

<li>Added: New backend system for storing messages</li>

|

||||

<li>Added: Support for changing Ollama's overrides</li>

|

||||

<li>General Optimization</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.9.3" date="2024-06-01">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.9.3</url>

|

||||

<description>

|

||||

<p>Big Update</p>

|

||||

<ul>

|

||||

<li>Added: Support for GGUF models (experimental)</li>

|

||||

<li>Added: Support for customization and creation of models</li>

|

||||

<li>Fixed: Icons don't appear on non-GNOME systems</li>

|

||||

<li>Update Ollama to v0.1.39</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.9.2" date="2024-05-30">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.9.2</url>

|

||||

<description>

|

||||

<p>Fix</p>

|

||||

<ul>

|

||||

<li>Fixed: App didn't open if model tweaks weren't present in the config files</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.9.1" date="2024-05-29">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.9.1</url>

|

||||

<description>

|

||||

<p>Big Update</p>

|

||||

<ul>

|

||||

<li>Changed multiple icons (paper airplane for the send button)</li>

|

||||

<li>Combined export / import chat buttons into a menu</li>

|

||||

<li>Added 'model tweaks' (temperature, seed, keep_alive)</li>

|

||||

<li>Fixed send / stop button</li>

|

||||

<li>Fixed app not checking if remote connection works when starting</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.9.0" date="2024-05-29">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.9.0</url>

|

||||

<description>

|

||||

<p>Daily Update</p>

|

||||

<ul>

|

||||

<li>Added text ellipsis to chat name so it doesn't change the button width</li>

|

||||

<li>New shortcut for creating a chat (CTRL+N)</li>

|

||||

<li>New message entry design</li>

|

||||

<li>Fixed: Can't rename the same chat multiple times</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.8.8" date="2024-05-28">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.8.8</url>

|

||||

<description>

|

||||

<p>The fix</p>

|

||||

<ul>

|

||||

<li>Fixed: Ollama instance keeps running in the background even when it is disabled</li>

|

||||

<li>Fixed: Can't pull models on the integrated instance</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.8.7" date="2024-05-27">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.8.7</url>

|

||||

<description>

|

||||

<p>Quick tweaks</p>

|

||||

<ul>

|

||||

<li>Added progress bar to models that are being pulled</li>

|

||||

<li>Added size to tags when pulling a model</li>

|

||||

<li>General optimizations in the background</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.8.6" date="2024-05-26">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.8.6</url>

|

||||

<description>

|

||||

<p>Quick fixes</p>

|

||||

<ul>

|

||||

<li>Fixed: Scroll when message is received</li>

|

||||

<li>Fixed: Content doesn't change when creating a new chat</li>

|

||||

<li>Added 'Featured Models' page on welcome dialog</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.8.5" date="2024-05-26">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.8.5</url>

|

||||

<description>

|

||||

<p>Nice Update</p>

|

||||

<ul>

|

||||

<li>UI tweaks (Thanks Nokse22)</li>

|

||||

<li>General optimizations</li>

|

||||

<li>Metadata fixes</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.8.1" date="2024-05-24">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.8.1</url>

|

||||

<description>

|

||||

<p>Quick fix</p>

|

||||

<ul>

|

||||

<li>Updated Spanish translation</li>

|

||||

<li>Added compatibility for PNG</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.8.0" date="2024-05-24">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.8.0</url>

|

||||

<description>

|

||||

<p>New Update</p>

|

||||

<ul>

|

||||

<li>Updated model list</li>

|

||||

<li>Added image recognition to more models</li>

|

||||

<li>Added Brazilian Portuguese translation (Thanks Daimar Stein)</li>

|

||||

<li>Refined the general UI (Thanks Nokse22)</li>

|

||||

<li>Added 'delete message' feature</li>

|

||||

<li>Added metadata so that software distributors know that the app is compatible with mobile</li>

|

||||

<li>Changed 'send' shortcut to just the return/enter key (to add a new line use shift+return)</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.7.1" date="2024-05-23">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.7.1</url>

|

||||

<description>

|

||||

<p>Bug Fixes</p>

|

||||

<ul>

|

||||

<li>Fixed: Minor spelling mistake</li>

|

||||

<li>Added 'mobile' as a supported form factor</li>

|

||||

<li>Fixed: 'Connection Error' dialog not working properly</li>

|

||||

<li>Fixed: App might freeze randomly on startup</li>

|

||||

<li>Changed 'chats' label on sidebar to 'Alpaca'</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.7.0" date="2024-05-22">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.7.0</url>

|

||||

<description>

|

||||

<p>Cool Update</p>

|

||||

<ul>

|

||||

<li>Better design for chat window</li>

|

||||

<li>Better design for chat sidebar</li>

|

||||

<li>Fixed remote connections</li>

|

||||

<li>Fixed Ollama restarting in loop</li>

|

||||

<li>Other cool backend stuff</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.6.0" date="2024-05-21">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.6.0</url>

|

||||

<description>

|

||||

<p>Huge Update</p>

|

||||

<ul>

|

||||

<li>Added Ollama as part of Alpaca, Ollama will run in a sandbox</li>

|

||||

<li>Added option to connect to remote instances (how it worked before)</li>

|

||||

<li>Added option to import and export chats</li>

|

||||

<li>Added option to run Alpaca with Ollama in the background</li>

|

||||

<li>Added preferences dialog</li>

|

||||

<li>Changed the welcome dialog</li>

|

||||

</ul>

|

||||

<p>

|

||||

Please report any errors to the issues page, thank you.

|

||||

</p>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.5.5" date="2024-05-20">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.5.5</url>

|

||||

<description>

|

||||

<p>Yet Another Daily Update</p>

|

||||

<ul>

|

||||

<li>Added better UI for 'Manage Models' dialog</li>

|

||||

<li>Added better UI for the chat sidebar</li>

|

||||

<li>Replaced model description with a button to open Ollama's website for the model</li>

|

||||

<li>Added myself to the credits as the Spanish translator</li>

|

||||

<li>Using XDG properly to get config folder</li>

|

||||

<li>Update for translations</li>

|

||||

</ul>

|

||||

<p>

|

||||

Please report any errors to the issues page, thank you.

|

||||

</p>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.5.2" date="2024-05-19">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.5.2</url>

|

||||

<description>

|

||||

<p>Quick Fix</p>

|

||||

<ul>

|

||||

<li>The last update had some mistakes in the description of the update</li>

|

||||

</ul>

|

||||

<p>

|

||||

Please report any errors to the issues page, thank you.

|

||||

</p>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.5.1" date="2024-05-19">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.5.1</url>

|

||||

<description>

|

||||

<p>Another Daily Update</p>

|

||||

<ul>

|

||||

<li>Added full Spanish translation</li>

|

||||

<li>Added support for background pulling of multiple models</li>

|

||||

<li>Added interrupt button</li>

|

||||

<li>Added basic shortcuts</li>

|

||||

<li>Better translation support</li>

|

||||

<li>Users can now leave the chat name empty when creating a new chat; a placeholder name will be added</li>

|

||||

<li>Better scaling for different window sizes</li>

|

||||

<li>Fixed: Can't close app if first time setup fails</li>

|

||||

</ul>

|

||||

<p>

|

||||

Please report any errors to the issues page, thank you.

|

||||

</p>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.5.0" date="2024-05-19">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.5.0</url>

|

||||

<description>

|

||||

<p>Really Big Update</p>

|

||||

<ul>

|

||||

<li>Added multiple chats support!</li>

|

||||

<li>Added Pango Markup support (bold, list, title, subtitle, monospace)</li>

|

||||

<li>Added autoscroll if the user is at the bottom of the chat</li>

|

||||

<li>Added support for multiple tags on a single model</li>

|

||||

<li>Added better model management dialog</li>

|

||||

<li>Added loading spinner when sending message</li>

|

||||

<li>Added notifications if app is not active and a model pull finishes</li>

|

||||

<li>Added new symbolic icon</li>

|

||||

<li>Added frame to message textview widget</li>

|

||||

<li>Fixed "code blocks shouldn't be editable"</li>

|

||||

</ul>

|

||||

<p>

|

||||

Please report any errors to the issues page, thank you.

|

||||

</p>

|

||||

</description>

|

||||

</release>

|

||||

<release version="0.4.0" date="2024-05-17">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.4.0</url>

|

||||

<description>

|

||||

|

||||

@ -1,677 +0,0 @@

|

||||

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

|

||||

<!-- Created with Inkscape (http://www.inkscape.org/) -->

|

||||

|

||||

<svg

|

||||

inkscape:export-ydpi="96"

|

||||

inkscape:export-xdpi="96"

|

||||

inkscape:export-filename="Template.png"

|

||||

width="192"

|

||||

height="152"

|

||||

id="svg11300"

|

||||

sodipodi:version="0.32"

|

||||

inkscape:version="1.3.2 (091e20ef0f, 2023-11-25)"

|

||||

sodipodi:docname="com.jeffser.Alpaca.Source.svg"

|

||||

inkscape:output_extension="org.inkscape.output.svg.inkscape"

|

||||

version="1.0"

|

||||

style="display:inline;enable-background:new"

|

||||

viewBox="0 0 192 152"

|

||||

xml:space="preserve"

|

||||

xmlns:inkscape="http://www.inkscape.org/namespaces/inkscape"

|

||||

xmlns:sodipodi="http://sodipodi.sourceforge.net/DTD/sodipodi-0.dtd"

|

||||

xmlns:xlink="http://www.w3.org/1999/xlink"

|

||||

xmlns="http://www.w3.org/2000/svg"

|

||||

xmlns:svg="http://www.w3.org/2000/svg"

|

||||

xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"

|

||||

xmlns:cc="http://creativecommons.org/ns#"

|

||||

xmlns:dc="http://purl.org/dc/elements/1.1/"><title

|

||||

id="title4162">Adwaita Icon Template</title><defs

|

||||

id="defs3"><linearGradient

|

||||

id="linearGradient74"

|

||||

inkscape:collect="always"><stop

|

||||

style="stop-color:#b6d1f2;stop-opacity:1"

|

||||

offset="0"

|

||||

id="stop73" /><stop

|

||||

style="stop-color:#e9eef4;stop-opacity:1"

|

||||

offset="1"

|