Compare commits

No commits in common. "main" and "2.0.2" have entirely different histories.

README.md

```diff
@@ -8,6 +8,9 @@ Alpaca is an [Ollama](https://github.com/ollama/ollama) client where you can man
 
 ---
 
+> [!NOTE]
+> Please checkout [this discussion](https://github.com/Jeffser/Alpaca/discussions/292), I want to start developing a new app alongside Alpaca but I need some suggestions, thanks!
+
 > [!WARNING]
 > This project is not affiliated at all with Ollama, I'm not responsible for any damages to your device or software caused by running code given by any AI models.
 
```

@ -33,7 +36,7 @@ Alpaca is an [Ollama](https://github.com/ollama/ollama) client where you can man

|

|||||||

|

|

||||||

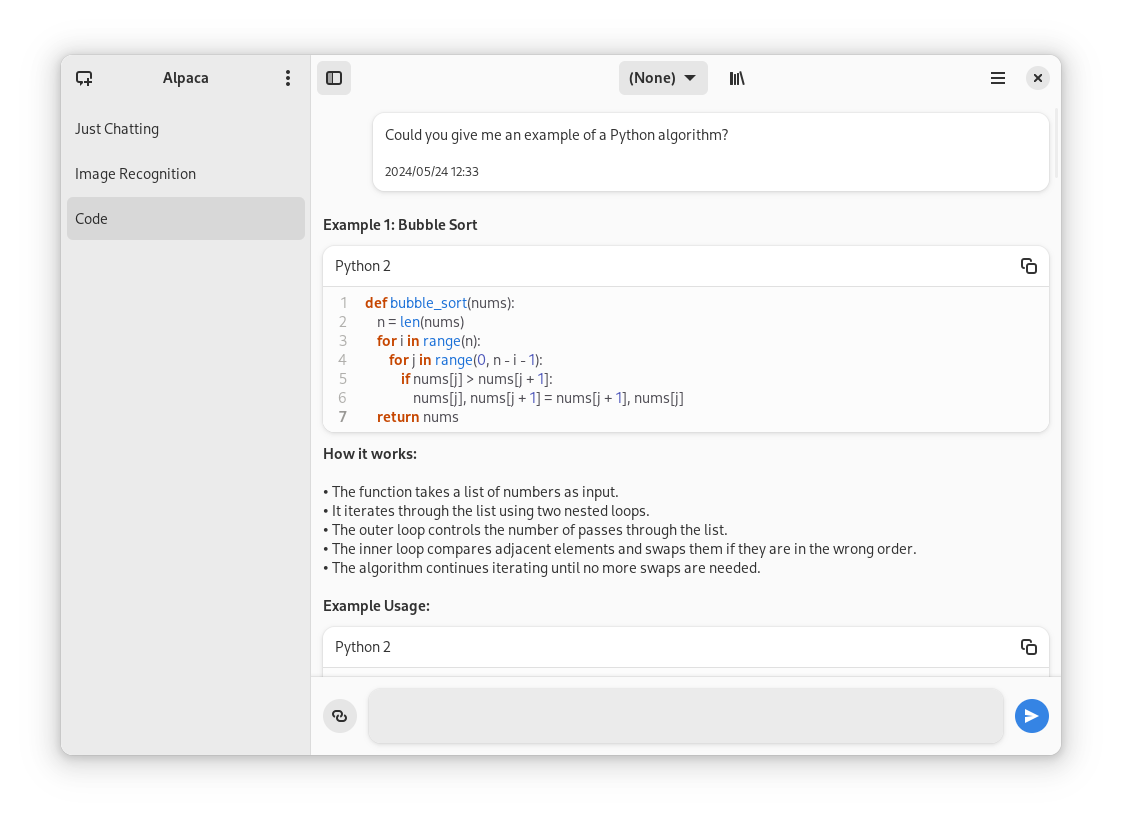

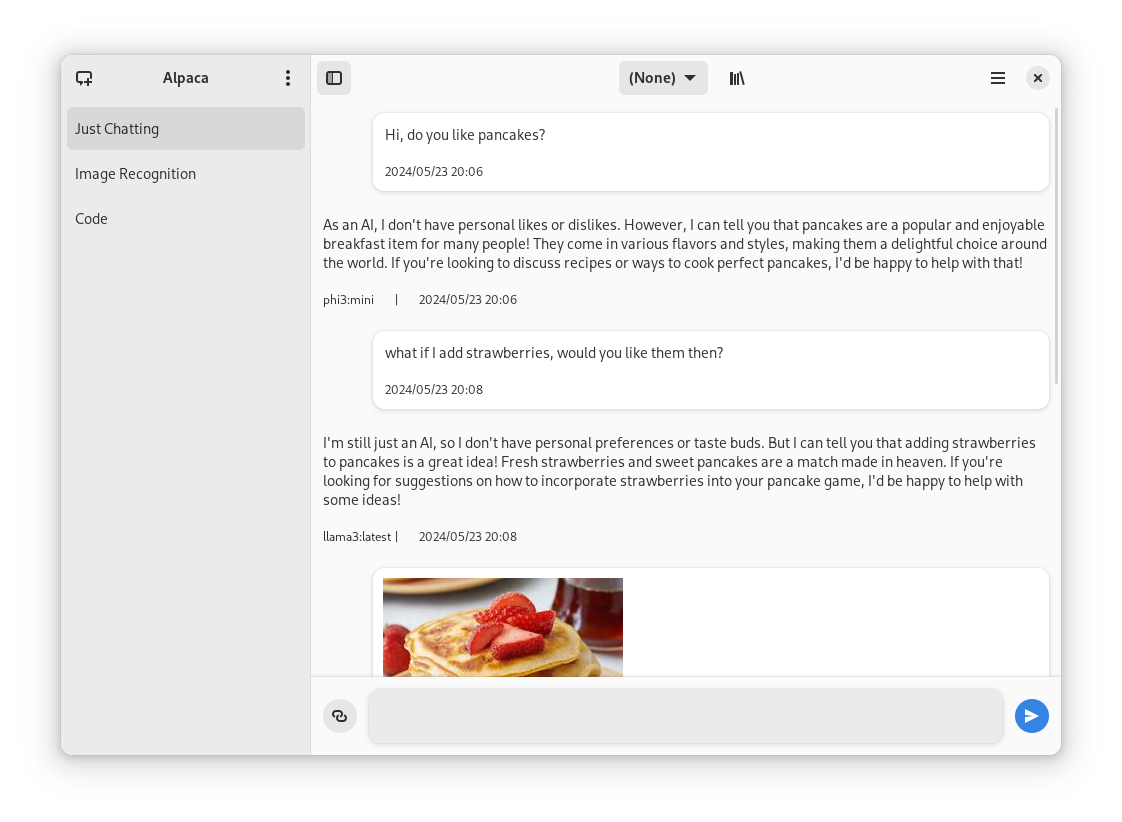

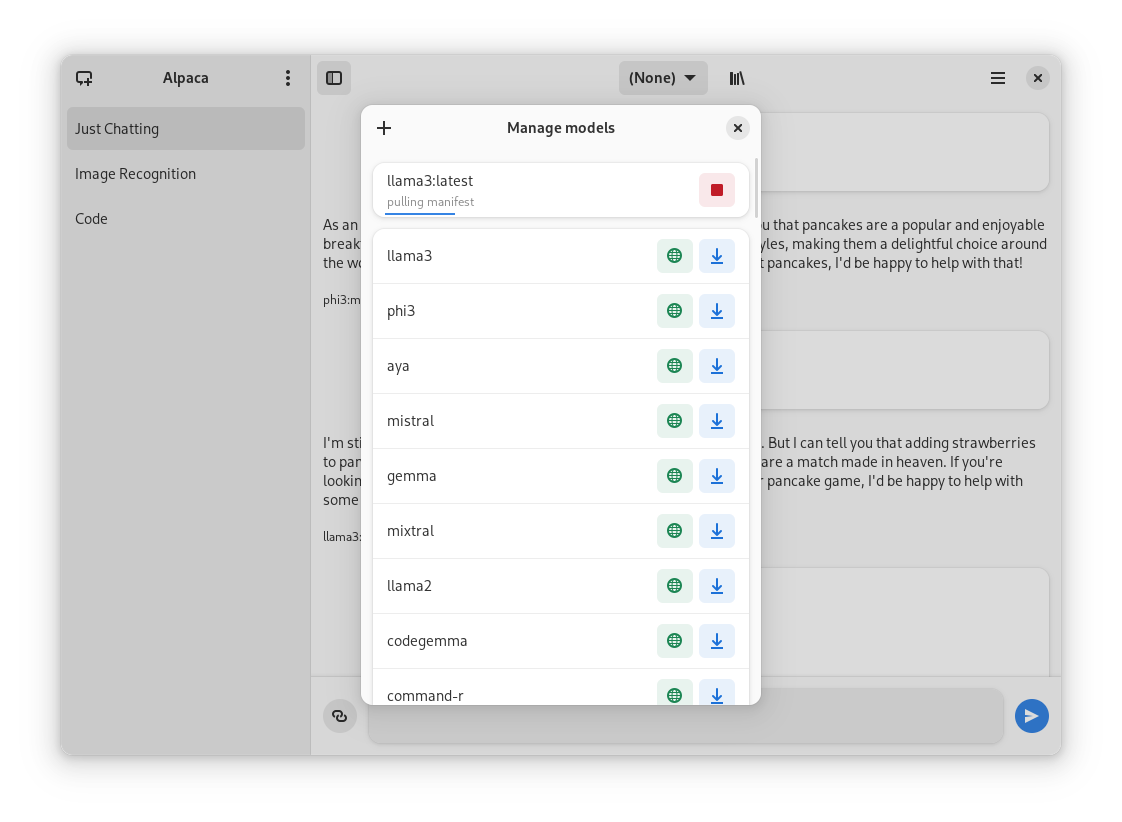

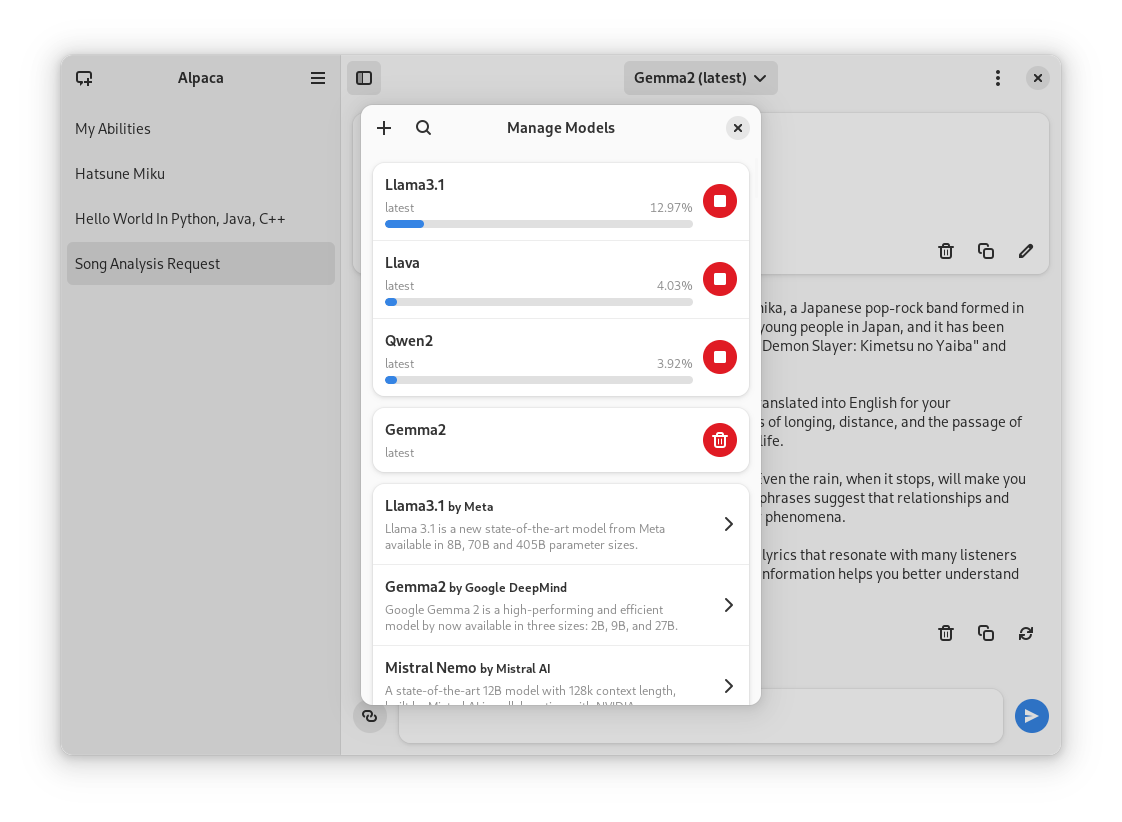

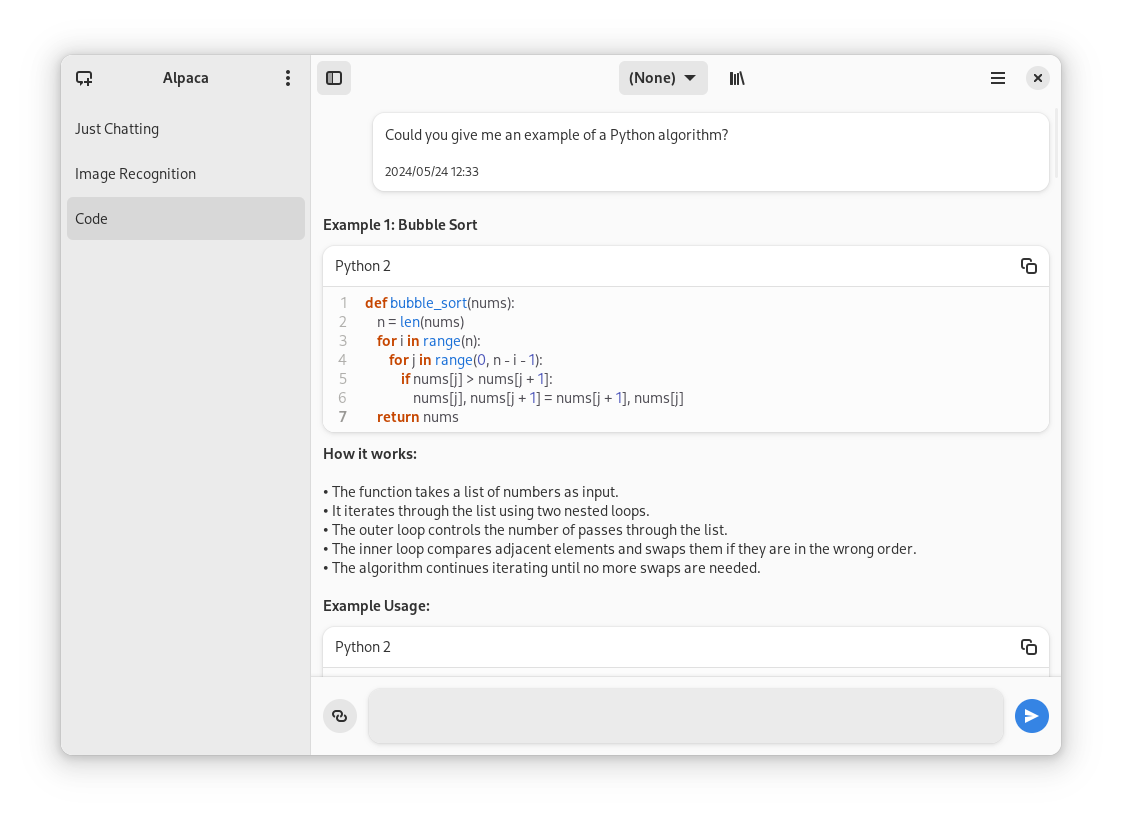

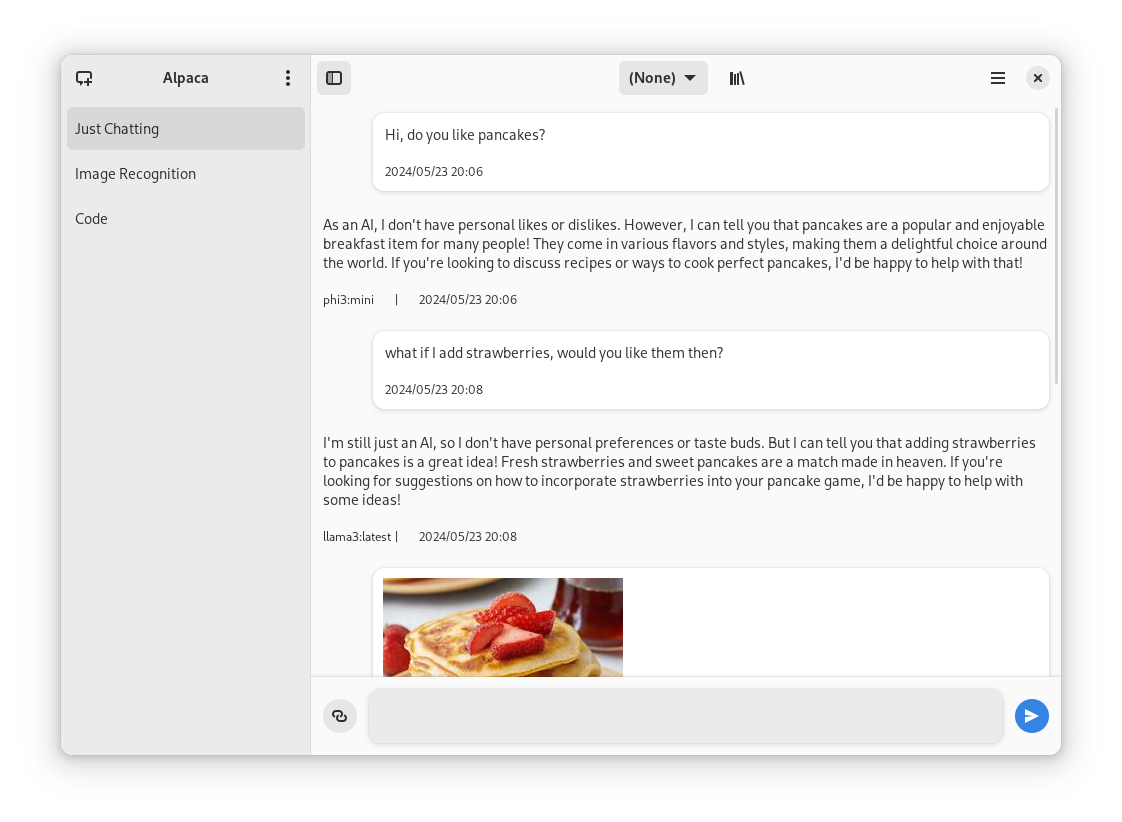

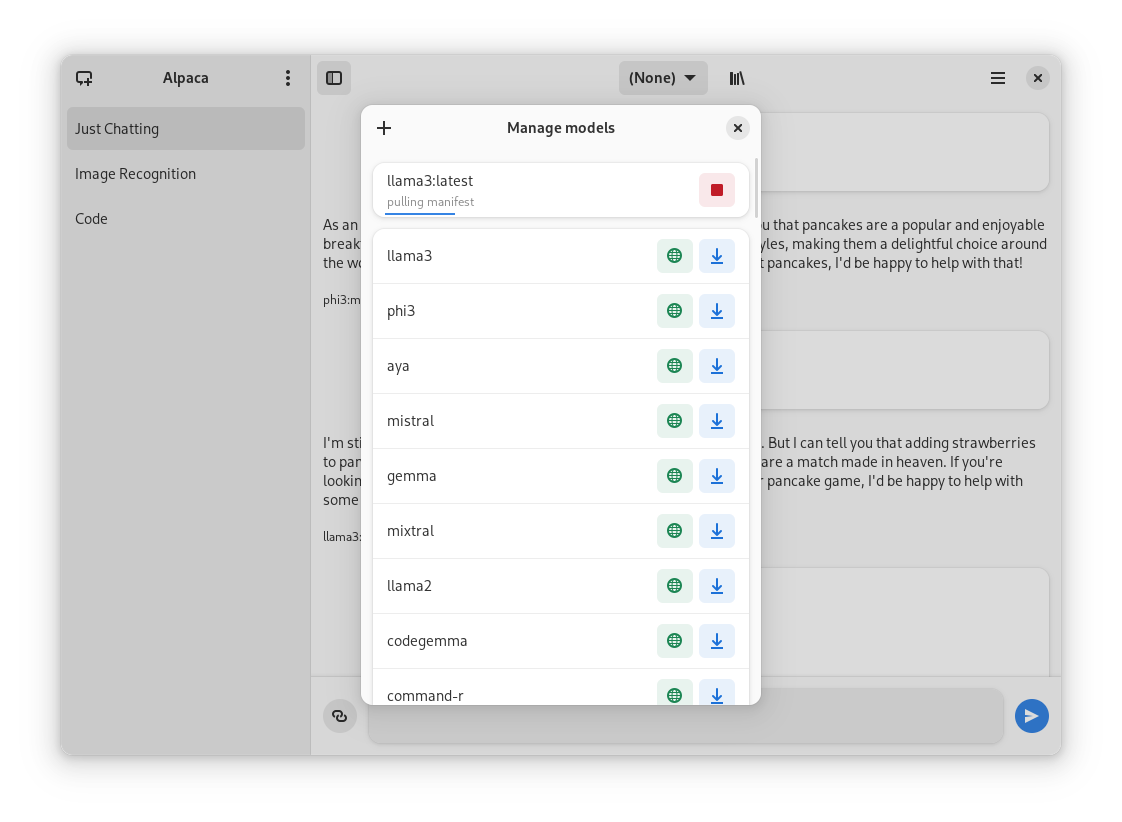

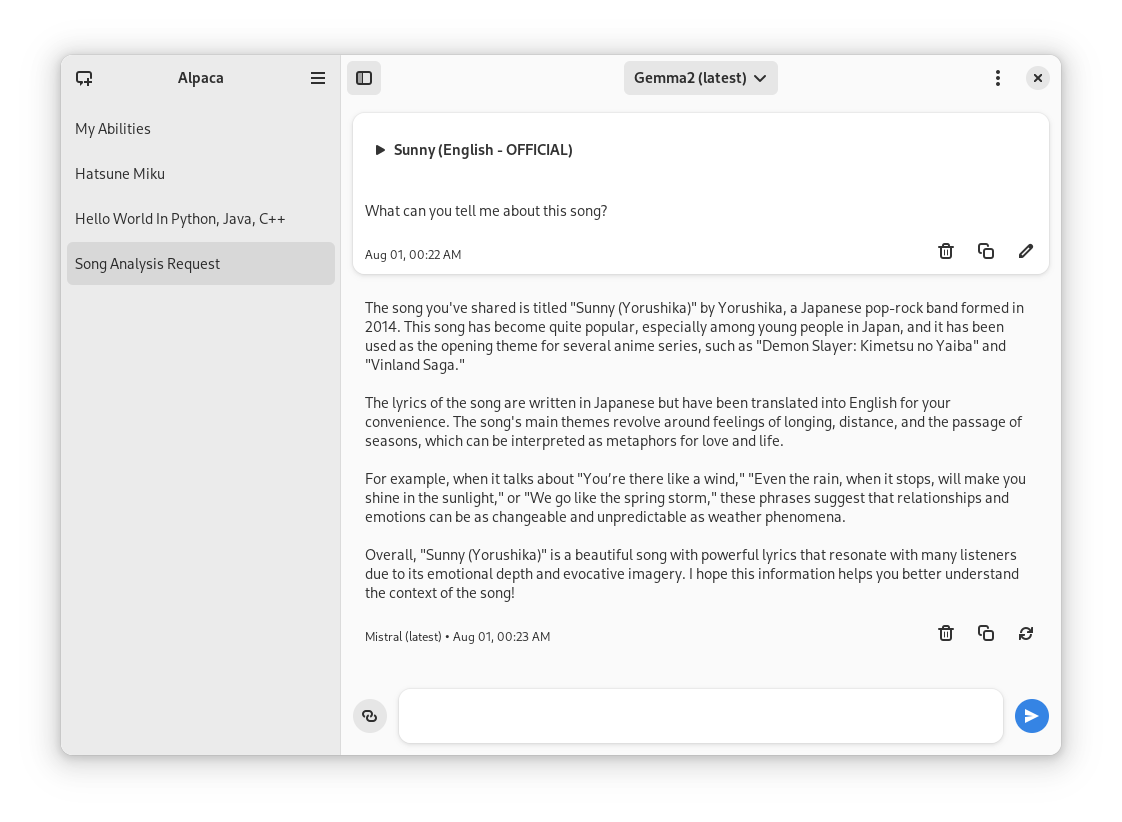

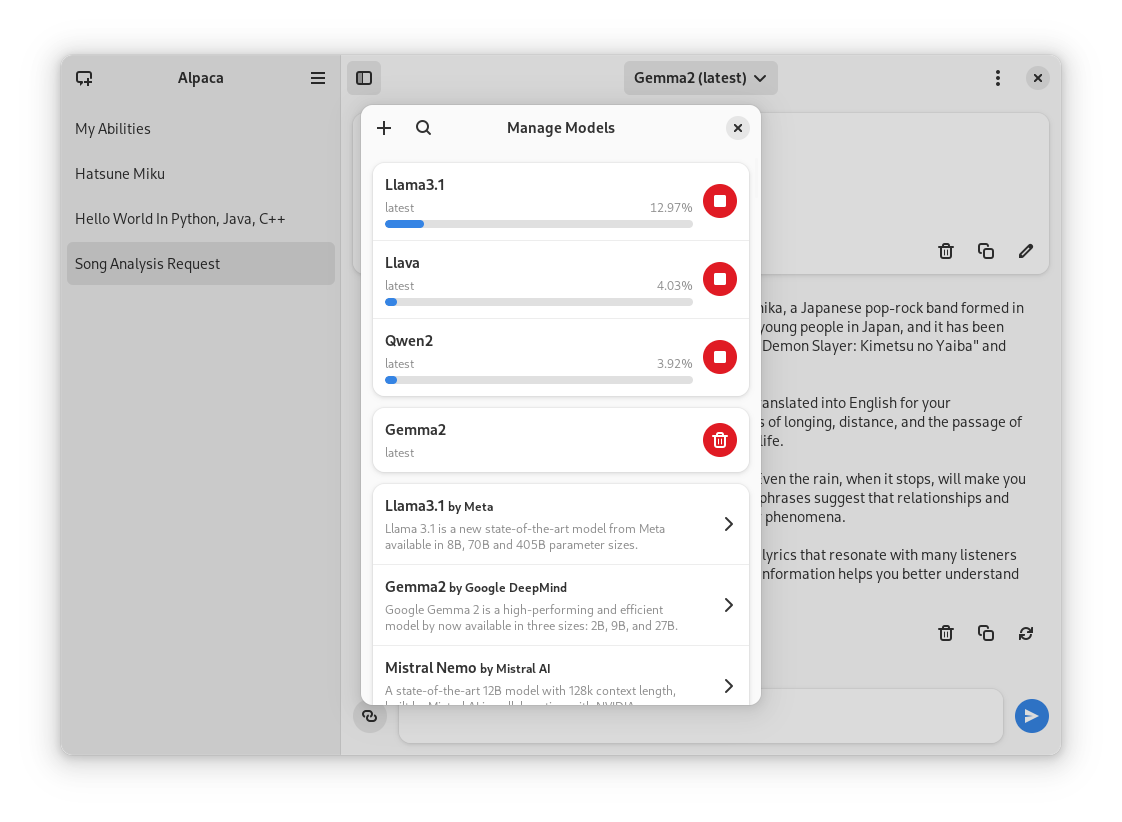

Normal conversation | Image recognition | Code highlighting | YouTube transcription | Model management

|

Normal conversation | Image recognition | Code highlighting | YouTube transcription | Model management

|

||||||

:------------------:|:-----------------:|:-----------------:|:---------------------:|:----------------:

|

:------------------:|:-----------------:|:-----------------:|:---------------------:|:----------------:

|

||||||

|  |  |  |

|

|  |  |  |

|

||||||

|

|

||||||

## Installation

|

## Installation

|

||||||

|

|

||||||

````diff
@@ -45,14 +48,6 @@ You can find the latest stable version of the app on [Flathub](https://flathub.o
 
 Everytime a new version is published they become available on the [releases page](https://github.com/Jeffser/Alpaca/releases) of the repository
 
-### Snap Package
-
-You can also find the Snap package on the [releases page](https://github.com/Jeffser/Alpaca/releases), to install it run this command:
-```BASH
-sudo snap install ./{package name} --dangerous
-```
-The `--dangerous` comes from the package being installed without any involvement of the SnapStore, I'm working on getting the app there, but for now you can test the app this way.
-
 ### Building Git Version
 
 Note: This is not recommended since the prerelease versions of the app often present errors and general instability.
````

```diff
@@ -68,7 +63,7 @@ Language | Contributors
 🇷🇺 Russian | [Alex K](https://github.com/alexkdeveloper)
 🇪🇸 Spanish | [Jeffry Samuel](https://github.com/jeffser)
 🇫🇷 French | [Louis Chauvet-Villaret](https://github.com/loulou64490) , [Théo FORTIN](https://github.com/topiga)
-🇧🇷 Brazilian Portuguese | [Daimar Stein](https://github.com/not-a-dev-stein) , [Bruno Antunes](https://github.com/antun3s)
+🇧🇷 Brazilian Portuguese | [Daimar Stein](https://github.com/not-a-dev-stein)
 🇳🇴 Norwegian | [CounterFlow64](https://github.com/CounterFlow64)
 🇮🇳 Bengali | [Aritra Saha](https://github.com/olumolu)
 🇨🇳 Simplified Chinese | [Yuehao Sui](https://github.com/8ar10der) , [Aleksana](https://github.com/Aleksanaa)
```

```diff
@@ -76,8 +71,6 @@ Language | Contributors
 🇹🇷 Turkish | [YusaBecerikli](https://github.com/YusaBecerikli)
 🇺🇦 Ukrainian | [Simon](https://github.com/OriginalSimon)
 🇩🇪 German | [Marcel Margenberg](https://github.com/MehrzweckMandala)
-🇮🇱 Hebrew | [Yosef Or Boczko](https://github.com/yoseforb)
-🇮🇳 Telugu | [Aryan Karamtoth](https://github.com/SpaciousCoder78)
 
 Want to add a language? Visit [this discussion](https://github.com/Jeffser/Alpaca/discussions/153) to get started!
 
```

```diff
@@ -91,7 +84,6 @@ Want to add a language? Visit [this discussion](https://github.com/Jeffser/Alpac
 - [Nokse](https://github.com/Nokse22) for their contributions to the UI and table rendering
 - [Louis Chauvet-Villaret](https://github.com/loulou64490) for their suggestions
 - [Aleksana](https://github.com/Aleksanaa) for her help with better handling of directories
-- [Gnome Builder Team](https://gitlab.gnome.org/GNOME/gnome-builder) for the awesome IDE I use to develop Alpaca
 - Sponsors for giving me enough money to be able to take a ride to my campus every time I need to <3
 - Everyone that has shared kind words of encouragement!
 
```

```diff
@@ -1,7 +1,7 @@
 {
 "id" : "com.jeffser.Alpaca",
 "runtime" : "org.gnome.Platform",
-"runtime-version" : "47",
+"runtime-version" : "46",
 "sdk" : "org.gnome.Sdk",
 "command" : "alpaca",
 "finish-args" : [
```

```diff
@@ -11,8 +11,7 @@
 "--device=all",
 "--socket=wayland",
 "--filesystem=/sys/module/amdgpu:ro",
-"--env=LD_LIBRARY_PATH=/app/lib:/usr/lib/x86_64-linux-gnu/GL/default/lib:/usr/lib/x86_64-linux-gnu/openh264/extra:/usr/lib/x86_64-linux-gnu/openh264/extra:/usr/lib/sdk/llvm15/lib:/usr/lib/x86_64-linux-gnu/GL/default/lib:/usr/lib/ollama:/app/plugins/AMD/lib/ollama",
-"--env=GSK_RENDERER=ngl"
+"--env=LD_LIBRARY_PATH=/app/lib:/usr/lib/x86_64-linux-gnu/GL/default/lib:/usr/lib/x86_64-linux-gnu/openh264/extra:/usr/lib/x86_64-linux-gnu/openh264/extra:/usr/lib/sdk/llvm15/lib:/usr/lib/x86_64-linux-gnu/GL/default/lib:/usr/lib/ollama:/app/plugins/AMD/lib/ollama"
 ],
 "add-extensions": {
 "com.jeffser.Alpaca.Plugins": {
```
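On both sides of this hunk, the `LD_LIBRARY_PATH` value lists `/usr/lib/x86_64-linux-gnu/openh264/extra` and `/usr/lib/x86_64-linux-gnu/GL/default/lib` twice each. The dynamic loader searches the entries from left to right, so a second copy of a directory it has already searched cannot change which library is found. The following is a minimal Python sketch, not part of the Alpaca repository, that drops repeated entries and keeps the order of first occurrence. Empty entries are kept because the loader gives them a meaning of their own (the current directory).

```python
def dedupe_search_path(value: str, sep: str = ":") -> str:
    """Drop repeated entries from a search-path string, keeping first occurrences in order."""
    seen = set()
    kept = []
    for entry in value.split(sep):
        if entry not in seen:
            seen.add(entry)
            kept.append(entry)
    return sep.join(kept)


# The value passed via --env=LD_LIBRARY_PATH=... in the manifest hunk
manifest_value = (
    "/app/lib:/usr/lib/x86_64-linux-gnu/GL/default/lib:"
    "/usr/lib/x86_64-linux-gnu/openh264/extra:/usr/lib/x86_64-linux-gnu/openh264/extra:"
    "/usr/lib/sdk/llvm15/lib:/usr/lib/x86_64-linux-gnu/GL/default/lib:"
    "/usr/lib/ollama:/app/plugins/AMD/lib/ollama"
)

print(dedupe_search_path(manifest_value))
```

The result has six entries instead of eight, and the search order is unchanged.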

```diff
@@ -111,45 +110,6 @@
 }
 ]
 },
-{
-"name": "python3-youtube-transcript-api",
-"buildsystem": "simple",
-"build-commands": [
-"pip3 install --verbose --exists-action=i --no-index --find-links=\"file://${PWD}\" --prefix=${FLATPAK_DEST} \"youtube-transcript-api\" --no-build-isolation"
-],
-"sources": [
-{
-"type": "file",
-"url": "https://files.pythonhosted.org/packages/12/90/3c9ff0512038035f59d279fddeb79f5f1eccd8859f06d6163c58798b9487/certifi-2024.8.30-py3-none-any.whl",
-"sha256": "922820b53db7a7257ffbda3f597266d435245903d80737e34f8a45ff3e3230d8"
-},
-{
-"type": "file",
-"url": "https://files.pythonhosted.org/packages/f2/4f/e1808dc01273379acc506d18f1504eb2d299bd4131743b9fc54d7be4df1e/charset_normalizer-3.4.0.tar.gz",
-"sha256": "223217c3d4f82c3ac5e29032b3f1c2eb0fb591b72161f86d93f5719079dae93e"
-},
-{
-"type": "file",
-"url": "https://files.pythonhosted.org/packages/76/c6/c88e154df9c4e1a2a66ccf0005a88dfb2650c1dffb6f5ce603dfbd452ce3/idna-3.10-py3-none-any.whl",
-"sha256": "946d195a0d259cbba61165e88e65941f16e9b36ea6ddb97f00452bae8b1287d3"
-},
-{
-"type": "file",
-"url": "https://files.pythonhosted.org/packages/f9/9b/335f9764261e915ed497fcdeb11df5dfd6f7bf257d4a6a2a686d80da4d54/requests-2.32.3-py3-none-any.whl",
-"sha256": "70761cfe03c773ceb22aa2f671b4757976145175cdfca038c02654d061d6dcc6"
-},
-{
-"type": "file",
-"url": "https://files.pythonhosted.org/packages/ce/d9/5f4c13cecde62396b0d3fe530a50ccea91e7dfc1ccf0e09c228841bb5ba8/urllib3-2.2.3-py3-none-any.whl",
-"sha256": "ca899ca043dcb1bafa3e262d73aa25c465bfb49e0bd9dd5d59f1d0acba2f8fac"
-},
-{
-"type": "file",
-"url": "https://files.pythonhosted.org/packages/52/42/5f57d37d56bdb09722f226ed81cc1bec63942da745aa27266b16b0e16a5d/youtube_transcript_api-0.6.2-py3-none-any.whl",
-"sha256": "019dbf265c6a68a0591c513fff25ed5a116ce6525832aefdfb34d4df5567121c"
-}
-]
-},
 {
 "name": "python3-html2text",
 "buildsystem": "simple",
```
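Each `"type": "file"` source in this hunk pins its download to a `sha256` digest, and flatpak-builder checks every download against that digest before building. The following minimal Python sketch performs the same kind of check with the standard library's `hashlib`. The helper names and the local wheel path are illustrative assumptions and do not come from the manifest or its tooling.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def sha256_matches(path: Path, expected_hex: str) -> bool:
    """Compare a file's digest with a pinned hex value (case-insensitive)."""
    return sha256_of(path) == expected_hex.lower()


if __name__ == "__main__":
    # Hypothetical local copy of the certifi wheel listed in the manifest
    wheel = Path("certifi-2024.8.30-py3-none-any.whl")
    pinned = "922820b53db7a7257ffbda3f597266d435245903d80737e34f8a45ff3e3230d8"
    if wheel.exists():
        print("OK" if sha256_matches(wheel, pinned) else "checksum mismatch")
```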

```diff
@@ -174,16 +134,16 @@
 "sources": [
 {
 "type": "archive",
-"url": "https://github.com/ollama/ollama/releases/download/v0.3.12/ollama-linux-amd64.tgz",
-"sha256": "f0efa42f7ad77cd156bd48c40cd22109473801e5113173b0ad04f094a4ef522b",
+"url": "https://github.com/ollama/ollama/releases/download/v0.3.9/ollama-linux-amd64.tgz",
+"sha256": "b0062fbccd46134818d9d59cfa3867ad6849163653cb1171bc852c5f379b0851",
 "only-arches": [
 "x86_64"
 ]
 },
 {
 "type": "archive",
-"url": "https://github.com/ollama/ollama/releases/download/v0.3.12/ollama-linux-arm64.tgz",
-"sha256": "da631cbe4dd2c168dae58d6868b1ff60e881e050f2d07578f2f736e689fec04c",
+"url": "https://github.com/ollama/ollama/releases/download/v0.3.9/ollama-linux-arm64.tgz",
+"sha256": "8979484bcb1448ab9b45107fbcb3b9f43c2af46f961487449b9ebf3518cd70eb",
 "only-arches": [
 "aarch64"
 ]
```
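Both archive sources in this hunk use the same release-URL pattern. They differ only in the Ollama version tag and in the architecture suffix: Flatpak's `x86_64` pairs with `amd64`, and `aarch64` pairs with `arm64`. The following Python sketch builds these URLs. The helper and its mapping table are illustrative assumptions and are not part of the manifest tooling.

```python
# Flatpak architecture name -> suffix used in Ollama's Linux release archives,
# as paired by the "only-arches" / "url" entries in the manifest hunk
ARCH_SUFFIX = {"x86_64": "amd64", "aarch64": "arm64"}


def ollama_archive_url(version: str, flatpak_arch: str) -> str:
    """Build the GitHub release download URL for an Ollama Linux archive."""
    try:
        suffix = ARCH_SUFFIX[flatpak_arch]
    except KeyError:
        raise ValueError(f"no Ollama archive mapping for arch {flatpak_arch!r}") from None
    tag = version if version.startswith("v") else f"v{version}"
    return f"https://github.com/ollama/ollama/releases/download/{tag}/ollama-linux-{suffix}.tgz"


for arch in ARCH_SUFFIX:
    print(arch, ollama_archive_url("0.3.9", arch))
```

The hunk also shows that changing the version changes every archive's `sha256`. The digests therefore have to be updated together with the URLs; they cannot be derived from the version string.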

```diff
@@ -206,18 +166,6 @@
 }
 ]
 },
-{
-"name": "vte",
-"buildsystem": "meson",
-"config-opts": ["-Dvapi=false"],
-"sources": [
-{
-"type": "archive",
-"url": "https://gitlab.gnome.org/GNOME/vte/-/archive/0.78.0/vte-0.78.0.tar.gz",
-"sha256": "82e19d11780fed4b66400f000829ce5ca113efbbfb7975815f26ed93e4c05f2d"
-}
-]
-},
 {
 "name" : "alpaca",
 "builddir" : true,
```

@ -63,14 +63,10 @@

|

|||||||

</screenshot>

|

</screenshot>

|

||||||

<screenshot>

|

<screenshot>

|

||||||

<image>https://jeffser.com/images/alpaca/screenie4.png</image>

|

<image>https://jeffser.com/images/alpaca/screenie4.png</image>

|

||||||

<caption>A Python script running inside integrated terminal</caption>

|

|

||||||

</screenshot>

|

|

||||||

<screenshot>

|

|

||||||

<image>https://jeffser.com/images/alpaca/screenie5.png</image>

|

|

||||||

<caption>A conversation involving a YouTube video transcript</caption>

|

<caption>A conversation involving a YouTube video transcript</caption>

|

||||||

</screenshot>

|

</screenshot>

|

||||||

<screenshot>

|

<screenshot>

|

||||||

<image>https://jeffser.com/images/alpaca/screenie6.png</image>

|

<image>https://jeffser.com/images/alpaca/screenie5.png</image>

|

||||||

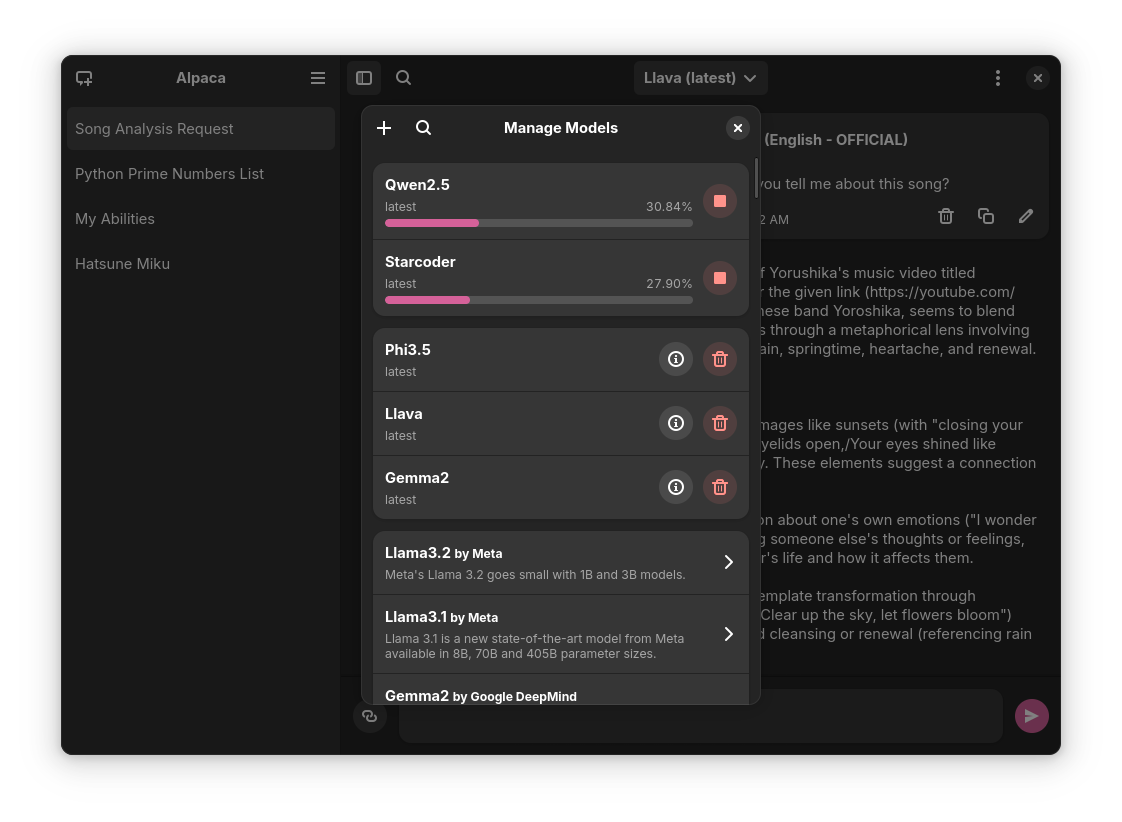

<caption>Multiple models being downloaded</caption>

|

<caption>Multiple models being downloaded</caption>

|

||||||

</screenshot>

|

</screenshot>

|

||||||

</screenshots>

|

</screenshots>

|

||||||

```diff
@@ -82,133 +78,6 @@
 <url type="contribute">https://github.com/Jeffser/Alpaca/discussions/154</url>
 <url type="vcs-browser">https://github.com/Jeffser/Alpaca</url>
 <releases>
-<release version="2.7.0" date="2024-10-15">
-<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.7.0</url>
-<description>
-<p>New</p>
-<ul>
-<li>User messages are now compacted into bubbles</li>
-</ul>
-<p>Fixes</p>
-<ul>
-<li>Fixed re connection dialog not working when 'use local instance' is selected</li>
-<li>Fixed model manager not adapting to large system fonts</li>
-</ul>
-</description>
-</release>
-<release version="2.6.5" date="2024-10-13">
-<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.6.5</url>
-<description>
-<p>New</p>
-<ul>
-<li>Details page for models</li>
-<li>Model selector gets replaced with 'manage models' button when there are no models downloaded</li>
-<li>Added warning when model is too big for the device</li>
-<li>Added AMD GPU indicator in preferences</li>
-</ul>
-</description>
-</release>
-<release version="2.6.0" date="2024-10-11">
-<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.6.0</url>
-<description>
-<p>New</p>
-<ul>
-<li>Better system for handling dialogs</li>
-<li>Better system for handling instance switching</li>
-<li>Remote connection dialog</li>
-</ul>
-<p>Fixes</p>
-<ul>
-<li>Fixed: Models get duplicated when switching remote and local instance</li>
-<li>Better internal instance manager</li>
-</ul>
-</description>
-</release>
-<release version="2.5.1" date="2024-10-09">
-<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.5.1</url>
-<description>
-<p>New</p>
-<ul>
-<li>Added 'Cancel' and 'Save' buttons when editing a message</li>
-</ul>
-<p>Fixes</p>
-<ul>
-<li>Better handling of image recognition</li>
-<li>Remove unused files when canceling a model download</li>
-<li>Better message blocks rendering</li>
-</ul>
-</description>
-</release>
-<release version="2.5.0" date="2024-10-06">
-<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.5.0</url>
-<description>
-<p>New</p>
-<ul>
-<li>Run bash and python scripts straight from chat</li>
-<li>Updated Ollama to 0.3.12</li>
-<li>New models!</li>
-</ul>
-<p>Fixes</p>
-<ul>
-<li>Fixed and made faster the launch sequence</li>
-<li>Better detection of code blocks in messages</li>
-<li>Fixed app not loading in certain setups with Nvidia GPUs</li>
-</ul>
-</description>
-</release>
-<release version="2.0.6" date="2024-09-29">
-<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.0.6</url>
-<description>
-<p>Fixes</p>
-<ul>
-<li>Fixed message notification sometimes crashing text rendering because of them running on different threads</li>
-</ul>
-</description>
-</release>
-<release version="2.0.5" date="2024-09-25">
-<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.0.5</url>
-<description>
-<p>Fixes</p>
-<ul>
-<li>Fixed message generation sometimes failing</li>
-</ul>
-</description>
-</release>
-<release version="2.0.4" date="2024-09-22">
-<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.0.4</url>
-<description>
-<p>New</p>
-<ul>
-<li>Sidebar resizes with the window</li>
-<li>New welcome dialog</li>
-<li>Message search</li>
-<li>Updated Ollama to v0.3.11</li>
-<li>A lot of new models provided by Ollama repository</li>
-</ul>
-<p>Fixes</p>
-<ul>
-<li>Fixed text inside model manager when the accessibility option 'large text' is on</li>
-<li>Fixed image recognition on unsupported models</li>
-</ul>
-</description>
-</release>
-<release version="2.0.3" date="2024-09-18">
-<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.0.3</url>
-<description>
-<p>Fixes</p>
-<ul>
-<li>Fixed spinner not hiding if the back end fails</li>
-<li>Fixed image recognition with local images</li>
-<li>Changed appearance of delete / stop model buttons</li>
-<li>Fixed stop button crashing the app</li>
-</ul>
-<p>New</p>
-<ul>
-<li>Made sidebar resize a little when the window is smaller</li>
-<li>Instant launch</li>
-</ul>
-</description>
-</release>
 <release version="2.0.2" date="2024-09-11">
 <url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.0.2</url>
 <description>
```
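This hunk removes the `<release>` entries for 2.0.3 through 2.7.0 from the metainfo `<releases>` list, and 2.0.2 becomes the first entry. The following minimal Python sketch reads the version/date pairs from such a list with the standard library's `xml.etree.ElementTree`. The embedded XML is a trimmed, assumed excerpt in the same shape, not the full metainfo file.

```python
import xml.etree.ElementTree as ET

# Trimmed excerpt shaped like the metainfo <releases> element (assumed, not the full file)
METAINFO = """
<component>
  <releases>
    <release version="2.7.0" date="2024-10-15">
      <url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.7.0</url>
    </release>
    <release version="2.6.5" date="2024-10-13"/>
    <release version="2.0.2" date="2024-09-11"/>
  </releases>
</component>
"""


def list_releases(xml_text: str) -> list[tuple[str, str]]:
    """Return (version, date) pairs for every <release> element, in document order."""
    root = ET.fromstring(xml_text.strip())
    return [(rel.get("version"), rel.get("date")) for rel in root.iter("release")]


for version, date in list_releases(METAINFO):
    print(version, date)
```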

```diff
@@ -1,5 +1,5 @@
 project('Alpaca', 'c',
-version: '2.7.0',
+version: '2.0.2',
 meson_version: '>= 0.62.0',
 default_options: [ 'warning_level=2', 'werror=false', ],
 )
```

```diff
@@ -9,5 +9,3 @@ hi
 tr
 uk
 de
-he
-te
```

```diff
@@ -5,11 +5,9 @@ src/main.py
 src/window.py
 src/available_models_descriptions.py
 src/connection_handler.py
+src/dialogs.py
 src/window.ui
-src/generic_actions.py
 src/custom_widgets/chat_widget.py
 src/custom_widgets/message_widget.py
 src/custom_widgets/model_widget.py
 src/custom_widgets/table_widget.py
-src/custom_widgets/dialog_widget.py
-src/custom_widgets/terminal_widget.py
```

po/alpaca.pot

File diff suppressed because it is too large

po/nb_NO.po

File diff suppressed because it is too large

po/pt_BR.po

File diff suppressed because it is too large

po/zh_Hans.po

File diff suppressed because it is too large

```diff
@@ -1,93 +0,0 @@
-name: jeffser-alpaca
-base: core24
-adopt-info: alpaca
-
-platforms:
-  amd64:
-  arm64:
-
-confinement: strict
-grade: stable
-compression: lzo
-
-slots:
-  dbus-alpaca:
-    interface: dbus
-    bus: session
-    name: com.jeffser.Alpaca
-
-apps:
-  alpaca:
-    command: usr/bin/alpaca
-    common-id: com.jeffser.Alpaca
-    extensions:
-      - gnome
-    plugs:
-      - network
-      - network-bind
-      - home
-      - removable-media
-
-  ollama:
-    command: bin/ollama
-    plugs:
-      - home
-      - removable-media
-      - network
-      - network-bind
-
-  ollama-daemon:
-    command: bin/ollama serve
-    daemon: simple
-    install-mode: enable
-    restart-condition: on-failure
-    plugs:
-      - home
-      - removable-media
-      - network
-      - network-bind
-
-parts:
-  # Python dependencies
-  python-deps:
-    plugin: python
-    source: .
-    python-packages:
-      - requests==2.31.0
-      - pillow==10.3.0
-      - pypdf==4.2.0
-      - pytube==15.0.0
-      - html2text==2024.2.26
-
-  # Ollama plugin
-  ollama:
-    plugin: dump
-    source:
-      - on amd64: https://github.com/ollama/ollama/releases/download/v0.3.12/ollama-linux-amd64.tgz
-      - on arm64: https://github.com/ollama/ollama/releases/download/v0.3.12/ollama-linux-arm64.tgz
-
-  # Alpaca app
-  alpaca:
-    plugin: meson
-    source-type: git
-    source: https://github.com/Jeffser/Alpaca.git
-    source-tag: 2.6.5
-    source-depth: 1
-    meson-parameters:
-      - --prefix=/snap/alpaca/current/usr
-    override-build: |
-      craftctl default
-      sed -i '1c#!/usr/bin/env python3' $CRAFT_PART_INSTALL/snap/alpaca/current/usr/bin/alpaca
-    parse-info:
-      - usr/share/metainfo/com.jeffser.Alpaca.metainfo.xml
-    organize:
-      snap/alpaca/current: .
-    after: [python-deps]
-
-  deps:
-    plugin: nil
-    after: [alpaca]
-    stage-packages:
-      - libnuma1
-    prime:
-      - usr/lib/*/libnuma.so.1*
```
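The removed `override-build` step first runs `craftctl default`, which performs the part's normal Meson build. It then runs `sed -i '1c#!/usr/bin/env python3' $CRAFT_PART_INSTALL/snap/alpaca/current/usr/bin/alpaca`, which replaces the first line of the installed launcher with an `env`-based Python shebang. The following Python sketch performs the same one-line substitution, assuming the launcher is a UTF-8 text file. `replace_shebang` is a hypothetical helper, not something the snap recipe used.

```python
from pathlib import Path


def replace_shebang(script: Path, shebang: str = "#!/usr/bin/env python3") -> None:
    """Replace line 1 of a text script, mirroring `sed -i '1c<text>' <file>`."""
    text = script.read_text(encoding="utf-8")
    if not text:
        return  # an empty file has no line 1, so there is nothing to replace
    _first, _sep, rest = text.partition("\n")
    # Keep every line after the first untouched; the new first line ends with "\n"
    script.write_text(shebang + "\n" + rest, encoding="utf-8")


if __name__ == "__main__":
    import sys

    # Usage: python replace_shebang.py path/to/usr/bin/alpaca
    for name in sys.argv[1:]:
        replace_shebang(Path(name))
```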

```diff
@@ -31,9 +31,6 @@
 <file alias="icons/scalable/status/update-symbolic.svg">icons/update-symbolic.svg</file>
 <file alias="icons/scalable/status/down-symbolic.svg">icons/down-symbolic.svg</file>
 <file alias="icons/scalable/status/chat-bubble-text-symbolic.svg">icons/chat-bubble-text-symbolic.svg</file>
-<file alias="icons/scalable/status/execute-from-symbolic.svg">icons/execute-from-symbolic.svg</file>
-<file alias="icons/scalable/status/cross-large-symbolic.svg">icons/cross-large-symbolic.svg</file>
-<file alias="icons/scalable/status/info-outline-symbolic.svg">icons/info-outline-symbolic.svg</file>
 <file preprocess="xml-stripblanks">window.ui</file>
 <file preprocess="xml-stripblanks">gtk/help-overlay.ui</file>
 </gresource>
```

File diff suppressed because it is too large

```diff
@@ -1,13 +1,11 @@
 descriptions = {
-'llama3.2': _("Meta's Llama 3.2 goes small with 1B and 3B models."),
 'llama3.1': _("Llama 3.1 is a new state-of-the-art model from Meta available in 8B, 70B and 405B parameter sizes."),
-'gemma2': _("Google Gemma 2 is a high-performing and efficient model available in three sizes: 2B, 9B, and 27B."),
-'qwen2.5': _("Qwen2.5 models are pretrained on Alibaba's latest large-scale dataset, encompassing up to 18 trillion tokens. The model supports up to 128K tokens and has multilingual support."),
-'phi3.5': _("A lightweight AI model with 3.8 billion parameters with performance overtaking similarly and larger sized models."),
-'nemotron-mini': _("A commercial-friendly small language model by NVIDIA optimized for roleplay, RAG QA, and function calling."),
-'mistral-small': _("Mistral Small is a lightweight model designed for cost-effective use in tasks like translation and summarization."),
+'gemma2': _("Google Gemma 2 is a high-performing and efficient model by now available in three sizes: 2B, 9B, and 27B."),
 'mistral-nemo': _("A state-of-the-art 12B model with 128k context length, built by Mistral AI in collaboration with NVIDIA."),
+'mistral-large': _("Mistral Large 2 is Mistral's new flagship model that is significantly more capable in code generation, mathematics, and reasoning with 128k context window and support for dozens of languages."),
+'qwen2': _("Qwen2 is a new series of large language models from Alibaba group"),
 'deepseek-coder-v2': _("An open-source Mixture-of-Experts code language model that achieves performance comparable to GPT4-Turbo in code-specific tasks."),
+'phi3': _("Phi-3 is a family of lightweight 3B (Mini) and 14B (Medium) state-of-the-art open models by Microsoft."),
 'mistral': _("The 7B model released by Mistral AI, updated to version 0.3."),
 'mixtral': _("A set of Mixture of Experts (MoE) model with open weights by Mistral AI in 8x7b and 8x22b parameter sizes."),
 'codegemma': _("CodeGemma is a collection of powerful, lightweight models that can perform a variety of coding tasks like fill-in-the-middle code completion, code generation, natural language understanding, mathematical reasoning, and instruction following."),
```
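`descriptions` maps base model names to strings wrapped in gettext's `_()` so they can be translated. Ollama refers to a pulled model as `name:tag` (for example `llama3.1:8b`), so any lookup keyed on the base name has to drop the tag first. The following hypothetical Python helper illustrates such a lookup. It stubs `_` as an identity function and copies two entries from the hunk. It is not Alpaca's own lookup code.

```python
# Stand-in for gettext's _(); in the app the strings are translated at runtime
def _(message: str) -> str:
    return message


# Two entries copied from the hunk; the real dictionary is much longer
descriptions = {
    'llama3.1': _("Llama 3.1 is a new state-of-the-art model from Meta available in 8B, 70B and 405B parameter sizes."),
    'mistral': _("The 7B model released by Mistral AI, updated to version 0.3."),
}


def describe(model_name: str, default: str = "") -> str:
    """Look up the description for a model reference such as 'llama3.1:8b'."""
    base = model_name.split(":", 1)[0]  # drop the ':tag' suffix, if present
    return descriptions.get(base, default)


print(describe("llama3.1:8b"))
print(describe("unknown-model:latest", "No description available"))
```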

```diff
@@ -17,108 +15,98 @@ descriptions = {
 'llama3': _("Meta Llama 3: The most capable openly available LLM to date"),
 'gemma': _("Gemma is a family of lightweight, state-of-the-art open models built by Google DeepMind. Updated to version 1.1"),
 'qwen': _("Qwen 1.5 is a series of large language models by Alibaba Cloud spanning from 0.5B to 110B parameters"),
-'qwen2': _("Qwen2 is a new series of large language models from Alibaba group"),
-'phi3': _("Phi-3 is a family of lightweight 3B (Mini) and 14B (Medium) state-of-the-art open models by Microsoft."),
 'llama2': _("Llama 2 is a collection of foundation language models ranging from 7B to 70B parameters."),
 'codellama': _("A large language model that can use text prompts to generate and discuss code."),
 'nomic-embed-text': _("A high-performing open embedding model with a large token context window."),
-'mxbai-embed-large': _("State-of-the-art large embedding model from mixedbread.ai"),
 'dolphin-mixtral': _("Uncensored, 8x7b and 8x22b fine-tuned models based on the Mixtral mixture of experts models that excels at coding tasks. Created by Eric Hartford."),
 'phi': _("Phi-2: a 2.7B language model by Microsoft Research that demonstrates outstanding reasoning and language understanding capabilities."),
-'deepseek-coder': _("DeepSeek Coder is a capable coding model trained on two trillion code and natural language tokens."),
-'starcoder2': _("StarCoder2 is the next generation of transparently trained open code LLMs that comes in three sizes: 3B, 7B and 15B parameters."),
 'llama2-uncensored': _("Uncensored Llama 2 model by George Sung and Jarrad Hope."),
-'dolphin-mistral': _("The uncensored Dolphin model based on Mistral that excels at coding tasks. Updated to version 2.8."),
+'deepseek-coder': _("DeepSeek Coder is a capable coding model trained on two trillion code and natural language tokens."),
+'mxbai-embed-large': _("State-of-the-art large embedding model from mixedbread.ai"),
 'zephyr': _("Zephyr is a series of fine-tuned versions of the Mistral and Mixtral models that are trained to act as helpful assistants."),
-'yi': _("Yi 1.5 is a high-performing, bilingual language model."),
-'dolphin-llama3': _("Dolphin 2.9 is a new model with 8B and 70B sizes by Eric Hartford based on Llama 3 that has a variety of instruction, conversational, and coding skills."),
+'dolphin-mistral': _("The uncensored Dolphin model based on Mistral that excels at coding tasks. Updated to version 2.8."),
+'starcoder2': _("StarCoder2 is the next generation of transparently trained open code LLMs that comes in three sizes: 3B, 7B and 15B parameters."),
 'orca-mini': _("A general-purpose model ranging from 3 billion parameters to 70 billion, suitable for entry-level hardware."),
-'llava-llama3': _("A LLaVA model fine-tuned from Llama 3 Instruct with better scores in several benchmarks."),
+'dolphin-llama3': _("Dolphin 2.9 is a new model with 8B and 70B sizes by Eric Hartford based on Llama 3 that has a variety of instruction, conversational, and coding skills."),
```

'qwen2.5-coder': _("The latest series of Code-Specific Qwen models, with significant improvements in code generation, code reasoning, and code fixing."),

|

'yi': _("Yi 1.5 is a high-performing, bilingual language model."),

|

||||||

'mistral-openorca': _("Mistral OpenOrca is a 7 billion parameter model, fine-tuned on top of the Mistral 7B model using the OpenOrca dataset."),

|

'mistral-openorca': _("Mistral OpenOrca is a 7 billion parameter model, fine-tuned on top of the Mistral 7B model using the OpenOrca dataset."),

|

||||||

|

'llava-llama3': _("A LLaVA model fine-tuned from Llama 3 Instruct with better scores in several benchmarks."),

|

||||||

'starcoder': _("StarCoder is a code generation model trained on 80+ programming languages."),

|

'starcoder': _("StarCoder is a code generation model trained on 80+ programming languages."),

|

||||||

|

'llama2-chinese': _("Llama 2 based model fine tuned to improve Chinese dialogue ability."),

|

||||||

|

'vicuna': _("General use chat model based on Llama and Llama 2 with 2K to 16K context sizes."),

|

||||||

'tinyllama': _("The TinyLlama project is an open endeavor to train a compact 1.1B Llama model on 3 trillion tokens."),

|

'tinyllama': _("The TinyLlama project is an open endeavor to train a compact 1.1B Llama model on 3 trillion tokens."),

|

||||||

'codestral': _("Codestral is Mistral AI’s first-ever code model designed for code generation tasks."),

|

'codestral': _("Codestral is Mistral AI’s first-ever code model designed for code generation tasks."),

|

||||||

-'vicuna': _("General use chat model based on Llama and Llama 2 with 2K to 16K context sizes."),
-'llama2-chinese': _("Llama 2 based model fine tuned to improve Chinese dialogue ability."),
-'snowflake-arctic-embed': _("A suite of text embedding models by Snowflake, optimized for performance."),
 'wizard-vicuna-uncensored': _("Wizard Vicuna Uncensored is a 7B, 13B, and 30B parameter model based on Llama 2 uncensored by Eric Hartford."),
-'granite-code': _("A family of open foundation models by IBM for Code Intelligence"),
-'codegeex4': _("A versatile model for AI software development scenarios, including code completion."),
 'nous-hermes2': _("The powerful family of models by Nous Research that excels at scientific discussion and coding tasks."),
-'all-minilm': _("Embedding models on very large sentence level datasets."),
 'openchat': _("A family of open-source models trained on a wide variety of data, surpassing ChatGPT on various benchmarks. Updated to version 3.5-0106."),
 'aya': _("Aya 23, released by Cohere, is a new family of state-of-the-art, multilingual models that support 23 languages."),
-'codeqwen': _("CodeQwen1.5 is a large language model pretrained on a large amount of code data."),
 'wizardlm2': _("State of the art large language model from Microsoft AI with improved performance on complex chat, multilingual, reasoning and agent use cases."),
 'tinydolphin': _("An experimental 1.1B parameter model trained on the new Dolphin 2.8 dataset by Eric Hartford and based on TinyLlama."),
+'granite-code': _("A family of open foundation models by IBM for Code Intelligence"),
 'wizardcoder': _("State-of-the-art code generation model"),
 'stable-code': _("Stable Code 3B is a coding model with instruct and code completion variants on par with models such as Code Llama 7B that are 2.5x larger."),
 'openhermes': _("OpenHermes 2.5 is a 7B model fine-tuned by Teknium on Mistral with fully open datasets."),
-'qwen2-math': _("Qwen2 Math is a series of specialized math language models built upon the Qwen2 LLMs, which significantly outperforms the mathematical capabilities of open-source models and even closed-source models (e.g., GPT4o)."),
-'bakllava': _("BakLLaVA is a multimodal model consisting of the Mistral 7B base model augmented with the LLaVA architecture."),
+'all-minilm': _("Embedding models on very large sentence level datasets."),
+'codeqwen': _("CodeQwen1.5 is a large language model pretrained on a large amount of code data."),
 'stablelm2': _("Stable LM 2 is a state-of-the-art 1.6B and 12B parameter language model trained on multilingual data in English, Spanish, German, Italian, French, Portuguese, and Dutch."),
-'llama3-gradient': _("This model extends LLama-3 8B's context length from 8k to over 1m tokens."),
-'deepseek-llm': _("An advanced language model crafted with 2 trillion bilingual tokens."),
 'wizard-math': _("Model focused on math and logic problems"),
-'glm4': _("A strong multi-lingual general language model with competitive performance to Llama 3."),
 'neural-chat': _("A fine-tuned model based on Mistral with good coverage of domain and language."),
-'reflection': _("A high-performing model trained with a new technique called Reflection-tuning that teaches a LLM to detect mistakes in its reasoning and correct course."),
-'llama3-chatqa': _("A model from NVIDIA based on Llama 3 that excels at conversational question answering (QA) and retrieval-augmented generation (RAG)."),
-'mistral-large': _("Mistral Large 2 is Mistral's new flagship model that is significantly more capable in code generation, mathematics, and reasoning with 128k context window and support for dozens of languages."),
-'moondream': _("moondream2 is a small vision language model designed to run efficiently on edge devices."),
-'xwinlm': _("Conversational model based on Llama 2 that performs competitively on various benchmarks."),
+'llama3-gradient': _("This model extends LLama-3 8B's context length from 8k to over 1m tokens."),
 'phind-codellama': _("Code generation model based on Code Llama."),
 'nous-hermes': _("General use models based on Llama and Llama 2 from Nous Research."),
-'sqlcoder': _("SQLCoder is a code completion model fined-tuned on StarCoder for SQL generation tasks"),
 'dolphincoder': _("A 7B and 15B uncensored variant of the Dolphin model family that excels at coding, based on StarCoder2."),
+'sqlcoder': _("SQLCoder is a code completion model fined-tuned on StarCoder for SQL generation tasks"),
+'xwinlm': _("Conversational model based on Llama 2 that performs competitively on various benchmarks."),
+'deepseek-llm': _("An advanced language model crafted with 2 trillion bilingual tokens."),
 'yarn-llama2': _("An extension of Llama 2 that supports a context of up to 128k tokens."),
-'smollm': _("🪐 A family of small models with 135M, 360M, and 1.7B parameters, trained on a new high-quality dataset."),
+'llama3-chatqa': _("A model from NVIDIA based on Llama 3 that excels at conversational question answering (QA) and retrieval-augmented generation (RAG)."),
 'wizardlm': _("General use model based on Llama 2."),
-'deepseek-v2': _("A strong, economical, and efficient Mixture-of-Experts language model."),
 'starling-lm': _("Starling is a large language model trained by reinforcement learning from AI feedback focused on improving chatbot helpfulness."),
-'samantha-mistral': _("A companion assistant trained in philosophy, psychology, and personal relationships. Based on Mistral."),
-'solar': _("A compact, yet powerful 10.7B large language model designed for single-turn conversation."),
+'codegeex4': _("A versatile model for AI software development scenarios, including code completion."),
+'snowflake-arctic-embed': _("A suite of text embedding models by Snowflake, optimized for performance."),
 'orca2': _("Orca 2 is built by Microsoft research, and are a fine-tuned version of Meta's Llama 2 models. The model is designed to excel particularly in reasoning."),
-'stable-beluga': _("Llama 2 based model fine tuned on an Orca-style dataset. Originally called Free Willy."),
+'solar': _("A compact, yet powerful 10.7B large language model designed for single-turn conversation."),
+'samantha-mistral': _("A companion assistant trained in philosophy, psychology, and personal relationships. Based on Mistral."),
+'moondream': _("moondream2 is a small vision language model designed to run efficiently on edge devices."),
+'smollm': _("🪐 A family of small models with 135M, 360M, and 1.7B parameters, trained on a new high-quality dataset."),
+'stable-beluga': _("🪐 A family of small models with 135M, 360M, and 1.7B parameters, trained on a new high-quality dataset."),
+'qwen2-math': _("Qwen2 Math is a series of specialized math language models built upon the Qwen2 LLMs, which significantly outperforms the mathematical capabilities of open-source models and even closed-source models (e.g., GPT4o)."),
 'dolphin-phi': _("2.7B uncensored Dolphin model by Eric Hartford, based on the Phi language model by Microsoft Research."),
+'deepseek-v2': _("A strong, economical, and efficient Mixture-of-Experts language model."),
+'bakllava': _("BakLLaVA is a multimodal model consisting of the Mistral 7B base model augmented with the LLaVA architecture."),
+'glm4': _("A strong multi-lingual general language model with competitive performance to Llama 3."),
 'wizardlm-uncensored': _("Uncensored version of Wizard LM model"),
-'hermes3': _("Hermes 3 is the latest version of the flagship Hermes series of LLMs by Nous Research"),
-'yi-coder': _("Yi-Coder is a series of open-source code language models that delivers state-of-the-art coding performance with fewer than 10 billion parameters."),
-'llava-phi3': _("A new small LLaVA model fine-tuned from Phi 3 Mini."),
-'internlm2': _("InternLM2.5 is a 7B parameter model tailored for practical scenarios with outstanding reasoning capability."),
 'yarn-mistral': _("An extension of Mistral to support context windows of 64K or 128K."),
-'llama-pro': _("An expansion of Llama 2 that specializes in integrating both general language understanding and domain-specific knowledge, particularly in programming and mathematics."),
+'phi3.5': _("A lightweight AI model with 3.8 billion parameters with performance overtaking similarly and larger sized models."),
 'medllama2': _("Fine-tuned Llama 2 model to answer medical questions based on an open source medical dataset."),
+'llama-pro': _("An expansion of Llama 2 that specializes in integrating both general language understanding and domain-specific knowledge, particularly in programming and mathematics."),
+'llava-phi3': _("A new small LLaVA model fine-tuned from Phi 3 Mini."),
 'meditron': _("Open-source medical large language model adapted from Llama 2 to the medical domain."),
-'nexusraven': _("Nexus Raven is a 13B instruction tuned model for function calling tasks."),
 'nous-hermes2-mixtral': _("The Nous Hermes 2 model from Nous Research, now trained over Mixtral."),
+'nexusraven': _("Nexus Raven is a 13B instruction tuned model for function calling tasks."),
 'codeup': _("Great code generation model based on Llama2."),
-'llama3-groq-tool-use': _("A series of models from Groq that represent a significant advancement in open-source AI capabilities for tool use/function calling."),
 'everythinglm': _("Uncensored Llama2 based model with support for a 16K context window."),
+'hermes3': _("Hermes 3 is the latest version of the flagship Hermes series of LLMs by Nous Research"),
+'internlm2': _("InternLM2.5 is a 7B parameter model tailored for practical scenarios with outstanding reasoning capability."),
 'magicoder': _("🎩 Magicoder is a family of 7B parameter models trained on 75K synthetic instruction data using OSS-Instruct, a novel approach to enlightening LLMs with open-source code snippets."),
 'stablelm-zephyr': _("A lightweight chat model allowing accurate, and responsive output without requiring high-end hardware."),
 'codebooga': _("A high-performing code instruct model created by merging two existing code models."),
-'wizard-vicuna': _("Wizard Vicuna is a 13B parameter model based on Llama 2 trained by MelodysDreamj."),
 'mistrallite': _("MistralLite is a fine-tuned model based on Mistral with enhanced capabilities of processing long contexts."),
+'llama3-groq-tool-use': _("A series of models from Groq that represent a significant advancement in open-source AI capabilities for tool use/function calling."),
 'falcon2': _("Falcon2 is an 11B parameters causal decoder-only model built by TII and trained over 5T tokens."),
+'wizard-vicuna': _("Wizard Vicuna is a 13B parameter model based on Llama 2 trained by MelodysDreamj."),
 'duckdb-nsql': _("7B parameter text-to-SQL model made by MotherDuck and Numbers Station."),
-'minicpm-v': _("A series of multimodal LLMs (MLLMs) designed for vision-language understanding."),
 'megadolphin': _("MegaDolphin-2.2-120b is a transformation of Dolphin-2.2-70b created by interleaving the model with itself."),
 'notux': _("A top-performing mixture of experts model, fine-tuned with high-quality data."),
 'goliath': _("A language model created by combining two fine-tuned Llama 2 70B models into one."),
 'open-orca-platypus2': _("Merge of the Open Orca OpenChat model and the Garage-bAInd Platypus 2 model. Designed for chat and code generation."),
 'notus': _("A 7B chat model fine-tuned with high-quality data and based on Zephyr."),
-'bge-m3': _("BGE-M3 is a new model from BAAI distinguished for its versatility in Multi-Functionality, Multi-Linguality, and Multi-Granularity."),
-'mathstral': _("MathΣtral: a 7B model designed for math reasoning and scientific discovery by Mistral AI."),
 'dbrx': _("DBRX is an open, general-purpose LLM created by Databricks."),
-'solar-pro': _("Solar Pro Preview: an advanced large language model (LLM) with 22 billion parameters designed to fit into a single GPU"),
-'nuextract': _("A 3.8B model fine-tuned on a private high-quality synthetic dataset for information extraction, based on Phi-3."),
+'mathstral': _("MathΣtral: a 7B model designed for math reasoning and scientific discovery by Mistral AI."),
+'bge-m3': _("BGE-M3 is a new model from BAAI distinguished for its versatility in Multi-Functionality, Multi-Linguality, and Multi-Granularity."),
 'alfred': _("A robust conversational model designed to be used for both chat and instruct use cases."),
 'firefunction-v2': _("An open weights function calling model based on Llama 3, competitive with GPT-4o function calling capabilities."),
-'reader-lm': _("A series of models that convert HTML content to Markdown content, which is useful for content conversion tasks."),
+'nuextract': _("A 3.8B model fine-tuned on a private high-quality synthetic dataset for information extraction, based on Phi-3."),
 'bge-large': _("Embedding model from BAAI mapping texts to vectors."),
-'deepseek-v2.5': _("An upgraded version of DeekSeek-V2 that integrates the general and coding abilities of both DeepSeek-V2-Chat and DeepSeek-Coder-V2-Instruct."),
-'bespoke-minicheck': _("A state-of-the-art fact-checking model developed by Bespoke Labs."),
 'paraphrase-multilingual': _("Sentence-transformers model that can be used for tasks like clustering or semantic search."),
 }

@@ -11,8 +11,6 @@ logger = getLogger(__name__)
 
 window = None
 
-AMD_support_label = "\n<a href='https://github.com/Jeffser/Alpaca/wiki/AMD-Support'>{}</a>".format(_('Alpaca Support'))
-
 def log_output(pipe):
 with open(os.path.join(data_dir, 'tmp.log'), 'a') as f:
 with pipe:
@@ -21,18 +19,7 @@ def log_output(pipe):
 print(line, end='')
 f.write(line)
 f.flush()
-if 'msg="model request too large for system"' in line:
-window.show_toast(_("Model request too large for system"), window.main_overlay)
-elif 'msg="amdgpu detected, but no compatible rocm library found.' in line:
-if bool(os.getenv("FLATPAK_ID")):
-window.ollama_information_label.set_label(_("AMD GPU detected but the extension is missing, Ollama will use CPU.") + AMD_support_label)
-else:
-window.ollama_information_label.set_label(_("AMD GPU detected but ROCm is missing, Ollama will use CPU.") + AMD_support_label)
-window.ollama_information_label.set_css_classes(['dim-label', 'error'])
-elif 'msg="amdgpu is supported"' in line:
-window.ollama_information_label.set_label(_("Using AMD GPU type '{}'").format(line.split('=')[-1]))
-window.ollama_information_label.set_css_classes(['dim-label', 'success'])
-except Exception as e:
+except:
 pass
 
 class instance():
@@ -105,7 +92,6 @@ class instance():
 self.idle_timer.start()
 
 def start(self):
-self.stop()
 if shutil.which('ollama'):
 if not os.path.isdir(os.path.join(cache_dir, 'tmp/ollama')):
 os.mkdir(os.path.join(cache_dir, 'tmp/ollama'))
@@ -129,10 +115,10 @@ class instance():
 self.instance = instance
 if not self.idle_timer:
 self.start_timer()
-window.ollama_information_label.set_label(_("Integrated Ollama instance is running"))
-window.ollama_information_label.set_css_classes(['dim-label', 'success'])
 else:
 self.remote = True
+if not self.remote_url:
+window.remote_connection_entry.set_text('http://0.0.0.0:11434')
 window.remote_connection_switch.set_sensitive(True)
 window.remote_connection_switch.set_active(True)
 
@@ -145,8 +131,6 @@ class instance():
 self.instance.terminate()
 self.instance.wait()
 self.instance = None
-window.ollama_information_label.set_label(_("Integrated Ollama instance is not running"))
-window.ollama_information_label.set_css_classes(['dim-label'])
 logger.info("Stopped Alpaca's Ollama instance")
 
 def reset(self):

@@ -6,7 +6,7 @@ Handles the chat widget (testing)
 import gi
 gi.require_version('Gtk', '4.0')
 gi.require_version('GtkSource', '5')
-from gi.repository import Gtk, Gio, Adw, Gdk, GLib
+from gi.repository import Gtk, Gio, Adw, Gdk
 import logging, os, datetime, shutil, random, tempfile, tarfile, json
 from ..internal import data_dir
 from .message_widget import message
@@ -66,15 +66,13 @@ class chat(Gtk.ScrolledWindow):
 vexpand=True,
 hexpand=True,
 css_classes=["undershoot-bottom"],
-name=name,
-hscrollbar_policy=2
+name=name
 )
 self.messages = {}
 self.welcome_screen = None
 self.regenerate_button = None
 self.busy = False
-#self.get_vadjustment().connect('notify::page-size', lambda va, *_: va.set_value(va.get_upper() - va.get_page_size()) if va.get_value() == 0 else None)
-##TODO Figure out how to do this with the search thing
+self.get_vadjustment().connect('notify::page-size', lambda va, *_: va.set_value(va.get_upper() - va.get_page_size()) if va.get_value() == 0 else None)
 
 def stop_message(self):
 self.busy = False
@@ -87,8 +85,6 @@ class chat(Gtk.ScrolledWindow):
 self.stop_message()
 for widget in list(self.container):
 self.container.remove(widget)
-self.show_welcome_screen(len(window.model_manager.get_model_list()) > 0)
-print('clear chat for some reason')
 
 def add_message(self, message_id:str, model:str=None):
 msg = message(message_id, model)
@@ -105,9 +101,7 @@ class chat(Gtk.ScrolledWindow):
 if self.welcome_screen:
 self.container.remove(self.welcome_screen)
 self.welcome_screen = None
-if len(list(self.container)) > 0:
-self.clear_chat()
-return
+self.clear_chat()
 button_container = Gtk.Box(
 orientation=1,
 spacing=10,
@@ -127,7 +121,7 @@ class chat(Gtk.ScrolledWindow):
 tooltip_text=_("Open Model Manager"),
 css_classes=["suggested-action", "pill"]
 )
-button.set_action_name('app.manage_models')
+button.connect('clicked', lambda *_ : window.manage_models_dialog.present(window))
 button_container.append(button)
 
 self.welcome_screen = Adw.StatusPage(
@@ -159,8 +153,8 @@ class chat(Gtk.ScrolledWindow):
 for file_name, file_type in message_data['files'].items():
 files[os.path.join(data_dir, "chats", self.get_name(), message_id, file_name)] = file_type
 message_element.add_attachments(files)
-GLib.idle_add(message_element.set_text, message_data['content'])
+message_element.set_text(message_data['content'])