Compare commits

14 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | e4360925b6 | | |
| | 425e1b0211 | | |
| | 529687ffdb | | |
| | 34e3511d62 | | |
| | 70e2c81eff | | |
| | d8ba1f5696 | | |
| | b21f7490ec | | |
| | 33eed32a15 | | |
| | b9887d9286 | | |
| | d1fbdad486 | | |
| | 28d0860522 | | |
| | a4981b8e9c | | |
| | 76486da3d4 | | |
| | c7303cd278 | | |

12

README.md


@@ -21,24 +21,24 @@ An [Ollama](https://github.com/ollama/ollama) client made with GTK4 and Adwaita.

|

|||||||

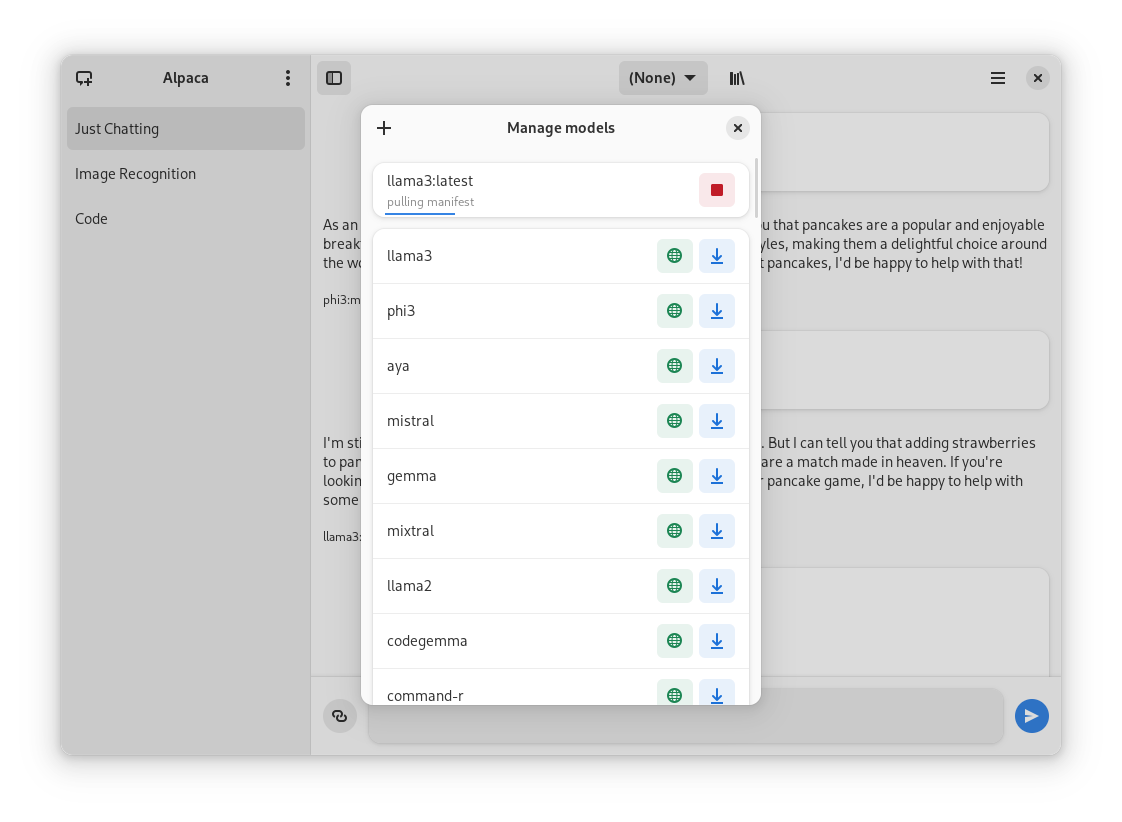

- Pull and delete models from the app

|

- Pull and delete models from the app

|

||||||

|

|

||||||

## Future features!

|

## Future features!

|

||||||

- Persistent conversations

|

|

||||||

- Multiple conversations

|

- Multiple conversations

|

||||||

- Image / document recognition

|

- Image / document recognition

|

||||||

|

- Notifications

|

||||||

|

- Code highlighting

|

||||||

|

|

||||||

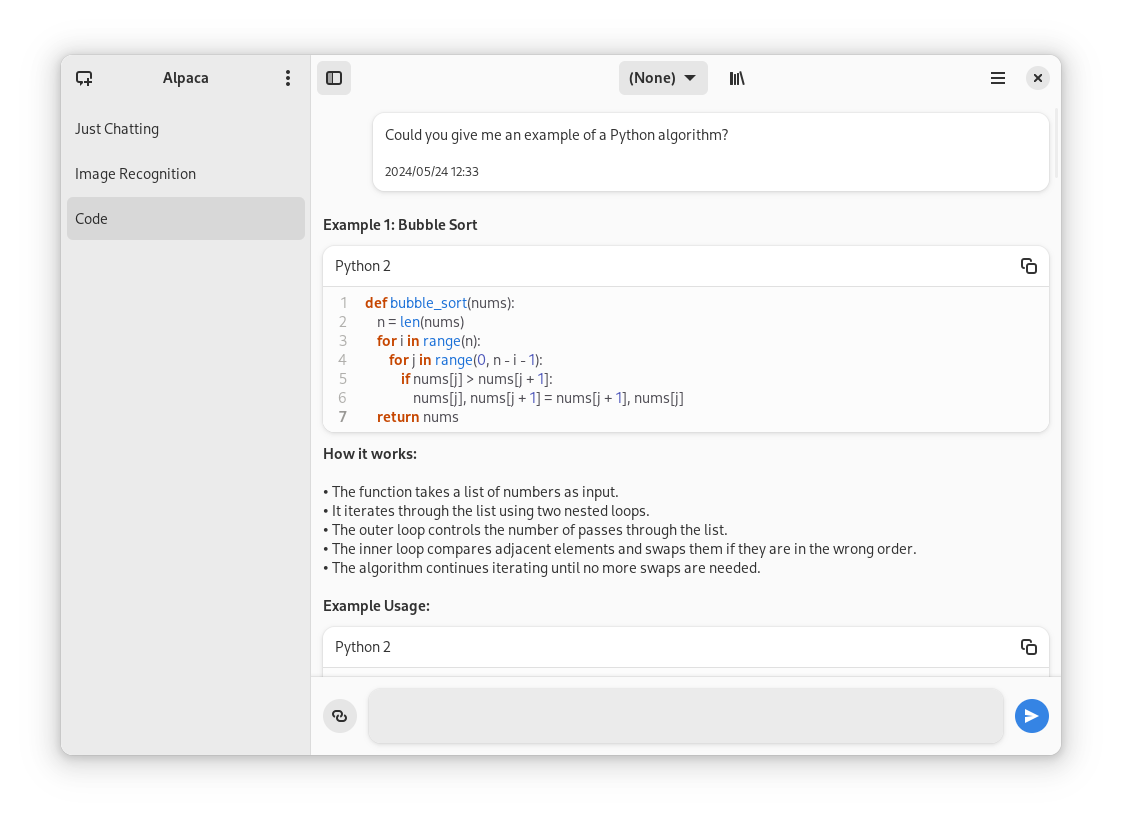

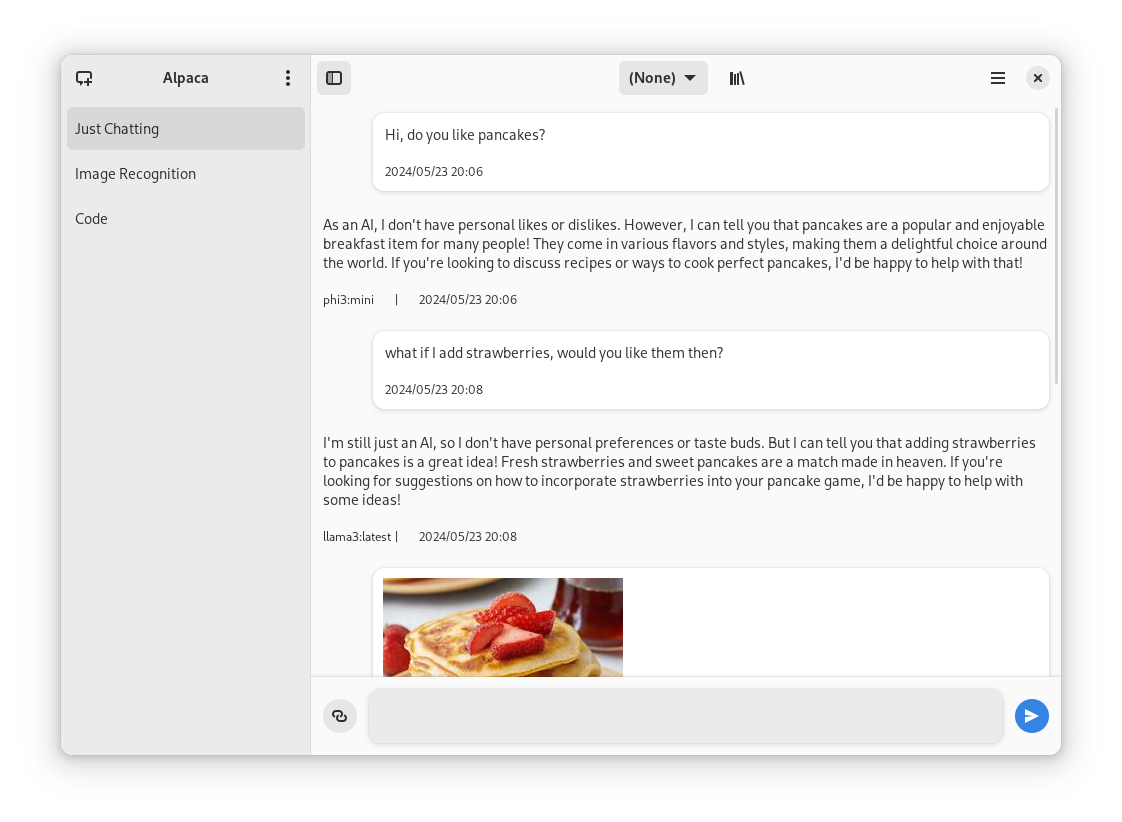

## Screenies

|

## Screenies

|

||||||

Login to Ollama instance | Chatting with models | Managing models

|

Login to Ollama instance | Chatting with models | Managing models

|

||||||

:-------------------------:|:-------------------------:|:-------------------------:

|

:-------------------------:|:-------------------------:|:-------------------------:

|

||||||

|  |

|

|  |

|

||||||

|

|

||||||

## Preview

|

## Preview

|

||||||

1. Clone repo using Gnome Builder

|

1. Clone repo using Gnome Builder

|

||||||

2. Press the `run` button

|

2. Press the `run` button

|

||||||

|

|

||||||

## Instalation

|

## Instalation

|

||||||

1. Clone repo using Gnome Builder

|

1. Go to the `releases` page

|

||||||

2. Build the app using the `build` button

|

2. Download the latest flatpak package

|

||||||

3. Prepare the file using the `install` button (it doesn't actually install it, idk)

|

3. Open it

|

||||||

4. Then press the `export` button, it will export a `com.jeffser.Alpaca.flatpak` file, you can install it just by opening it

|

|

||||||

|

|

||||||

## Usage

|

## Usage

|

||||||

- You'll need an Ollama instance, I recommend using the [Docker image](https://ollama.com/blog/ollama-is-now-available-as-an-official-docker-image)

|

- You'll need an Ollama instance, I recommend using the [Docker image](https://ollama.com/blog/ollama-is-now-available-as-an-official-docker-image)

|

||||||

|

|||||||

@@ -64,8 +64,7 @@

|

|||||||

"sources" : [

|

"sources" : [

|

||||||

{

|

{

|

||||||

"type" : "git",

|

"type" : "git",

|

||||||

"url" : "https://github.com/Jeffser/Alpaca.git",

|

"url" : "file:///home/tentri/Documents/Alpaca"

|

||||||

"tag": "0.2.0"

|

|

||||||

}

|

}

|

||||||

]

|

]

|

||||||

}

|

}

|

||||||

|

|||||||

@@ -7,7 +7,8 @@

|

|||||||

<name>Alpaca</name>

|

<name>Alpaca</name>

|

||||||

<summary>An Ollama client</summary>

|

<summary>An Ollama client</summary>

|

||||||

<description>

|

<description>

|

||||||

<p>Made with GTK4 and Adwaita.</p>

|

<p>Chat with multiple AI models</p>

|

||||||

|

<p>An Ollama client</p>

|

||||||

<p>Features</p>

|

<p>Features</p>

|

||||||

<ul>

|

<ul>

|

||||||

<li>Talk to multiple models in the same conversation</li>

|

<li>Talk to multiple models in the same conversation</li>

|

||||||

@@ -29,8 +30,8 @@

|

|||||||

<category>Chat</category>

|

<category>Chat</category>

|

||||||

</categories>

|

</categories>

|

||||||

<branding>

|

<branding>

|

||||||

<color type="primary" scheme_preference="light">#ff00ff</color>

|

<color type="primary" scheme_preference="light">#8cdef5</color>

|

||||||

<color type="primary" scheme_preference="dark">#993d3d</color>

|

<color type="primary" scheme_preference="dark">#0f2b78</color>

|

||||||

</branding>

|

</branding>

|

||||||

<screenshots>

|

<screenshots>

|

||||||

<screenshot type="default">

|

<screenshot type="default">

|

||||||

@@ -51,6 +52,46 @@

|

|||||||

<url type="homepage">https://github.com/Jeffser/Alpaca</url>

|

<url type="homepage">https://github.com/Jeffser/Alpaca</url>

|

||||||

<url type="donation">https://github.com/sponsors/Jeffser</url>

|

<url type="donation">https://github.com/sponsors/Jeffser</url>

|

||||||

<releases>

|

<releases>

|

||||||

|

<release version="0.3.0" date="2024-05-16">

|

||||||

|

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.3.0</url>

|

||||||

|

<description>

|

||||||

|

<p>Fixes and features</p>

|

||||||

|

<ul>

|

||||||

|

<li>Russian translation (thanks github/alexkdeveloper)</li>

|

||||||

|

<li>Fixed: Cannot close app on first setup</li>

|

||||||

|

<li>Fixed: Brand colors for Flathub</li>

|

||||||

|

<li>Fixed: App description</li>

|

||||||

|

<li>Fixed: Only show 'save changes dialog' when you actually change the url</li>

|

||||||

|

</ul>

|

||||||

|

<p>

|

||||||

|

Please report any errors to the issues page, thank you.

|

||||||

|

</p>

|

||||||

|

</description>

|

||||||

|

</release>

|

||||||

|

<release version="0.2.2" date="2024-05-14">

|

||||||

|

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.2.1</url>

|

||||||

|

<description>

|

||||||

|

<p>0.2.2 Bug fixes</p>

|

||||||

|

<ul>

|

||||||

|

<li>Toast messages appearing behind dialogs</li>

|

||||||

|

<li>Local model list not updating when changing servers</li>

|

||||||

|

<li>Closing the setup dialog closes the whole app</li>

|

||||||

|

</ul>

|

||||||

|

<p>

|

||||||

|

Please report any errors to the issues page, thank you.

|

||||||

|

</p>

|

||||||

|

</description>

|

||||||

|

</release>

|

||||||

|

<release version="0.2.1" date="2024-05-14">

|

||||||

|

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.2.1</url>

|

||||||

|

<description>

|

||||||

|

<p>0.2.1 Data saving fix</p>

|

||||||

|

<p>The app didn't save the config files and chat history to the right directory, this is now fixed</p>

|

||||||

|

<p>

|

||||||

|

Please report any errors to the issues page, thank you.

|

||||||

|

</p>

|

||||||

|

</description>

|

||||||

|

</release>

|

||||||

<release version="0.2.0" date="2024-05-14">

|

<release version="0.2.0" date="2024-05-14">

|

||||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.2.0</url>

|

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.2.0</url>

|

||||||

<description>

|

<description>

|

||||||

|

|||||||

@@ -1,5 +1,5 @@

|

|||||||

project('Alpaca',

|

project('Alpaca',

|

||||||

version: '0.2.0',

|

version: '0.2.2',

|

||||||

meson_version: '>= 0.62.0',

|

meson_version: '>= 0.62.0',

|

||||||

default_options: [ 'warning_level=2', 'werror=false', ],

|

default_options: [ 'warning_level=2', 'werror=false', ],

|

||||||

)

|

)

|

||||||

|

|||||||

@@ -0,0 +1 @@

|

|||||||

|

ru

|

||||||

112

po/ru.po

Normal file


@@ -0,0 +1,112 @@

|

|||||||

|

msgid ""

|

||||||

|

msgstr ""

|

||||||

|

"Project-Id-Version: \n"

|

||||||

|

"POT-Creation-Date: 2024-05-16 19:29+0800\n"

|

||||||

|

"PO-Revision-Date: 2024-05-16 19:59+0800\n"

|

||||||

|

"Last-Translator: \n"

|

||||||

|

"Language-Team: \n"

|

||||||

|

"Language: ru_RU\n"

|

||||||

|

"MIME-Version: 1.0\n"

|

||||||

|

"Content-Type: text/plain; charset=UTF-8\n"

|

||||||

|

"Content-Transfer-Encoding: 8bit\n"

|

||||||

|

"X-Generator: Poedit 3.4.2\n"

|

||||||

|

"X-Poedit-Basepath: ../src\n"

|

||||||

|

"X-Poedit-SearchPath-0: .\n"

|

||||||

|

|

||||||

|

#: gtk/help-overlay.ui:11

|

||||||

|

msgctxt "shortcut window"

|

||||||

|

msgid "General"

|

||||||

|

msgstr "Общие"

|

||||||

|

|

||||||

|

#: gtk/help-overlay.ui:14

|

||||||

|

msgctxt "shortcut window"

|

||||||

|

msgid "Show Shortcuts"

|

||||||

|

msgstr "Показывать ярлыки"

|

||||||

|

|

||||||

|

#: gtk/help-overlay.ui:20

|

||||||

|

msgctxt "shortcut window"

|

||||||

|

msgid "Quit"

|

||||||

|

msgstr "Выйти"

|

||||||

|

|

||||||

|

#: window.ui:30

|

||||||

|

msgid "Manage models"

|

||||||

|

msgstr "Управление моделями"

|

||||||

|

|

||||||

|

#: window.ui:44

|

||||||

|

msgid "Menu"

|

||||||

|

msgstr "Меню"

|

||||||

|

|

||||||

|

#: window.ui:106

|

||||||

|

msgid "Send"

|

||||||

|

msgstr "Отправить"

|

||||||

|

|

||||||

|

#: window.ui:137

|

||||||

|

msgid "Pulling Model"

|

||||||

|

msgstr "Тянущая модель"

|

||||||

|

|

||||||

|

#: window.ui:218

|

||||||

|

msgid "Previous"

|

||||||

|

msgstr "Предыдущий"

|

||||||

|

|

||||||

|

#: window.ui:233

|

||||||

|

msgid "Next"

|

||||||

|

msgstr "Следующий"

|

||||||

|

|

||||||

|

#: window.ui:259

|

||||||

|

msgid "Welcome to Alpaca"

|

||||||

|

msgstr "Добро пожаловать в Alpaca"

|

||||||

|

|

||||||

|

#: window.ui:260

|

||||||

|

msgid ""

|

||||||

|

"To get started, please ensure you have an Ollama instance set up. You can "

|

||||||

|

"either run Ollama locally on your machine or connect to a remote instance."

|

||||||

|

msgstr ""

|

||||||

|

"Для начала, пожалуйста, убедитесь, что у вас настроен экземпляр Ollama. Вы "

|

||||||

|

"можете либо запустить Ollama локально на своем компьютере, либо "

|

||||||

|

"подключиться к удаленному экземпляру."

|

||||||

|

|

||||||

|

#: window.ui:263

|

||||||

|

msgid "Ollama Website"

|

||||||

|

msgstr "Веб-сайт Ollama"

|

||||||

|

|

||||||

|

#: window.ui:279

|

||||||

|

msgid "Disclaimer"

|

||||||

|

msgstr "Отказ от ответственности"

|

||||||

|

|

||||||

|

#: window.ui:280

|

||||||

|

msgid ""

|

||||||

|

"Alpaca and its developers are not liable for any damages to devices or "

|

||||||

|

"software resulting from the execution of code generated by an AI model. "

|

||||||

|

"Please exercise caution and review the code carefully before running it."

|

||||||

|

msgstr ""

|

||||||

|

"Alpaca и ее разработчики не несут ответственности за любой ущерб, "

|

||||||

|

"причиненный устройствам или программному обеспечению в результате "

|

||||||

|

"выполнения кода, сгенерированного с помощью модели искусственного "

|

||||||

|

"интеллекта. Пожалуйста, будьте осторожны и внимательно ознакомьтесь с кодом "

|

||||||

|

"перед его запуском."

|

||||||

|

|

||||||

|

#: window.ui:292

|

||||||

|

msgid "Setup"

|

||||||

|

msgstr "Установка"

|

||||||

|

|

||||||

|

#: window.ui:293

|

||||||

|

msgid ""

|

||||||

|

"If you are running an Ollama instance locally and haven't modified the "

|

||||||

|

"default ports, you can use the default URL. Otherwise, please enter the URL "

|

||||||

|

"of your Ollama instance."

|

||||||

|

msgstr ""

|

||||||

|

"Если вы запускаете локальный экземпляр Ollama и не изменили порты по "

|

||||||

|

"умолчанию, вы можете использовать URL-адрес по умолчанию. В противном "

|

||||||

|

"случае, пожалуйста, введите URL-адрес вашего экземпляра Ollama."

|

||||||

|

|

||||||

|

#: window.ui:313

|

||||||

|

msgid "_Clear Conversation"

|

||||||

|

msgstr "_Очистить разговор"

|

||||||

|

|

||||||

|

#: window.ui:317

|

||||||

|

msgid "_Change Server"

|

||||||

|

msgstr "_Изменить Сервер"

|

||||||

|

|

||||||

|

#: window.ui:321

|

||||||

|

msgid "_About Alpaca"

|

||||||

|

msgstr "_О Программе"

|

||||||

@@ -7,9 +7,9 @@ def simple_get(connection_url:str) -> dict:

|

|||||||

if response.status_code == 200:

|

if response.status_code == 200:

|

||||||

return {"status": "ok", "text": response.text, "status_code": response.status_code}

|

return {"status": "ok", "text": response.text, "status_code": response.status_code}

|

||||||

else:

|

else:

|

||||||

return {"status": "error", "text": f"Failed to connect to {connection_url}. Status code: {response.status_code}", "status_code": response.status_code}

|

return {"status": "error", "status_code": response.status_code}

|

||||||

except Exception as e:

|

except Exception as e:

|

||||||

return {"status": "error", "text": f"An error occurred while trying to connect to {connection_url}", "status_code": 0}

|

return {"status": "error", "status_code": 0}

|

||||||

|

|

||||||

def simple_delete(connection_url:str, data) -> dict:

|

def simple_delete(connection_url:str, data) -> dict:

|

||||||

try:

|

try:

|

||||||

@@ -19,7 +19,7 @@ def simple_delete(connection_url:str, data) -> dict:

|

|||||||

else:

|

else:

|

||||||

return {"status": "error", "text": "Failed to delete", "status_code": response.status_code}

|

return {"status": "error", "text": "Failed to delete", "status_code": response.status_code}

|

||||||

except Exception as e:

|

except Exception as e:

|

||||||

return {"status": "error", "text": f"An error occurred while trying to connect to {connection_url}", "status_code": 0}

|

return {"status": "error", "status_code": 0}

|

||||||

|

|

||||||

def stream_post(connection_url:str, data, callback:callable) -> dict:

|

def stream_post(connection_url:str, data, callback:callable) -> dict:

|

||||||

try:

|

try:

|

||||||

@@ -31,11 +31,11 @@ def stream_post(connection_url:str, data, callback:callable) -> dict:

|

|||||||

for line in response.iter_lines():

|

for line in response.iter_lines():

|

||||||

if line:

|

if line:

|

||||||

callback(json.loads(line.decode("utf-8")))

|

callback(json.loads(line.decode("utf-8")))

|

||||||

return {"status": "ok", "text": "All good", "status_code": response.status_code}

|

return {"status": "ok", "status_code": response.status_code}

|

||||||

else:

|

else:

|

||||||

return {"status": "error", "text": "Error posting data", "status_code": response.status_code}

|

return {"status": "error", "status_code": response.status_code}

|

||||||

except Exception as e:

|

except Exception as e:

|

||||||

return {"status": "error", "text": f"An error occurred while trying to connect to {connection_url}", "status_code": 0}

|

return {"status": "error", "status_code": 0}

|

||||||

|

|

||||||

|

|

||||||

from time import sleep

|

from time import sleep

|

||||||
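The `stream_post` hunk above keeps the same core loop: each non-empty line of the response is decoded as UTF-8, parsed as JSON, and handed to the callback. A minimal sketch of that loop without the network part is shown below. `dispatch_json_lines` is a hypothetical helper name, and the byte strings are made-up sample data rather than real Ollama output.

```python
import json


def dispatch_json_lines(lines, callback):
    """Parse each non-empty line as JSON and pass the result to callback.

    `lines` is any iterable of bytes, standing in for response.iter_lines().
    Returns the number of lines that were dispatched.
    """
    handled = 0
    for line in lines:
        if line:  # skip empty lines, as the original loop does
            callback(json.loads(line.decode("utf-8")))
            handled += 1
    return handled


# Illustrative input only: two JSON lines separated by an empty line.
received = []
count = dispatch_json_lines(
    [b'{"status": "pulling"}', b"", b'{"status": "success"}'],
    received.append,
)
```

Keeping the parsing separate from the HTTP call like this would make the loop testable on its own, though the patch itself keeps both in one function.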

@@ -58,4 +58,4 @@ def stream_post_fake(connection_url:str, data, callback:callable) -> dict:

|

|||||||

sleep(.1)

|

sleep(.1)

|

||||||

data = {"status": msg}

|

data = {"status": msg}

|

||||||

callback(data)

|

callback(data)

|

||||||

return {"status": "ok", "text": "All good", "status_code": 200}

|

return {"status": "ok", "status_code": 200}

|

||||||

|

|||||||

@@ -48,9 +48,10 @@ class AlpacaApplication(Adw.Application):

|

|||||||

application_name='Alpaca',

|

application_name='Alpaca',

|

||||||

application_icon='com.jeffser.Alpaca',

|

application_icon='com.jeffser.Alpaca',

|

||||||

developer_name='Jeffry Samuel Eduarte Rojas',

|

developer_name='Jeffry Samuel Eduarte Rojas',

|

||||||

version='0.2.0',

|

version='0.2.2',

|

||||||

developers=['Jeffser https://jeffser.com'],

|

developers=['Jeffser https://jeffser.com'],

|

||||||

designers=['Jeffser https://jeffser.com'],

|

designers=['Jeffser https://jeffser.com'],

|

||||||

|

translator_credits='Alex K (Russian) https://github.com/alexkdeveloper',

|

||||||

copyright='© 2024 Jeffser',

|

copyright='© 2024 Jeffser',

|

||||||

issue_url='https://github.com/Jeffser/Alpaca/issues')

|

issue_url='https://github.com/Jeffser/Alpaca/issues')

|

||||||

about.present()

|

about.present()

|

||||||

|

|||||||

128

src/window.py


@@ -18,7 +18,6 @@

|

|||||||

# SPDX-License-Identifier: GPL-3.0-or-later

|

# SPDX-License-Identifier: GPL-3.0-or-later

|

||||||

|

|

||||||

import gi

|

import gi

|

||||||

gi.require_version("Soup", "3.0")

|

|

||||||

from gi.repository import Adw, Gtk, GLib

|

from gi.repository import Adw, Gtk, GLib

|

||||||

import json, requests, threading, os

|

import json, requests, threading, os

|

||||||

from datetime import datetime

|

from datetime import datetime

|

||||||

@@ -27,8 +26,8 @@ from .available_models import available_models

|

|||||||

|

|

||||||

@Gtk.Template(resource_path='/com/jeffser/Alpaca/window.ui')

|

@Gtk.Template(resource_path='/com/jeffser/Alpaca/window.ui')

|

||||||

class AlpacaWindow(Adw.ApplicationWindow):

|

class AlpacaWindow(Adw.ApplicationWindow):

|

||||||

|

config_dir = os.path.join(os.getenv("XDG_CONFIG_HOME"), "/", os.path.expanduser("~/.var/app/com.jeffser.Alpaca/config"))

|

||||||

__gtype_name__ = 'AlpacaWindow'

|

__gtype_name__ = 'AlpacaWindow'

|

||||||

|

|

||||||

#Variables

|

#Variables

|

||||||

ollama_url = None

|

ollama_url = None

|

||||||

local_models = []

|

local_models = []

|

||||||
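A note on the `config_dir` line added in this hunk: `os.path.join` discards everything before an absolute component, so the three-part join reduces to the expanded `~/.var/...` path whenever `XDG_CONFIG_HOME` is set, and it raises `TypeError` when the variable is unset, because `os.getenv` then returns `None`. The sketch below assumes the intent is "use `XDG_CONFIG_HOME` when set, otherwise fall back to the Flatpak path". `resolve_config_dir` is a hypothetical helper, not part of the patch, and the sample paths assume a POSIX system.

```python
import os

# Fallback path taken from the diff; expanded at call time.
FALLBACK = "~/.var/app/com.jeffser.Alpaca/config"


def resolve_config_dir(env=None):
    """Return XDG_CONFIG_HOME if set and non-empty, else the fallback path."""
    env = os.environ if env is None else env
    xdg = env.get("XDG_CONFIG_HOME")
    return xdg if xdg else os.path.expanduser(FALLBACK)


# os.path.join restarts at every absolute component, so only the last
# absolute path survives (sample paths are illustrative):
joined = os.path.join(
    "/tmp/xdg", "/", "/home/user/.var/app/com.jeffser.Alpaca/config"
)
```

Passing the environment as a parameter is only there to make the helper easy to exercise; calling `resolve_config_dir()` with no argument reads the real environment.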

@@ -43,7 +42,10 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

|||||||

connection_previous_button = Gtk.Template.Child()

|

connection_previous_button = Gtk.Template.Child()

|

||||||

connection_next_button = Gtk.Template.Child()

|

connection_next_button = Gtk.Template.Child()

|

||||||

connection_url_entry = Gtk.Template.Child()

|

connection_url_entry = Gtk.Template.Child()

|

||||||

overlay = Gtk.Template.Child()

|

main_overlay = Gtk.Template.Child()

|

||||||

|

pull_overlay = Gtk.Template.Child()

|

||||||

|

manage_models_overlay = Gtk.Template.Child()

|

||||||

|

connection_overlay = Gtk.Template.Child()

|

||||||

chat_container = Gtk.Template.Child()

|

chat_container = Gtk.Template.Child()

|

||||||

chat_window = Gtk.Template.Child()

|

chat_window = Gtk.Template.Child()

|

||||||

message_entry = Gtk.Template.Child()

|

message_entry = Gtk.Template.Child()

|

||||||

@@ -59,12 +61,33 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

|||||||

pull_model_status_page = Gtk.Template.Child()

|

pull_model_status_page = Gtk.Template.Child()

|

||||||

pull_model_progress_bar = Gtk.Template.Child()

|

pull_model_progress_bar = Gtk.Template.Child()

|

||||||

|

|

||||||

def show_toast(self, msg:str):

|

toast_messages = {

|

||||||

|

"error": [

|

||||||

|

"An error occurred",

|

||||||

|

"Failed to connect to server",

|

||||||

|

"Could not list local models",

|

||||||

|

"Could not delete model",

|

||||||

|

"Could not pull model"

|

||||||

|

],

|

||||||

|

"info": [

|

||||||

|

"Please select a model before chatting",

|

||||||

|

"Conversation cannot be cleared while receiving a message"

|

||||||

|

],

|

||||||

|

"good": [

|

||||||

|

"Model deleted successfully",

|

||||||

|

"Model pulled successfully"

|

||||||

|

]

|

||||||

|

}

|

||||||

|

|

||||||

|

def show_toast(self, message_type:str, message_id:int, overlay):

|

||||||

|

if message_type not in self.toast_messages or message_id > len(self.toast_messages[message_type] or message_id < 0):

|

||||||

|

message_type = "error"

|

||||||

|

message_id = 0

|

||||||

toast = Adw.Toast(

|

toast = Adw.Toast(

|

||||||

title=msg,

|

title=self.toast_messages[message_type][message_id],

|

||||||

timeout=2

|

timeout=2

|

||||||

)

|

)

|

||||||

self.overlay.add_toast(toast)

|

overlay.add_toast(toast)

|

||||||

|

|

||||||

def show_message(self, msg:str, bot:bool, footer:str=None):

|

def show_message(self, msg:str, bot:bool, footer:str=None):

|

||||||

message_text = Gtk.TextView(

|

message_text = Gtk.TextView(

|

||||||
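The guard in the new `show_toast` has its closing parenthesis in the wrong place: `message_id < 0` ends up inside the `len(...)` call, and the comparison uses `>` rather than `>=`, so an id equal to the list length would still reach the lookup. A minimal sketch of the lookup with an explicit range check follows. `pick_toast_message` is a hypothetical helper, and the table is a subset of the messages from the diff.

```python
# Subset of the toast_messages table from the diff.
TOAST_MESSAGES = {
    "error": ["An error occurred", "Failed to connect to server"],
    "info": ["Please select a model before chatting"],
    "good": ["Model deleted successfully", "Model pulled successfully"],
}


def pick_toast_message(message_type, message_id, messages=TOAST_MESSAGES):
    """Return the requested message, or the generic error for a bad type or id."""
    entries = messages.get(message_type)
    # Reject unknown types, negative ids, and ids past the end of the list.
    if entries is None or not 0 <= message_id < len(entries):
        return messages["error"][0]
    return entries[message_id]
```

For example, `pick_toast_message("good", 1)` returns the "pulled successfully" text, while an out-of-range id such as `pick_toast_message("good", 2)` falls back to the generic error message.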

@@ -92,6 +115,8 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

|||||||

def update_list_local_models(self):

|

def update_list_local_models(self):

|

||||||

self.local_models = []

|

self.local_models = []

|

||||||

response = simple_get(self.ollama_url + "/api/tags")

|

response = simple_get(self.ollama_url + "/api/tags")

|

||||||

|

for i in range(self.model_string_list.get_n_items() -1, -1, -1):

|

||||||

|

self.model_string_list.remove(i)

|

||||||

if response['status'] == 'ok':

|

if response['status'] == 'ok':

|

||||||

for model in json.loads(response['text'])['models']:

|

for model in json.loads(response['text'])['models']:

|

||||||

self.model_string_list.append(model["name"])

|

self.model_string_list.append(model["name"])

|

||||||
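The loop added to `update_list_local_models` walks from the last index down to 0 when clearing `model_string_list`. The reason for going backwards can be shown with a plain Python list standing in for the GTK string list: removing by index shifts the remaining items down, so an upward walk runs past the end. The function names and model names below are illustrative only.

```python
def remove_all_reverse(items):
    """Empty a list by index, walking from the last index down to 0."""
    for i in range(len(items) - 1, -1, -1):
        del items[i]


def remove_all_forward(items):
    """Same loop walking upward; later indices become invalid as items shift."""
    for i in range(len(items)):
        del items[i]


models = ["llama3", "mistral", "phi3"]
remove_all_reverse(models)  # every index is still valid when it is visited

forward_failed = False
try:
    remove_all_forward(["llama3", "mistral", "phi3"])
except IndexError:
    # After two removals only one item is left, so index 2 no longer exists.
    forward_failed = True
```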

@@ -99,29 +124,19 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

|||||||

self.model_drop_down.set_selected(0)

|

self.model_drop_down.set_selected(0)

|

||||||

return

|

return

|

||||||

else:

|

else:

|

||||||

self.show_toast(response['text'])

|

|

||||||

self.show_connection_dialog(True)

|

self.show_connection_dialog(True)

|

||||||

|

self.show_toast("error", 2, self.connection_overlay)

|

||||||

|

|

||||||

def verify_connection(self):

|

def verify_connection(self):

|

||||||

response = simple_get(self.ollama_url)

|

response = simple_get(self.ollama_url)

|

||||||

if response['status'] == 'ok':

|

if response['status'] == 'ok':

|

||||||

if "Ollama is running" in response['text']:

|

if "Ollama is running" in response['text']:

|

||||||

with open("server.conf", "w+") as f: f.write(self.ollama_url)

|

with open(os.path.join(self.config_dir, "server.conf"), "w+") as f: f.write(self.ollama_url)

|

||||||

self.message_entry.grab_focus_without_selecting()

|

self.message_entry.grab_focus_without_selecting()

|

||||||

self.update_list_local_models()

|

self.update_list_local_models()

|

||||||

return True

|

return True

|

||||||

else:

|

|

||||||

response = {"status": "error", "text": f"Unexpected response from {self.ollama_url} : {response['text']}"}

|

|

||||||

self.show_toast(response['text'])

|

|

||||||

return False

|

return False

|

||||||

|

|

||||||

def dialog_response(self, dialog, task):

|

|

||||||

self.ollama_url = dialog.get_extra_child().get_text()

|

|

||||||

if dialog.choose_finish(task) == "login":

|

|

||||||

self.verify_connection()

|

|

||||||

else:

|

|

||||||

self.destroy()

|

|

||||||

|

|

||||||

def update_bot_message(self, data):

|

def update_bot_message(self, data):

|

||||||

if data['done']:

|

if data['done']:

|

||||||

formated_datetime = datetime.now().strftime("%Y/%m/%d %H:%M")

|

formated_datetime = datetime.now().strftime("%Y/%m/%d %H:%M")

|

||||||

@@ -147,7 +162,7 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

|||||||

def send_message(self):

|

def send_message(self):

|

||||||

current_model = self.model_drop_down.get_selected_item()

|

current_model = self.model_drop_down.get_selected_item()

|

||||||

if current_model is None:

|

if current_model is None:

|

||||||

GLib.idle_add(self.show_toast, "Please pull a model")

|

GLib.idle_add(self.show_toast, "info", 0, self.main_overlay)

|

||||||

return

|

return

|

||||||

formated_datetime = datetime.now().strftime("%Y/%m/%d %H:%M")

|

formated_datetime = datetime.now().strftime("%Y/%m/%d %H:%M")

|

||||||

self.chats["chats"][self.current_chat_id]["messages"].append({

|

self.chats["chats"][self.current_chat_id]["messages"].append({

|

||||||

@@ -169,8 +184,8 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

|||||||

GLib.idle_add(self.send_button.set_sensitive, True)

|

GLib.idle_add(self.send_button.set_sensitive, True)

|

||||||

GLib.idle_add(self.message_entry.set_sensitive, True)

|

GLib.idle_add(self.message_entry.set_sensitive, True)

|

||||||

if response['status'] == 'error':

|

if response['status'] == 'error':

|

||||||

self.show_toast(f"{response['text']}")

|

GLib.idle_add(self.show_toast, 'error', 1, self.connection_overlay)

|

||||||

self.show_connection_dialog(True)

|

GLib.idle_add(self.show_connection_dialog, True)

|

||||||

|

|

||||||

def send_button_activate(self, button):

|

def send_button_activate(self, button):

|

||||||

if not self.message_entry.get_text(): return

|

if not self.message_entry.get_text(): return

|

||||||

@@ -180,20 +195,17 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

|||||||

def delete_model(self, dialog, task, model_name, button):

|

def delete_model(self, dialog, task, model_name, button):

|

||||||

if dialog.choose_finish(task) == "delete":

|

if dialog.choose_finish(task) == "delete":

|

||||||

response = simple_delete(self.ollama_url + "/api/delete", data={"name": model_name})

|

response = simple_delete(self.ollama_url + "/api/delete", data={"name": model_name})

|

||||||

print(response)

|

|

||||||

if response['status'] == 'ok':

|

if response['status'] == 'ok':

|

||||||

button.set_icon_name("folder-download-symbolic")

|

button.set_icon_name("folder-download-symbolic")

|

||||||

button.set_css_classes(["accent", "pull"])

|

button.set_css_classes(["accent", "pull"])

|

||||||

self.show_toast(f"Model '{model_name}' deleted successfully")

|

self.show_toast("good", 0, self.manage_models_overlay)

|

||||||

for i in range(self.model_string_list.get_n_items()):

|

for i in range(self.model_string_list.get_n_items()):

|

||||||

if self.model_string_list.get_string(i) == model_name:

|

if self.model_string_list.get_string(i) == model_name:

|

||||||

self.model_string_list.remove(i)

|

self.model_string_list.remove(i)

|

||||||

self.model_drop_down.set_selected(0)

|

self.model_drop_down.set_selected(0)

|

||||||

break

|

break

|

||||||

elif response['status_code'] == '404':

|

|

||||||

self.show_toast(f"Delete request failed: Model was not found")

|

|

||||||

else:

|

else:

|

||||||

self.show_toast(response['text'])

|

self.show_toast("error", 3, self.connection_overlay)

|

||||||

self.manage_models_dialog.close()

|

self.manage_models_dialog.close()

|

||||||

self.show_connection_dialog(True)

|

self.show_connection_dialog(True)

|

||||||

|

|

||||||

@@ -218,11 +230,12 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

|||||||

GLib.idle_add(button.set_icon_name, "user-trash-symbolic")

|

GLib.idle_add(button.set_icon_name, "user-trash-symbolic")

|

||||||

GLib.idle_add(button.set_css_classes, ["error", "delete"])

|

GLib.idle_add(button.set_css_classes, ["error", "delete"])

|

||||||

GLib.idle_add(self.model_string_list.append, model_name)

|

GLib.idle_add(self.model_string_list.append, model_name)

|

||||||

GLib.idle_add(self.show_toast, f"Model '{model_name}' pulled successfully")

|

GLib.idle_add(self.show_toast, "good", 1, self.manage_models_overlay)

|

||||||

else:

|

else:

|

||||||

GLib.idle_add(self.show_toast, response['text'])

|

GLib.idle_add(self.show_toast, "error", 4, self.connection_overlay)

|

||||||

GLib.idle_add(self.manage_models_dialog.close)

|

GLib.idle_add(self.manage_models_dialog.close)

|

||||||

GLib.idle_add(self.show_connection_dialog, True)

|

GLib.idle_add(self.show_connection_dialog, True)

|

||||||

|

print("pull fail")

|

||||||

|

|

||||||

|

|

||||||

def pull_model_start(self, dialog, task, model_name, button):

|

def pull_model_start(self, dialog, task, model_name, button):

|

||||||

@@ -265,9 +278,11 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

|||||||

self.model_list_box.append(model)

|

self.model_list_box.append(model)

|

||||||

|

|

||||||

def manage_models_button_activate(self, button):

|

def manage_models_button_activate(self, button):

|

||||||

|

|

||||||

self.manage_models_dialog.present(self)

|

self.manage_models_dialog.present(self)

|

||||||

self.update_list_available_models()

|

self.update_list_available_models()

|

||||||

|

|

||||||

|

|

||||||

def connection_carousel_page_changed(self, carousel, index):

|

def connection_carousel_page_changed(self, carousel, index):

|

||||||

if index == 0: self.connection_previous_button.set_sensitive(False)

|

if index == 0: self.connection_previous_button.set_sensitive(False)

|

||||||

else: self.connection_previous_button.set_sensitive(True)

|

else: self.connection_previous_button.set_sensitive(True)

|

||||||

@@ -284,10 +299,12 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

|||||||

if self.verify_connection():

|

if self.verify_connection():

|

||||||

self.connection_dialog.force_close()

|

self.connection_dialog.force_close()

|

||||||

else:

|

else:

|

||||||

show_connection_dialog(True)

|

self.show_connection_dialog(True)

|

||||||

|

self.show_toast("error", 1, self.connection_overlay)

|

||||||

|

|

||||||

def show_connection_dialog(self, error:bool=False):

|

def show_connection_dialog(self, error:bool=False):

|

||||||

self.connection_carousel.scroll_to(self.connection_carousel.get_nth_page(self.connection_carousel.get_n_pages()-1),False)

|

self.connection_carousel.scroll_to(self.connection_carousel.get_nth_page(self.connection_carousel.get_n_pages()-1),False)

|

||||||

|

if self.ollama_url is not None: self.connection_url_entry.set_text(self.ollama_url)

|

||||||

if error: self.connection_url_entry.set_css_classes(["error"])

|

if error: self.connection_url_entry.set_css_classes(["error"])

|

||||||

else: self.connection_url_entry.set_css_classes([])

|

else: self.connection_url_entry.set_css_classes([])

|

||||||

self.connection_dialog.present(self)

|

self.connection_dialog.present(self)

|

||||||

@@ -303,7 +320,7 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

|||||||

|

|

||||||

def clear_conversation_dialog(self):

|

def clear_conversation_dialog(self):

|

||||||

if self.bot_message is not None:

|

if self.bot_message is not None:

|

||||||

self.show_toast("Conversation cannot be cleared while receiving a message")

|

self.show_toast("info", 1, self.main_overlay)

|

||||||

return

|

return

|

||||||

dialog = Adw.AlertDialog(

|

dialog = Adw.AlertDialog(

|

||||||

heading=f"Clear Conversation",

|

heading=f"Clear Conversation",

|

||||||

@@ -320,14 +337,14 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

|||||||

)

|

)

|

||||||

|

|

||||||

def save_history(self):

|

def save_history(self):

|

||||||

with open("chats.json", "w+") as f:

|

with open(os.path.join(self.config_dir, "chats.json"), "w+") as f:

|

||||||

json.dump(self.chats, f, indent=4)

|

json.dump(self.chats, f, indent=4)

|

||||||

|

|

||||||

def load_history(self):

|

def load_history(self):

|

||||||

if os.path.exists("chats.json"):

|

if os.path.exists(os.path.join(self.config_dir, "chats.json")):

|

||||||

self.clear_conversation()

|

self.clear_conversation()

|

||||||

try:

|

try:

|

||||||

with open("chats.json", "r") as f:

|

with open(os.path.join(self.config_dir, "chats.json"), "r") as f:

|

||||||

self.chats = json.load(f)

|

self.chats = json.load(f)

|

||||||

except Exception as e:

|

except Exception as e:

|

||||||

self.chats = {"chats": {"0": {"messages": []}}}

|

self.chats = {"chats": {"0": {"messages": []}}}

|

||||||

@@ -338,6 +355,42 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

|||||||

self.show_message(message['content'], True, f"\n\n<small>{message['model']}\t|\t{message['date']}</small>")

|

self.show_message(message['content'], True, f"\n\n<small>{message['model']}\t|\t{message['date']}</small>")

|

||||||

self.bot_message = None

|

self.bot_message = None

|

||||||

|

|

||||||

|

def closing_connection_dialog_response(self, dialog, task):

|

||||||

|

result = dialog.choose_finish(task)

|

||||||

|

if result == "cancel": return

|

||||||

|

if result == "save":

|

||||||

|

self.ollama_url = self.connection_url_entry.get_text()

|

||||||

|

elif result == "discard" and self.ollama_url is None: self.destroy()

|

||||||

|

self.connection_dialog.force_close()

|

||||||

|

if self.ollama_url is None or self.verify_connection() == False:

|

||||||

|

self.show_connection_dialog(True)

|

||||||

|

self.show_toast("error", 1, self.connection_overlay)

|

||||||

|

|

||||||

|

|

||||||

|

def closing_connection_dialog(self, dialog):

|

||||||

|

if self.ollama_url is None: self.destroy()

|

||||||

|

if self.ollama_url == self.connection_url_entry.get_text():

|

||||||

|

self.connection_dialog.force_close()

|

||||||

|

if self.ollama_url is None or self.verify_connection() == False:

|

||||||

|

self.show_connection_dialog(True)

|

||||||

|

self.show_toast("error", 1, self.connection_overlay)

|

||||||

|

return

|

||||||

|

dialog = Adw.AlertDialog(

|

||||||

|

heading=f"Save Changes?",

|

||||||

|

body=f"Do you want to save the URL change?",

|

||||||

|

close_response="cancel"

|

||||||

|

)

|

||||||

|

dialog.add_response("cancel", "Cancel")

|

||||||

|

dialog.add_response("discard", "Discard")

|

||||||

|

dialog.add_response("save", "Save")

|

||||||

|

dialog.set_response_appearance("discard", Adw.ResponseAppearance.DESTRUCTIVE)

|

||||||

|

dialog.set_response_appearance("save", Adw.ResponseAppearance.SUGGESTED)

|

||||||

|

dialog.choose(

|

||||||

|

parent = self,

|

||||||

|

cancellable = None,

|

||||||

|

callback = self.closing_connection_dialog_response

|

||||||

|

)

|

||||||

|

|

||||||

def __init__(self, **kwargs):

|

def __init__(self, **kwargs):

|

||||||

super().__init__(**kwargs)

|

super().__init__(**kwargs)

|

||||||

self.manage_models_button.connect("clicked", self.manage_models_button_activate)

|

self.manage_models_button.connect("clicked", self.manage_models_button_activate)

|

||||||

@@ -348,13 +401,14 @@ class AlpacaWindow(Adw.ApplicationWindow):

|

|||||||

self.connection_previous_button.connect("clicked", self.connection_previous_button_activate)

|

self.connection_previous_button.connect("clicked", self.connection_previous_button_activate)

|

||||||

self.connection_next_button.connect("clicked", self.connection_next_button_activate)

|

self.connection_next_button.connect("clicked", self.connection_next_button_activate)

|

||||||

self.connection_url_entry.connect("changed", lambda entry: entry.set_css_classes([]))

|

self.connection_url_entry.connect("changed", lambda entry: entry.set_css_classes([]))

|

||||||

self.connection_dialog.connect("close-attempt", lambda dialog: self.destroy())

|

self.connection_dialog.connect("close-attempt", self.closing_connection_dialog)

|

||||||

self.load_history()

|

self.load_history()

|

||||||

if os.path.exists("server.conf"):

|

if os.path.exists(os.path.join(self.config_dir, "server.conf")):

|

||||||

with open("server.conf", "r") as f:

|

with open(os.path.join(self.config_dir, "server.conf"), "r") as f:

|

||||||

self.ollama_url = f.read()

|

self.ollama_url = f.read()

|

||||||

if self.verify_connection() is False: self.show_connection_dialog()

|

if self.verify_connection() is False: self.show_connection_dialog(True)

|

||||||

else: self.connection_dialog.present(self)

|

else: self.connection_dialog.present(self)

|

||||||

|

self.show_toast("funny", True, self.manage_models_overlay)

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

@@ -5,7 +5,7 @@

|

|||||||

<template class="AlpacaWindow" parent="AdwApplicationWindow">

|

<template class="AlpacaWindow" parent="AdwApplicationWindow">

|

||||||

<property name="resizable">True</property>

|

<property name="resizable">True</property>

|

||||||

<property name="content">

|

<property name="content">

|

||||||

<object class="AdwToastOverlay" id="overlay">

|

<object class="AdwToastOverlay" id="main_overlay">

|

||||||

<child>

|

<child>

|

||||||

<object class="AdwToolbarView">

|

<object class="AdwToolbarView">

|

||||||

<child type="top">

|

<child type="top">

|

||||||

@@ -118,9 +118,12 @@

|

|||||||

</child>

|

</child>

|

||||||

</object>

|

</object>

|

||||||

</property>

|

</property>

|

||||||

|

|

||||||

<object class="AdwDialog" id="pull_model_dialog">

|

<object class="AdwDialog" id="pull_model_dialog">

|

||||||

<property name="can-close">false</property>

|

<property name="can-close">false</property>

|

||||||

<property name="width-request">400</property>

|

<property name="width-request">400</property>

|

||||||

|

<child>

|

||||||

|

<object class="AdwToastOverlay" id="pull_overlay">

|

||||||

<child>

|

<child>

|

||||||

<object class="AdwToolbarView">

|

<object class="AdwToolbarView">

|

||||||

<child>

|

<child>

|

||||||

@@ -142,10 +145,15 @@

|

|||||||

</object>

|

</object>

|

||||||

</child>

|

</child>

|

||||||

</object>

|

</object>

|

||||||

|

</child>

|

||||||

|

</object>

|

||||||

|

|

||||||

<object class="AdwDialog" id="manage_models_dialog">

|

<object class="AdwDialog" id="manage_models_dialog">

|

||||||

<property name="can-close">true</property>

|

<property name="can-close">true</property>

|

||||||

<property name="width-request">400</property>

|

<property name="width-request">400</property>

|

||||||

<property name="height-request">600</property>

|

<property name="height-request">600</property>

|

||||||

|

<child>

|

||||||

|

<object class="AdwToastOverlay" id="manage_models_overlay">

|

||||||

<child>

|

<child>

|

||||||

<object class="AdwToolbarView">

|

<object class="AdwToolbarView">

|

||||||

<child type="top">

|

<child type="top">

|

||||||

@@ -184,10 +192,15 @@

|

|||||||

</object>

|

</object>

|

||||||

</child>

|

</child>

|

||||||

</object>

|

</object>

|

||||||

|

</child>

|

||||||

|

</object>

|

||||||

|

|

||||||

<object class="AdwDialog" id="connection_dialog">

|

<object class="AdwDialog" id="connection_dialog">

|

||||||

<property name="can-close">false</property>

|

<property name="can-close">false</property>

|

||||||

<property name="width-request">450</property>

|

<property name="width-request">450</property>

|

||||||

<property name="height-request">450</property>

|

<property name="height-request">450</property>

|

||||||

|

<child>

|

||||||

|

<object class="AdwToastOverlay" id="connection_overlay">

|

||||||

<child>

|

<child>

|

||||||

<object class="AdwToolbarView">

|

<object class="AdwToolbarView">

|

||||||

<child type="top">

|

<child type="top">

|

||||||

@@ -225,8 +238,8 @@

|

|||||||

</object>

|

</object>

|

||||||

</child>

|

</child>

|

||||||

</object>

|

</object>

|

||||||

|

|

||||||

</child>

|

</child>

|

||||||

|

|

||||||

<child>

|

<child>

|

||||||

<object class="AdwCarousel" id="connection_carousel">

|

<object class="AdwCarousel" id="connection_carousel">

|

||||||

<property name="hexpand">true</property>

|

<property name="hexpand">true</property>

|

||||||

@@ -290,6 +303,9 @@

|

|||||||

</object>

|

</object>

|

||||||

</child>

|

</child>

|

||||||

</object>

|

</object>

|

||||||

|

</child>

|

||||||

|

</object>

|

||||||

|

|

||||||

</template>

|

</template>

|

||||||

<menu id="primary_menu">

|

<menu id="primary_menu">

|

||||||

<section>

|

<section>

|

||||||

|

|||||||
