Compare commits

30 Commits

| SHA1 |
|---|
| 5e7d590447 |
| ed4fbc7950 |
| 07994db0a5 |
| 2290105ac1 |
| 3b695031bc |
| 83cb2c3b90 |
| e4360925b6 |
| 425e1b0211 |
| 529687ffdb |
| 34e3511d62 |
| 70e2c81eff |
| d8ba1f5696 |
| b21f7490ec |
| 33eed32a15 |
| b9887d9286 |
| d1fbdad486 |
| 28d0860522 |
| a4981b8e9c |
| 76486da3d4 |
| c7303cd278 |
| 5866c5d4fc |
| 190bf7017f |
| 43b2e469ef |
| 0614ddc39b |
| d813a54a64 |
| 6c9e7d4bdb |
| f24b56cc1c |
| 8f2bbf2501 |
| 6869197693 |
| 0feb9ac700 |

32

README.md

@@ -1,40 +1,44 @@

|

||||

<img src="https://jeffser.com/images/alpaca/logo.svg">

|

||||

|

||||

# Alpaca

|

||||

|

||||

An [Ollama](https://github.com/ollama/ollama) client made with GTK4 and Adwaita.

|

||||

|

||||

## Disclaimer

|

||||

|

||||

This project is not affiliated at all with Ollama, I'm not responsible for any damages to your device or software caused by running code given by any models.

|

||||

|

||||

## ‼️I NEED AN ICON‼️

|

||||

I'm not a graphic designer, it would mean the world to me if someone could make a [GNOME icon](https://developer.gnome.org/hig/guidelines/app-icons.html) for this app.

|

||||

|

||||

## ⚠️THIS IS UNDER DEVELOPMENT⚠️

|

||||

This is my first GTK4 / Adwaita / Python app, so it might crash and some features are still under development, please report any errors if you can, thank you!

|

||||

|

||||

---

|

||||

|

||||

> [!WARNING]

|

||||

> This project is not affiliated at all with Ollama, I'm not responsible for any damages to your device or software caused by running code given by any models.

|

||||

|

||||

> [!important]

|

||||

> This is my first GTK4 / Adwaita / Python app, so it might crash and some features are still under development, please report any errors if you can, thank you!

|

||||

|

||||

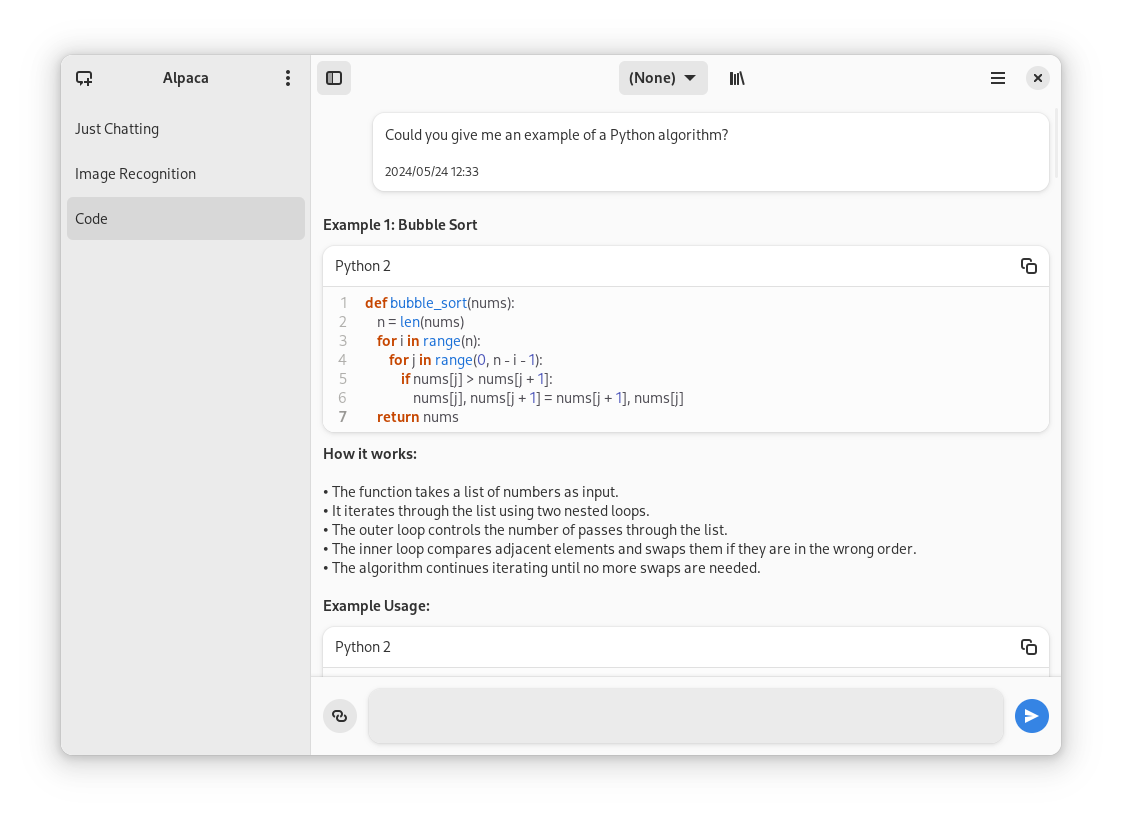

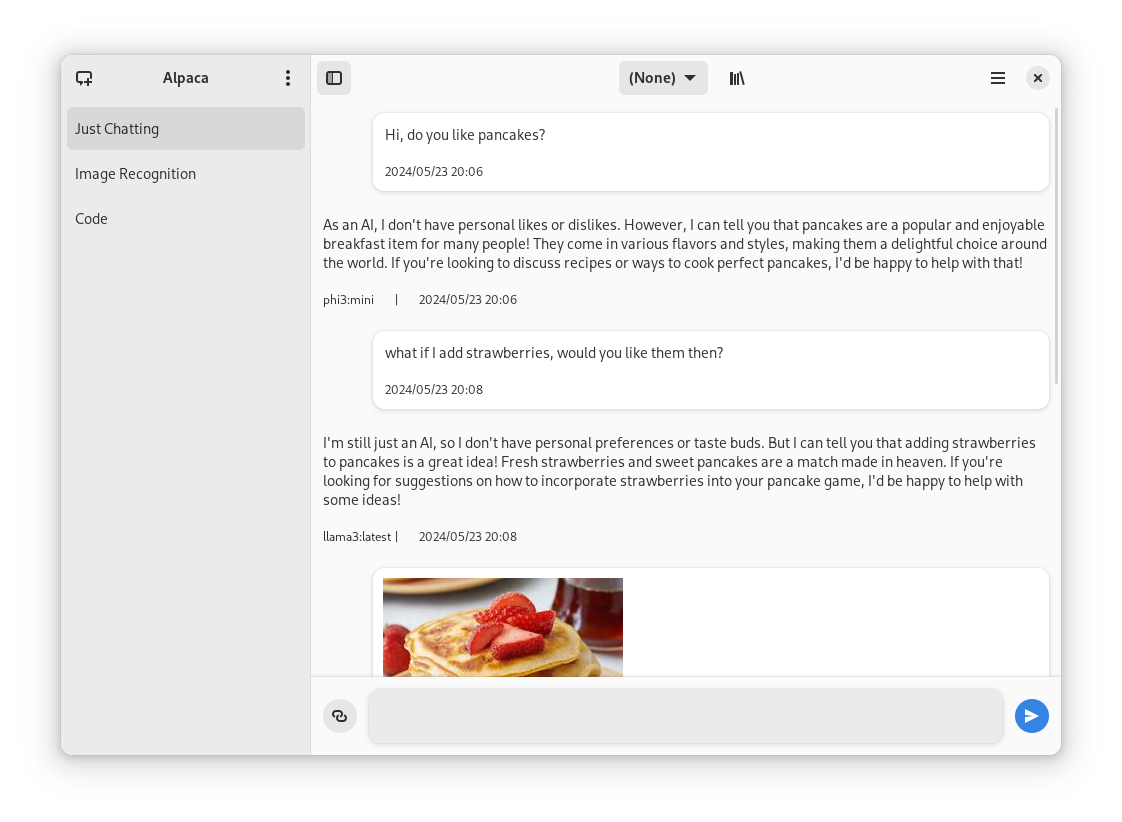

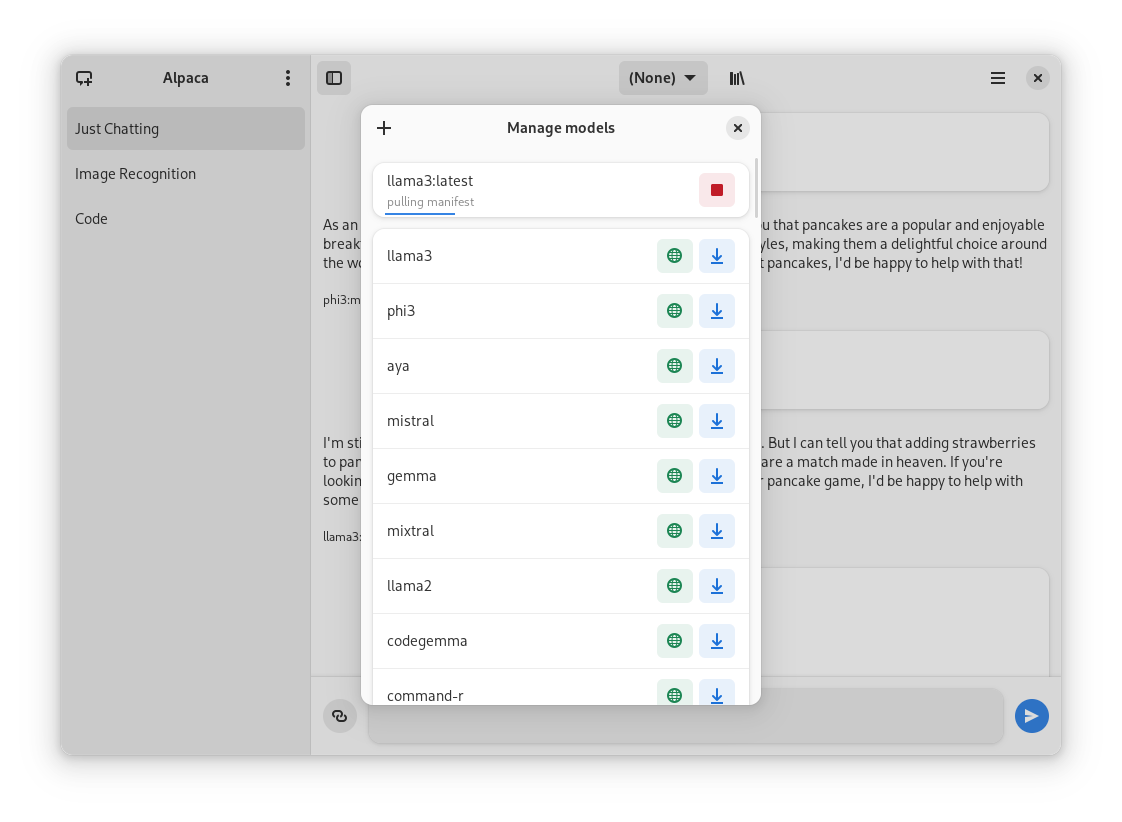

## Features!

|

||||

- Talk to multiple models in the same conversation

|

||||

- Pull and delete models from the app

|

||||

|

||||

## Future features!

|

||||

- Persistent conversations

|

||||

- Multiple conversations

|

||||

- Image / document recognition

|

||||

- Notifications

|

||||

- Code highlighting

|

||||

|

||||

## Screenies

|

||||

|

||||

|

||||

|

||||

Login to Ollama instance | Chatting with models | Managing models

|

||||

:-------------------------:|:-------------------------:|:-------------------------:

|

||||

|  |

|

||||

|

||||

## Preview

|

||||

1. Clone repo using Gnome Builder

|

||||

2. Press the `run` button

|

||||

|

||||

## Instalation

|

||||

1. Clone repo using Gnome Builder

|

||||

2. Build the app using the `build` button

|

||||

3. Prepare the file using the `install` button (it doesn't actually install it, idk)

|

||||

4. Then press the `export` button, it will export a `com.jeffser.Alpaca.flatpak` file, you can install it just by opening it

|

||||

1. Go to the `releases` page

|

||||

2. Download the latest flatpak package

|

||||

3. Open it

|

||||

|

||||

## Usage

|

||||

- You'll need an Ollama instance, I recommend using the [Docker image](https://ollama.com/blog/ollama-is-now-available-as-an-official-docker-image)

|

||||

|

||||

```
@@ -23,6 +23,54 @@
"*.a"
],
"modules" : [
{
"name": "python3-requests",
"buildsystem": "simple",
"build-commands": [
"pip3 install --verbose --exists-action=i --no-index --find-links=\"file://${PWD}\" --prefix=${FLATPAK_DEST} \"requests\" --no-build-isolation"
],
"sources": [
{
"type": "file",
"url": "https://files.pythonhosted.org/packages/ba/06/a07f096c664aeb9f01624f858c3add0a4e913d6c96257acb4fce61e7de14/certifi-2024.2.2-py3-none-any.whl",
"sha256": "dc383c07b76109f368f6106eee2b593b04a011ea4d55f652c6ca24a754d1cdd1"
},
{
"type": "file",
"url": "https://files.pythonhosted.org/packages/63/09/c1bc53dab74b1816a00d8d030de5bf98f724c52c1635e07681d312f20be8/charset-normalizer-3.3.2.tar.gz",
"sha256": "f30c3cb33b24454a82faecaf01b19c18562b1e89558fb6c56de4d9118a032fd5"
},
{
"type": "file",
"url": "https://files.pythonhosted.org/packages/e5/3e/741d8c82801c347547f8a2a06aa57dbb1992be9e948df2ea0eda2c8b79e8/idna-3.7-py3-none-any.whl",
"sha256": "82fee1fc78add43492d3a1898bfa6d8a904cc97d8427f683ed8e798d07761aa0"
},
{
"type": "file",
"url": "https://files.pythonhosted.org/packages/70/8e/0e2d847013cb52cd35b38c009bb167a1a26b2ce6cd6965bf26b47bc0bf44/requests-2.31.0-py3-none-any.whl",
"sha256": "58cd2187c01e70e6e26505bca751777aa9f2ee0b7f4300988b709f44e013003f"
},
{
"type": "file",
"url": "https://files.pythonhosted.org/packages/a2/73/a68704750a7679d0b6d3ad7aa8d4da8e14e151ae82e6fee774e6e0d05ec8/urllib3-2.2.1-py3-none-any.whl",
"sha256": "450b20ec296a467077128bff42b73080516e71b56ff59a60a02bef2232c4fa9d"
}
]
},
{
"name": "python3-pillow",
"buildsystem": "simple",
"build-commands": [
"pip3 install --verbose --exists-action=i --no-index --find-links=\"file://${PWD}\" --prefix=${FLATPAK_DEST} \"pillow\" --no-build-isolation"
],
"sources": [
{
"type": "file",
"url": "https://files.pythonhosted.org/packages/ef/43/c50c17c5f7d438e836c169e343695534c38c77f60e7c90389bd77981bc21/pillow-10.3.0.tar.gz",
"sha256": "9d2455fbf44c914840c793e89aa82d0e1763a14253a000743719ae5946814b2d"
}
]
},
{
"name" : "alpaca",
"builddir" : true,
```

```
@@ -30,11 +78,9 @@
"sources" : [
{
"type" : "git",
"url" : "https://github.com/Jeffser/Alpaca.git",
"branch": "main"
"url" : "file:///home/tentri/Documents/Alpaca"
}
]
},
"python3-requests.json"
}
]
}
```
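Every `"type": "file"` source in the manifest hunks above pins a `sha256`, and flatpak-builder rejects a download whose checksum does not match. When one of these wheels or tarballs is bumped by hand, it is worth checking the new file locally before editing the manifest. Below is a minimal sketch of that check using only `hashlib` and `pathlib`. The helper names (`sha256_file`, `pinned_sources`, `verify_download`) are hypothetical and not part of the Alpaca repository. Matching files by the last URL segment assumes no two sources in a module share a file name.

```python
import hashlib
from pathlib import Path
from typing import Iterable


def sha256_hex(chunks: Iterable[bytes]) -> str:
    """Hash a stream of byte chunks and return the lowercase hex digest."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def sha256_file(path: Path, chunk_size: int = 1 << 16) -> str:
    """Hash a file in fixed-size chunks so large tarballs are never fully loaded."""
    with open(path, "rb") as fh:
        return sha256_hex(iter(lambda: fh.read(chunk_size), b""))


def pinned_sources(module: dict) -> dict[str, str]:
    """Map each "type": "file" source's file name (last URL segment) to its sha256."""
    return {
        src["url"].rsplit("/", 1)[-1]: src["sha256"]
        for src in module.get("sources", [])
        if src.get("type") == "file"
    }


def verify_download(path: Path, module: dict) -> bool:
    """True if the file's digest equals the one pinned for its name in the module."""
    expected = pinned_sources(module).get(Path(path).name)
    return expected is not None and sha256_file(path) == expected.lower()
```

For example, once the manifest has been parsed with `json.load` and the python3-requests module dict picked out of `modules`, `verify_download(Path("requests-2.31.0-py3-none-any.whl"), module)` would compare a locally downloaded wheel against its pinned digest.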

```
@@ -4,5 +4,5 @@ Exec=alpaca
Icon=com.jeffser.Alpaca
Terminal=false
Type=Application
Categories=GTK;
Categories=Utility;Development;Chat;
StartupNotify=true
```

```
@@ -7,13 +7,14 @@
<name>Alpaca</name>
<summary>An Ollama client</summary>
<description>
<p>Made with GTK4 and Adwaita.</p>
<p><b>Features</b></p>
<p>Chat with multiple AI models</p>
<p>An Ollama client</p>
<p>Features</p>
<ul>
<li>Talk to multiple models in the same conversation</li>
<li>Pull and delete models from the app</li>
</ul>
<p><b>Disclaimer</b></p>
<p>Disclaimer</p>
<p>This project is not affiliated at all with Ollama, I'm not responsible for any damages to your device or software caused by running code given by any models.</p>
</description>
<developer id="tld.vendor">
```

```
@@ -23,28 +24,129 @@
<binary>alpaca</binary>
</provides>
<icon type="stock">com.jeffser.Alpaca</icon>
<categories>
<category>Utility</category>
<category>Development</category>
<category>Chat</category>
</categories>
<branding>
<color type="primary" scheme_preference="light">#ff00ff</color>
<color type="primary" scheme_preference="dark">#993d3d</color>
<color type="primary" scheme_preference="light">#8cdef5</color>
<color type="primary" scheme_preference="dark">#0f2b78</color>
</branding>
<screenshots>
<screenshot type="default">
<image>https://jeffser.com/images/alpaca/screenie1.png</image>
<caption>Login into an Ollama instance</caption>
<caption>Welcome dialog</caption>
</screenshot>
<screenshot type="default">
<screenshot>
<image>https://jeffser.com/images/alpaca/screenie2.png</image>
<caption>A conversation involving multiple models</caption>
</screenshot>
<screenshot type="default">
<screenshot>
<image>https://jeffser.com/images/alpaca/screenie3.png</image>
<caption>Managing models</caption>
</screenshot>
</screenshots>
<content_rating type="oars-1.1">
<content_attribute id="money-purchasing">mild</content_attribute>
</content_rating>
<content_rating type="oars-1.1" />
<url type="bugtracker">https://github.com/Jeffser/Alpaca/issues</url>
<url type="homepage">https://github.com/Jeffser/Alpaca</url>
<url type="donation">https://github.com/sponsors/Jeffser</url>
<releases>
<release version="0.4.0" date="2024-05-17">
<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.4.0</url>
<description>
<p>Big Update</p>
<ul>
<li>Added code highlighting</li>
<li>Added image recognition (llava model)</li>
<li>Added multiline prompt</li>
<li>Fixed some small bugs</li>
<li>General optimization</li>
</ul>
<p>
Please report any errors to the issues page, thank you.
</p>
</description>
</release>
<release version="0.3.0" date="2024-05-16">
<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.3.0</url>
<description>
<p>Fixes and features</p>
<ul>
<li>Russian translation (thanks github/alexkdeveloper)</li>
<li>Fixed: Cannot close app on first setup</li>
<li>Fixed: Brand colors for Flathub</li>
<li>Fixed: App description</li>
<li>Fixed: Only show 'save changes dialog' when you actually change the url</li>
</ul>
<p>
Please report any errors to the issues page, thank you.
</p>
</description>
</release>
<release version="0.2.2" date="2024-05-14">
<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.2.2</url>
<description>
<p>0.2.2 Bug fixes</p>
<ul>
<li>Toast messages appearing behind dialogs</li>
<li>Local model list not updating when changing servers</li>
<li>Closing the setup dialog closes the whole app</li>
</ul>
<p>
Please report any errors to the issues page, thank you.
</p>
</description>
</release>
<release version="0.2.1" date="2024-05-14">
<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.2.1</url>
<description>
<p>0.2.1 Data saving fix</p>
<p>The app didn't save the config files and chat history to the right directory, this is now fixed</p>
<p>
Please report any errors to the issues page, thank you.
</p>
</description>
</release>
<release version="0.2.0" date="2024-05-14">
<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.2.0</url>
<description>
<p>0.2.0</p>
<p>Big Update</p>
<p>New Features</p>
<ul>
<li>Restore chat after closing the app</li>
<li>A button to clear the chat</li>
<li>Fixed multiple bugs involving how messages are shown</li>
<li>Added welcome dialog</li>
<li>More stability</li>
</ul>
<p>
Please report any errors to the issues page, thank you.
</p>
</description>
</release>
<release version="0.1.2" date="2024-05-13">
<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.1.2</url>
<description>
<p>0.1.2 Quick fixes</p>
<p>This release fixes some metadata needed to have a proper Flatpak application</p>
</description>
</release>
<release version="0.1.1" date="2024-05-12">
<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/0.1.1</url>
<description>
<p>0.1.1 Stable Release</p>
<p>This is the first public version of Alpaca</p>
<p>Features</p>
<ul>
<li>Talk to multiple models in the same conversation</li>
<li>Pull and delete models from the app</li>
</ul>
<p>
Please report any errors to the issues page, thank you.
</p>
</description>
</release>
</releases>
</component>
```
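The `<releases>` block above is ordered newest first. Versions step down from 0.4.0 to 0.1.1, and the dates never move forward. A small standard-library check can preserve that invariant as entries are added.

This sketch assumes:
- plain dotted numeric versions;
- a `date` attribute on every `<release>`, as in all the entries shown.

The function names are illustrative, not taken from the project. `appstreamcli validate` remains the general-purpose validator for the rest of the file.

```python
import xml.etree.ElementTree as ET
from datetime import date


def release_entries(metainfo_xml: str) -> list[tuple[str, date]]:
    """Return (version, date) for every <release> element, in document order."""
    root = ET.fromstring(metainfo_xml)
    return [
        (rel.get("version"), date.fromisoformat(rel.get("date")))
        for rel in root.iter("release")
    ]


def version_key(version: str) -> tuple[int, ...]:
    """'0.2.10' -> (0, 2, 10), so versions compare numerically, not as text."""
    return tuple(int(part) for part in version.split("."))


def is_newest_first(entries: list[tuple[str, date]]) -> bool:
    """True if versions strictly decrease and dates never increase down the list."""
    return all(
        version_key(v1) > version_key(v2) and d1 >= d2
        for (v1, d1), (v2, d2) in zip(entries, entries[1:])
    )
```

Comparing tuples of integers avoids the classic string-comparison trap where `"0.10.0" < "0.9.0"`.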

```
@@ -1,5 +1,5 @@
project('Alpaca',
version: '0.1.0',
version: '0.4.0',
meson_version: '>= 0.62.0',
default_options: [ 'warning_level=2', 'werror=false', ],
)
```
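This compare bumps the same version string in three places:
- the `project()` call, from 0.1.0;
- the About dialog's `version=` argument, from 0.1.1;
- a new top `<release>` entry.

Before the bump, the first two already disagreed. A rough consistency check with regular expressions follows. It is a sketch: the patterns only match the single-quoted `version: 'X.Y.Z'` and `version='X.Y.Z'` forms and the `<release version="X.Y.Z"` form seen in these hunks. Reading the three files is left to the caller, and the function names are hypothetical.

```python
import re
from typing import Optional

# One pattern per place the version appears in this compare.
PATTERNS = {
    # meson project(): "  version: '0.4.0'," at the start of a line
    # (the ^ anchor keeps "meson_version:" from matching).
    "meson": re.compile(r"^\s*version:\s*'([^']+)'", re.MULTILINE),
    # About dialog keyword argument: version='0.4.0'
    "about": re.compile(r"\bversion='([^']+)'"),
    # First (newest) <release> element in the metainfo.
    "metainfo": re.compile(r'<release\s+version="([^"]+)"'),
}


def collect_versions(meson_text: str, about_text: str,
                     metainfo_text: str) -> dict[str, Optional[str]]:
    """Return the first version found in each text, or None where none matched."""
    texts = {"meson": meson_text, "about": about_text, "metainfo": metainfo_text}
    found: dict[str, Optional[str]] = {}
    for key, pattern in PATTERNS.items():
        match = pattern.search(texts[key])
        found[key] = match.group(1) if match else None
    return found


def versions_agree(meson_text: str, about_text: str, metainfo_text: str) -> bool:
    """True only if all three versions were found and are identical."""
    values = set(collect_versions(meson_text, about_text, metainfo_text).values())
    return len(values) == 1 and None not in values
```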

```
@@ -0,0 +1 @@
ru
```

112

po/ru.po

Normal file

```
@@ -0,0 +1,112 @@
msgid ""
msgstr ""
"Project-Id-Version: \n"
"POT-Creation-Date: 2024-05-16 19:29+0800\n"
"PO-Revision-Date: 2024-05-16 19:59+0800\n"
"Last-Translator: \n"
"Language-Team: \n"
"Language: ru_RU\n"
"MIME-Version: 1.0\n"
"Content-Type: text/plain; charset=UTF-8\n"
"Content-Transfer-Encoding: 8bit\n"
"X-Generator: Poedit 3.4.2\n"
"X-Poedit-Basepath: ../src\n"
"X-Poedit-SearchPath-0: .\n"

#: gtk/help-overlay.ui:11
msgctxt "shortcut window"
msgid "General"
msgstr "Общие"

#: gtk/help-overlay.ui:14
msgctxt "shortcut window"
msgid "Show Shortcuts"
msgstr "Показывать ярлыки"

#: gtk/help-overlay.ui:20
msgctxt "shortcut window"
msgid "Quit"
msgstr "Выйти"

#: window.ui:30
msgid "Manage models"
msgstr "Управление моделями"

#: window.ui:44
msgid "Menu"
msgstr "Меню"

#: window.ui:106
msgid "Send"
msgstr "Отправить"

#: window.ui:137
msgid "Pulling Model"
msgstr "Тянущая модель"

#: window.ui:218
msgid "Previous"
msgstr "Предыдущий"

#: window.ui:233
msgid "Next"
msgstr "Следующий"

#: window.ui:259
msgid "Welcome to Alpaca"
msgstr "Добро пожаловать в Alpaca"

#: window.ui:260
msgid ""
"To get started, please ensure you have an Ollama instance set up. You can "
"either run Ollama locally on your machine or connect to a remote instance."
msgstr ""
"Для начала, пожалуйста, убедитесь, что у вас настроен экземпляр Ollama. Вы "
"можете либо запустить Ollama локально на своем компьютере, либо "
"подключиться к удаленному экземпляру."

#: window.ui:263
msgid "Ollama Website"
msgstr "Веб-сайт Ollama"

#: window.ui:279
msgid "Disclaimer"
msgstr "Отказ от ответственности"

#: window.ui:280
msgid ""
"Alpaca and its developers are not liable for any damages to devices or "
"software resulting from the execution of code generated by an AI model. "
"Please exercise caution and review the code carefully before running it."
msgstr ""
"Alpaca и ее разработчики не несут ответственности за любой ущерб, "
"причиненный устройствам или программному обеспечению в результате "
"выполнения кода, сгенерированного с помощью модели искусственного "
"интеллекта. Пожалуйста, будьте осторожны и внимательно ознакомьтесь с кодом "
"перед его запуском."

#: window.ui:292
msgid "Setup"
msgstr "Установка"

#: window.ui:293
msgid ""
"If you are running an Ollama instance locally and haven't modified the "
"default ports, you can use the default URL. Otherwise, please enter the URL "
"of your Ollama instance."
msgstr ""
"Если вы запускаете локальный экземпляр Ollama и не изменили порты по "
"умолчанию, вы можете использовать URL-адрес по умолчанию. В противном "
"случае, пожалуйста, введите URL-адрес вашего экземпляра Ollama."

#: window.ui:313
msgid "_Clear Conversation"
msgstr "_Очистить разговор"

#: window.ui:317
msgid "_Change Server"
msgstr "_Изменить Сервер"

#: window.ui:321
msgid "_About Alpaca"
msgstr "_О Программе"
```

```
@@ -1,34 +0,0 @@
{
"name": "python3-requests",
"buildsystem": "simple",
"build-commands": [
"pip3 install --verbose --exists-action=i --no-index --find-links=\"file://${PWD}\" --prefix=${FLATPAK_DEST} \"requests\" --no-build-isolation"
],
"sources": [
{
"type": "file",
"url": "https://files.pythonhosted.org/packages/ba/06/a07f096c664aeb9f01624f858c3add0a4e913d6c96257acb4fce61e7de14/certifi-2024.2.2-py3-none-any.whl",
"sha256": "dc383c07b76109f368f6106eee2b593b04a011ea4d55f652c6ca24a754d1cdd1"
},
{
"type": "file",
"url": "https://files.pythonhosted.org/packages/63/09/c1bc53dab74b1816a00d8d030de5bf98f724c52c1635e07681d312f20be8/charset-normalizer-3.3.2.tar.gz",
"sha256": "f30c3cb33b24454a82faecaf01b19c18562b1e89558fb6c56de4d9118a032fd5"
},
{
"type": "file",
"url": "https://files.pythonhosted.org/packages/e5/3e/741d8c82801c347547f8a2a06aa57dbb1992be9e948df2ea0eda2c8b79e8/idna-3.7-py3-none-any.whl",
"sha256": "82fee1fc78add43492d3a1898bfa6d8a904cc97d8427f683ed8e798d07761aa0"
},
{
"type": "file",
"url": "https://files.pythonhosted.org/packages/70/8e/0e2d847013cb52cd35b38c009bb167a1a26b2ce6cd6965bf26b47bc0bf44/requests-2.31.0-py3-none-any.whl",
"sha256": "58cd2187c01e70e6e26505bca751777aa9f2ee0b7f4300988b709f44e013003f"
},
{
"type": "file",
"url": "https://files.pythonhosted.org/packages/a2/73/a68704750a7679d0b6d3ad7aa8d4da8e14e151ae82e6fee774e6e0d05ec8/urllib3-2.2.1-py3-none-any.whl",
"sha256": "450b20ec296a467077128bff42b73080516e71b56ff59a60a02bef2232c4fa9d"
}
]
}
```

```
@@ -89,3 +89,4 @@ available_models = {
"llava-phi3":"A new small LLaVA model fine-tuned from Phi 3 Mini."
}
```

```
@@ -7,9 +7,9 @@ def simple_get(connection_url:str) -> dict:
if response.status_code == 200:
return {"status": "ok", "text": response.text, "status_code": response.status_code}
else:
return {"status": "error", "text": f"Failed to connect to {connection_url}. Status code: {response.status_code}", "status_code": response.status_code}
return {"status": "error", "status_code": response.status_code}
except Exception as e:
return {"status": "error", "text": f"An error occurred while trying to connect to {connection_url}", "status_code": 0}
return {"status": "error", "status_code": 0}

def simple_delete(connection_url:str, data) -> dict:
try:
```
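`simple_get()` wraps a GET request and always returns a dict with these keys:
- `"status"` is `"ok"` or `"error"`;
- `"status_code"` is the HTTP code, or 0 when no response arrived;
- after this change, only the success case carries `"text"`.

The project uses `requests`. The sketch below swaps in the standard library's `urllib.request` so it runs without third-party packages. It also adds a `timeout` parameter. That parameter is this sketch's own addition, since the request call itself lies outside the hunk.

```python
import urllib.error
import urllib.request


def simple_get(connection_url: str, timeout: float = 5.0) -> dict:
    """GET a URL and report the outcome in the dict shape used by the hunk above."""
    try:
        with urllib.request.urlopen(connection_url, timeout=timeout) as response:
            if response.status == 200:
                body = response.read().decode("utf-8", errors="replace")
                return {"status": "ok", "text": body, "status_code": response.status}
            # Other 2xx codes: treated as errors, matching the `== 200` check above.
            return {"status": "error", "status_code": response.status}
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses are raised as HTTPError, which still carries the code.
        # HTTPError subclasses URLError, so it must be caught first.
        return {"status": "error", "status_code": err.code}
    except (urllib.error.URLError, ValueError, OSError):
        # Refused connection, DNS failure, timeout, or a malformed URL.
        return {"status": "error", "status_code": 0}
```

Against a local instance, `simple_get("http://localhost:11434")` should return `"status": "ok"`, with `"text"` containing `Ollama is running`. That is the string `dialog_response()` and `verify_connection()` look for further down.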

```
@@ -19,7 +19,7 @@ def simple_delete(connection_url:str, data) -> dict:
else:
return {"status": "error", "text": "Failed to delete", "status_code": response.status_code}
except Exception as e:
return {"status": "error", "text": f"An error occurred while trying to connect to {connection_url}", "status_code": 0}
return {"status": "error", "status_code": 0}

def stream_post(connection_url:str, data, callback:callable) -> dict:
try:
```

```
@@ -31,11 +31,11 @@ def stream_post(connection_url:str, data, callback:callable) -> dict:
for line in response.iter_lines():
if line:
callback(json.loads(line.decode("utf-8")))
return {"status": "ok", "text": "All good", "status_code": response.status_code}
return {"status": "ok", "status_code": response.status_code}
else:
return {"status": "error", "text": "Error posting data", "status_code": response.status_code}
return {"status": "error", "status_code": response.status_code}
except Exception as e:
return {"status": "error", "text": f"An error occurred while trying to connect to {connection_url}", "status_code": 0}
return {"status": "error", "status_code": 0}

from time import sleep
```

```
@@ -58,4 +58,4 @@ def stream_post_fake(connection_url:str, data, callback:callable) -> dict:
sleep(.1)
data = {"status": msg}
callback(data)
return {"status": "ok", "text": "All good", "status_code": 200}
return {"status": "ok", "status_code": 200}
```
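`stream_post()` hands every non-empty line of `response.iter_lines()` to `json.loads` and passes the resulting object to a callback. In other words, it reads newline-delimited JSON. That parsing step can be pulled out into a function that takes any iterable of byte strings, so it can be exercised without a server.

This is a sketch, not the project's code. `collect_chat_reply` further assumes Ollama `/api/chat`-style chunks with a `message.content` field and a `done` flag, which these hunks do not show.

```python
import json
from typing import Callable, Iterable


def consume_ndjson(lines: Iterable[bytes], callback: Callable[[dict], None]) -> int:
    """Decode each non-empty line as one JSON object, pass it to callback, return the count."""
    count = 0
    for line in lines:
        if not line.strip():
            continue  # blank keep-alive lines carry no JSON object
        callback(json.loads(line.decode("utf-8")))
        count += 1
    return count


def collect_chat_reply(lines: Iterable[bytes]) -> str:
    """Join message.content fragments of /api/chat-style chunks, stopping after done."""
    fragments: list[str] = []
    finished = False

    def on_chunk(chunk: dict) -> None:
        nonlocal finished
        if finished:
            return  # ignore anything after the chunk that reported done
        fragments.append(chunk.get("message", {}).get("content", ""))
        finished = bool(chunk.get("done"))

    consume_ndjson(lines, on_chunk)
    return "".join(fragments)
```

With `requests`, `response.iter_lines()` yields `bytes` by default, so it can be passed straight to `consume_ndjson`. In a test, a plain list of byte strings stands in for the stream.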

```
@@ -33,8 +33,9 @@ class AlpacaApplication(Adw.Application):
super().__init__(application_id='com.jeffser.Alpaca',
flags=Gio.ApplicationFlags.DEFAULT_FLAGS)
self.create_action('quit', lambda *_: self.quit(), ['<primary>q'])
self.create_action('clear', lambda *_: AlpacaWindow.clear_conversation_dialog(self.props.active_window), ['<primary>e'])
self.create_action('reconnect', lambda *_: AlpacaWindow.show_connection_dialog(self.props.active_window), ['<primary>r'])
self.create_action('about', self.on_about_action)
self.create_action('preferences', self.on_preferences_action)

def do_activate(self):
win = self.props.active_window
```

```
@@ -47,16 +48,14 @@ class AlpacaApplication(Adw.Application):
application_name='Alpaca',
application_icon='com.jeffser.Alpaca',
developer_name='Jeffry Samuel Eduarte Rojas',
version='0.1.1',
version='0.4.0',
developers=['Jeffser https://jeffser.com'],
designers=['Jeffser https://jeffser.com'],
translator_credits='Alex K (Russian) https://github.com/alexkdeveloper',
copyright='© 2024 Jeffser',
issue_url='https://github.com/Jeffser/Alpaca/issues')
about.present()

def on_preferences_action(self, widget, _):
print('app.preferences action activated')

def create_action(self, name, callback, shortcuts=None):
action = Gio.SimpleAction.new(name, None)
action.connect("activate", callback)
```

487

src/window.py

```
@@ -18,28 +18,47 @@
# SPDX-License-Identifier: GPL-3.0-or-later

import gi
gi.require_version("Soup", "3.0")
from gi.repository import Adw, Gtk, GLib
import json, requests, threading
gi.require_version('GtkSource', '5')
gi.require_version('GdkPixbuf', '2.0')
from gi.repository import Adw, Gtk, Gdk, GLib, GtkSource, Gio, GdkPixbuf
import json, requests, threading, os, re, base64
from io import BytesIO
from PIL import Image
from datetime import datetime
from .connection_handler import simple_get, simple_delete, stream_post, stream_post_fake
from .available_models import available_models

@Gtk.Template(resource_path='/com/jeffser/Alpaca/window.ui')
class AlpacaWindow(Adw.ApplicationWindow):
config_dir = os.path.join(os.getenv("XDG_CONFIG_HOME"), "/", os.path.expanduser("~/.var/app/com.jeffser.Alpaca/config"))
__gtype_name__ = 'AlpacaWindow'

#Variables
ollama_url = None
local_models = []
messages_history = []
#In the future I will at multiple chats, for now I'll save it like this so that past chats don't break in the future
current_chat_id="0"
chats = {"chats": {"0": {"messages": []}}}
attached_image = {"path": None, "base64": None}

#Elements
bot_message : Gtk.TextBuffer = None
overlay = Gtk.Template.Child()
bot_message_box : Gtk.Box = None
bot_message_view : Gtk.TextView = None
connection_dialog = Gtk.Template.Child()
connection_carousel = Gtk.Template.Child()
connection_previous_button = Gtk.Template.Child()
connection_next_button = Gtk.Template.Child()
connection_url_entry = Gtk.Template.Child()
main_overlay = Gtk.Template.Child()
pull_overlay = Gtk.Template.Child()
manage_models_overlay = Gtk.Template.Child()
connection_overlay = Gtk.Template.Child()
chat_container = Gtk.Template.Child()
message_entry = Gtk.Template.Child()
chat_window = Gtk.Template.Child()
message_text_view = Gtk.Template.Child()
send_button = Gtk.Template.Child()
image_button = Gtk.Template.Child()
file_filter_image = Gtk.Template.Child()
model_drop_down = Gtk.Template.Child()
model_string_list = Gtk.Template.Child()
```
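The `config_dir` line above behaves differently from how it reads:
- If `XDG_CONFIG_HOME` is unset, `os.getenv` returns `None` and `os.path.join` raises `TypeError` as soon as the class body runs.
- If it is set, the absolute `"/"` component and the absolute expanded home path each discard everything before them. The result is therefore always `~/.var/app/com.jeffser.Alpaca/config`.

Inside the Flatpak sandbox, `XDG_CONFIG_HOME` already points at that same per-app directory, which is presumably why the line works there. Below is a minimal sketch of an explicit lookup with a fallback; the function name is hypothetical. `GLib.get_user_config_dir()` is the GLib-side alternative for the same XDG lookup.

```python
import os
from pathlib import Path
from typing import Mapping

APP_ID = "com.jeffser.Alpaca"


def resolve_config_dir(environ: Mapping[str, str] = os.environ) -> Path:
    """Prefer $XDG_CONFIG_HOME; fall back to the Flatpak per-app path if unset or empty."""
    xdg = environ.get("XDG_CONFIG_HOME")
    if xdg:  # the XDG spec treats an empty value the same as unset
        return Path(xdg)
    return Path.home() / ".var" / "app" / APP_ID / "config"
```

Passing the environment as a parameter keeps the function testable. The real call site would just use the default `os.environ`.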

```
@@ -51,14 +70,36 @@ class AlpacaWindow(Adw.ApplicationWindow):
pull_model_status_page = Gtk.Template.Child()
pull_model_progress_bar = Gtk.Template.Child()

def show_toast(self, msg:str):
toast_messages = {
"error": [
"An error occurred",
"Failed to connect to server",
"Could not list local models",
"Could not delete model",
"Could not pull model",
"Cannot open image"
],
"info": [
"Please select a model before chatting",
"Conversation cannot be cleared while receiving a message"
],
"good": [
"Model deleted successfully",
"Model pulled successfully"
]
}

def show_toast(self, message_type:str, message_id:int, overlay):
if message_type not in self.toast_messages or message_id > len(self.toast_messages[message_type] or message_id < 0):
message_type = "error"
message_id = 0
toast = Adw.Toast(
title=msg,
title=self.toast_messages[message_type][message_id],
timeout=2
)
self.overlay.add_toast(toast)
overlay.add_toast(toast)

def show_message(self, msg:str, bot:bool):
def show_message(self, msg:str, bot:bool, footer:str=None, image_base64:str=None):
message_text = Gtk.TextView(
editable=False,
focusable=False,
```
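The range check in the new `show_toast()` has two problems:
- The closing parenthesis of `len(...)` sits after `message_id < 0`. Since the list is truthy, `list or bool` evaluates to the list, and the negative-index test is never applied.
- `>` lets `message_id == len(list)` through, which then raises `IndexError`.

The intended check is `not 0 <= message_id < len(self.toast_messages[message_type])`. Below, the lookup is pulled out as a plain function with that check, as a sketch. The table is copied from the hunk, and `toast_text` is a hypothetical name.

```python
# Copied from the toast_messages table in the hunk above.
TOAST_MESSAGES = {
    "error": [
        "An error occurred",
        "Failed to connect to server",
        "Could not list local models",
        "Could not delete model",
        "Could not pull model",
        "Cannot open image",
    ],
    "info": [
        "Please select a model before chatting",
        "Conversation cannot be cleared while receiving a message",
    ],
    "good": [
        "Model deleted successfully",
        "Model pulled successfully",
    ],
}


def toast_text(message_type: str, message_id: int, messages: dict = TOAST_MESSAGES) -> str:
    """Return the toast string, or the generic error when type or index is out of range."""
    options = messages.get(message_type, [])
    if not 0 <= message_id < len(options):
        return messages["error"][0]
    return options[message_id]
```

With the original condition, `("info", 2)` would raise `IndexError`, and `("good", -1)` would silently show the last "good" message. Here both fall back to "An error occurred".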

```
@@ -67,133 +108,211 @@ class AlpacaWindow(Adw.ApplicationWindow):
margin_bottom=12,
margin_start=12,
margin_end=12,
hexpand=True,
css_classes=["flat"]
)
message_buffer = message_text.get_buffer()
message_buffer.insert(message_buffer.get_end_iter(), msg)
message_box = Adw.Bin(
child=message_text,
css_classes=["card" if bot else None]
if footer is not None: message_buffer.insert_markup(message_buffer.get_end_iter(), footer, len(footer))

message_box = Gtk.Box(
orientation=1,
css_classes=[None if bot else "card"]
)
message_text.set_valign(Gtk.Align.CENTER)
self.chat_container.append(message_box)
if bot: self.bot_message = message_buffer

if image_base64 is not None:
image_data = base64.b64decode(image_base64)
loader = GdkPixbuf.PixbufLoader.new()
loader.write(image_data)
loader.close()

pixbuf = loader.get_pixbuf()
texture = Gdk.Texture.new_for_pixbuf(pixbuf)

image = Gtk.Image.new_from_paintable(texture)
image.set_size_request(360, 360)
message_box.append(image)

message_box.append(message_text)

if bot:
self.bot_message = message_buffer
self.bot_message_view = message_text
self.bot_message_box = message_box
```
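`show_message()` decodes a base64 string back into pixels for display. Going the other way, a prompt for a llava-style model carries the attached image as base64 as well. Below is a minimal sketch of that request body. It assumes Ollama's `/api/chat` format, where a message may include an `images` list of base64 strings. How Alpaca itself assembles its request is not visible in this hunk, and both helper names are hypothetical.

```python
import base64
from typing import Optional


def encode_image(data: bytes) -> str:
    """Raw image bytes -> base64 text, the inverse of the b64decode call above."""
    return base64.b64encode(data).decode("ascii")


def build_chat_payload(model: str, history: list, prompt: str,
                       image_b64: Optional[str] = None, stream: bool = True) -> dict:
    """Append a user message (optionally with one image) to a copy of the history."""
    message = {"role": "user", "content": prompt}
    if image_b64 is not None:
        message["images"] = [image_b64]
    # Build a new list so the caller's history is not mutated.
    return {"model": model, "messages": [*history, message], "stream": stream}
```

A typical call would read the file behind `attached_image["path"]` in binary mode, pass the bytes through `encode_image`, and send the payload to the server with streaming enabled.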

```
def verify_if_image_can_be_used(self, pspec=None, user_data=None):
if self.model_drop_down.get_selected_item() == None: return True
selected = self.model_drop_down.get_selected_item().get_string().split(":")[0]
if selected in ['llava']:
self.image_button.set_sensitive(True)
return True
else:
self.image_button.set_sensitive(False)
self.image_button.set_css_classes([])
self.image_button.get_child().set_icon_name("image-x-generic-symbolic")
self.attached_image = {"path": None, "base64": None}
return False
```
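`verify_if_image_can_be_used()` strips the `:tag` suffix from the selected model and enables the image button only when the base name is in `['llava']`. Because the comparison is exact, a `llava-phi3:latest` model would leave the button disabled. That model appears in the `available_models` hunk, where it is described as a LLaVA model.

Below, the check is pulled out as a sketch with the list made a parameter. `VISION_MODELS` is a hypothetical allow-list, not something the project defines.

```python
from typing import Iterable

# Hypothetical allow-list: the hunk above checks only "llava";
# "llava-phi3" is taken from the available_models entry in this compare.
VISION_MODELS = frozenset({"llava", "llava-phi3"})


def base_name(model_tag: str) -> str:
    """Drop the ':tag' suffix, e.g. 'llava:13b' -> 'llava'."""
    return model_tag.split(":", 1)[0]


def supports_images(model_tag: str, vision_models: Iterable[str] = VISION_MODELS) -> bool:
    """True if the model's base name is on the vision allow-list."""
    return base_name(model_tag) in set(vision_models)
```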

```
def update_list_local_models(self):
self.local_models = []
response = simple_get(self.ollama_url + "/api/tags")
for i in range(self.model_string_list.get_n_items() -1, -1, -1):
self.model_string_list.remove(i)
if response['status'] == 'ok':
for model in json.loads(response['text'])['models']:
self.model_string_list.append(model["name"])
self.local_models.append(model["name"])
self.model_drop_down.set_selected(0)
self.verify_if_image_can_be_used()
return
#IF IT CONTINUES THEN THERE WAS EN ERROR
self.show_toast(response['text'])
self.show_connection_dialog()
```
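`update_list_local_models()` reads the body of `GET /api/tags` as JSON and takes the `"name"` of each entry under `"models"`. Below, that parsing step is isolated as a sketch so it can be checked against a canned body. The function name is hypothetical. Skipping entries without a `"name"` is a defensive choice of this sketch; the hunk indexes `model["name"]` directly.

```python
import json


def model_names(tags_body: str) -> list[str]:
    """Return the "name" of each entry under "models" in an /api/tags response body."""
    data = json.loads(tags_body)
    return [model["name"] for model in data.get("models", []) if "name" in model]
```

Paired with the `simple_get()` result shape from `connection_handler`, a call site could read `model_names(response["text"]) if response["status"] == "ok" else []`.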

def dialog_response(self, dialog, task):

|

||||

self.ollama_url = dialog.get_extra_child().get_text()

|

||||

if dialog.choose_finish(task) == "login":

|

||||

response = simple_get(self.ollama_url)

|

||||

if response['status'] == 'ok':

|

||||

if "Ollama is running" in response['text']:

|

||||

self.message_entry.grab_focus_without_selecting()

|

||||

self.update_list_local_models()

|

||||

return

|

||||

else:

|

||||

response = {"status": "error", "text": f"Unexpected response from {self.ollama_url} : {response['text']}"}

|

||||

#IF IT CONTINUES THEN THERE WAS EN ERROR

|

||||

self.show_toast(response['text'])

|

||||

self.show_connection_dialog()

|

||||

else:

|

||||

self.destroy()

|

||||

self.show_connection_dialog(True)

|

||||

self.show_toast("error", 2, self.connection_overlay)

|

||||

|

||||

def show_connection_dialog(self):
dialog = Adw.AlertDialog(
heading="Login",
body="Please enter the Ollama instance URL",
close_response="cancel"
)
dialog.add_response("cancel", "Cancel")
dialog.add_response("login", "Login")
dialog.set_response_appearance("login", Adw.ResponseAppearance.SUGGESTED)
def verify_connection(self):
response = simple_get(self.ollama_url)
if response['status'] == 'ok':
if "Ollama is running" in response['text']:
with open(os.path.join(self.config_dir, "server.conf"), "w+") as f: f.write(self.ollama_url)
#self.message_text_view.grab_focus_without_selecting()
self.update_list_local_models()
return True
return False

entry = Gtk.Entry(text="http://localhost:11434") #FOR TESTING PURPOSES
dialog.set_extra_child(entry)

dialog.choose(parent = self, cancellable = None, callback = self.dialog_response)
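`verify_connection` (and the older `dialog_response` it replaces) treats the server as reachable only when a GET on the configured URL succeeds and the body contains `Ollama is running`. The same check can be sketched with only the standard library in place of the diff's `simple_get` helper, whose implementation is not shown here; `is_ollama_running` is a hypothetical name and the 3-second timeout is an arbitrary choice:

```python
import urllib.error
import urllib.request


def is_ollama_running(base_url: str, timeout: float = 3.0) -> bool:
    """Return True if GET <base_url>/ answers with the Ollama banner text.

    Hypothetical helper; Alpaca itself goes through its own simple_get().
    """
    try:
        with urllib.request.urlopen(base_url.rstrip("/") + "/", timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, DNS failure, timeout, HTTP error status,
        # or a string that is not a URL at all (ValueError).
        return False
    return "Ollama is running" in body
```

Catching `ValueError` matters for a URL typed into a text entry: `urlopen` rejects strings without a scheme before any network access happens.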

def add_code_blocks(self):
text = self.bot_message.get_text(self.bot_message.get_start_iter(), self.bot_message.get_end_iter(), True)
GLib.idle_add(self.bot_message_view.get_parent().remove, self.bot_message_view)
# Define a regular expression pattern to match code blocks
code_block_pattern = re.compile(r'```(\w+)\n(.*?)\n```', re.DOTALL)
parts = []
pos = 0
for match in code_block_pattern.finditer(text):
start, end = match.span()
if pos < start:
normal_text = text[pos:start]
parts.append({"type": "normal", "text": normal_text.strip()})
language = match.group(1)
code_text = match.group(2)
parts.append({"type": "code", "text": code_text, "language": language})
pos = end
# Extract any remaining normal text after the last code block
if pos < len(text):
normal_text = text[pos:]
if normal_text.strip():
parts.append({"type": "normal", "text": normal_text.strip()})
for part in parts:
if part['type'] == 'normal':
message_text = Gtk.TextView(
editable=False,
focusable=False,
wrap_mode= Gtk.WrapMode.WORD,
margin_top=12,
margin_bottom=12,
margin_start=12,
margin_end=12,
hexpand=True,
css_classes=["flat"]
)
message_buffer = message_text.get_buffer()
if part['text'].split("\n")[-1] == parts[-1]['text'].split("\n")[-1]:
footer = "\n<small>" + part['text'].split('\n')[-1] + "</small>"
part['text'] = '\n'.join(part['text'].split("\n")[:-1])
message_buffer.insert(message_buffer.get_end_iter(), part['text'])
message_buffer.insert_markup(message_buffer.get_end_iter(), footer, len(footer))
else:
message_buffer.insert(message_buffer.get_end_iter(), part['text'])
self.bot_message_box.append(message_text)
else:
language = GtkSource.LanguageManager.get_default().get_language(part['language'])
buffer = GtkSource.Buffer.new_with_language(language)
buffer.set_text(part['text'])
buffer.set_style_scheme(GtkSource.StyleSchemeManager.get_default().get_scheme('classic-dark'))
source_view = GtkSource.View(
auto_indent=True, indent_width=4, buffer=buffer, show_line_numbers=True
)
source_view.get_style_context().add_class("card")
self.bot_message_box.append(source_view)
self.bot_message = None
self.bot_message_box = None
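`add_code_blocks` first splits the finished reply into ordered plain-text and code parts with the `code_block_pattern` regular expression, then builds one `Gtk.TextView` or `GtkSource.View` per part. The splitting step can be isolated as a pure function and tested without GTK. A minimal sketch using the same pattern; `split_code_blocks` is a hypothetical name, and one small difference is that whitespace-only text between two code blocks is skipped here, whereas the diff appends it as an empty "normal" part:

```python
import re

FENCE = "`" * 3  # built this way only to avoid a literal fence inside this snippet
# Same shape as the diff's pattern: a fence, a word-character language tag,
# a newline, the (non-greedy) code body, a newline, and a closing fence.
CODE_BLOCK_PATTERN = re.compile(FENCE + r"(\w+)\n(.*?)\n" + FENCE, re.DOTALL)


def split_code_blocks(text: str) -> list:
    """Split text into ordered 'normal' and 'code' parts (hypothetical helper)."""
    parts = []
    pos = 0
    for match in CODE_BLOCK_PATTERN.finditer(text):
        start, end = match.span()
        between = text[pos:start].strip()
        if between:
            parts.append({"type": "normal", "text": between})
        parts.append({"type": "code", "text": match.group(2), "language": match.group(1)})
        pos = end
    tail = text[pos:].strip()
    if tail:
        parts.append({"type": "normal", "text": tail})
    return parts
```

Because `\w+` requires a language tag, a fence opened without one is not treated as code and stays in the plain text, which is also how the diff behaves.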

def update_bot_message(self, data):
if data['done']:
try:
api_datetime = data['created_at']
api_datetime = api_datetime[:-4] + api_datetime[-1]
formated_datetime = datetime.strptime(api_datetime, "%Y-%m-%dT%H:%M:%S.%fZ").strftime("%Y/%m/%d %H:%M")
text = f"\n\n<small>{data['model']}\t|\t{formated_datetime}</small>"
GLib.idle_add(self.bot_message.insert_markup, self.bot_message.get_end_iter(), text, len(text))
except Exception as e: print(e)
self.bot_message = None
formated_datetime = datetime.now().strftime("%Y/%m/%d %H:%M")
text = f"\n<small>{data['model']}\t|\t{formated_datetime}</small>"
GLib.idle_add(self.bot_message.insert_markup, self.bot_message.get_end_iter(), text, len(text))
vadjustment = self.chat_window.get_vadjustment()
GLib.idle_add(vadjustment.set_value, vadjustment.get_upper())
self.save_history()
else:
if self.bot_message is None:
GLib.idle_add(self.show_message, data['message']['content'], True)
self.messages_history.append({
if self.chats["chats"][self.current_chat_id]["messages"][-1]['role'] == "user":
self.chats["chats"][self.current_chat_id]["messages"].append({
"role": "assistant",
"content": data['message']['content']
"model": data['model'],
"date": datetime.now().strftime("%Y/%m/%d %H:%M"),
"content": ''
})
else:
GLib.idle_add(self.bot_message.insert_at_cursor, data['message']['content'], len(data['message']['content']))
self.messages_history[-1]['content'] += data['message']['content']
#else: GLib.idle_add(self.bot_message.insert, self.bot_message.get_end_iter(), data['message']['content'])
GLib.idle_add(self.bot_message.insert, self.bot_message.get_end_iter(), data['message']['content'])
self.chats["chats"][self.current_chat_id]["messages"][-1]['content'] += data['message']['content']
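The removed branch of `update_bot_message` trimmed `created_at` with `api_datetime[:-4] + api_datetime[-1]` before parsing it with `"%Y-%m-%dT%H:%M:%S.%fZ"`. That only works for a `Z`-suffixed timestamp whose fraction has four to nine digits (dropping three leaves 1 to 6, which is all `%f` accepts). A timestamp with a `±HH:MM` offset instead of `Z` raises and falls through to the `except`. A more tolerant parser is sketched below, assuming `created_at` is an RFC 3339 string with an optional fraction of any length and either `Z` or an offset; `parse_created_at` is a hypothetical name:

```python
import re
from datetime import datetime

# Assumed shape: date, 'T', time, optional fraction of any length, then Z or +HH:MM / -HH:MM.
_RFC3339 = re.compile(
    r"^(?P<base>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2})"
    r"(?:\.(?P<frac>\d+))?"
    r"(?P<tz>Z|[+-]\d{2}:\d{2})$"
)


def parse_created_at(value: str) -> datetime:
    """Parse an RFC 3339 timestamp whose fraction may exceed 6 digits."""
    m = _RFC3339.match(value)
    if m is None:
        raise ValueError(f"unrecognised timestamp: {value!r}")
    # %f accepts at most 6 digits: truncate longer fractions, pad shorter ones.
    frac = (m.group("frac") or "0")[:6].ljust(6, "0")
    tz = "+00:00" if m.group("tz") == "Z" else m.group("tz")
    return datetime.strptime(f"{m.group('base')}.{frac}{tz}", "%Y-%m-%dT%H:%M:%S.%f%z")
```

`parse_created_at(data['created_at']).strftime("%Y/%m/%d %H:%M")` would produce the same display format as the diff. The result keeps the offset the server reported; call `.astimezone()` first to show local time.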

def send_message(self):
def run_message(self, messages, model):
response = stream_post(f"{self.ollama_url}/api/chat", data=json.dumps({"model": model, "messages": messages}), callback=self.update_bot_message)
GLib.idle_add(self.add_code_blocks)
GLib.idle_add(self.send_button.set_sensitive, True)
GLib.idle_add(self.image_button.set_sensitive, True)
GLib.idle_add(self.image_button.set_css_classes, [])
GLib.idle_add(self.image_button.get_child().set_icon_name, "image-x-generic-symbolic")
self.attached_image = {"path": None, "base64": None}
GLib.idle_add(self.message_text_view.set_sensitive, True)
if response['status'] == 'error':
GLib.idle_add(self.show_toast, 'error', 1, self.connection_overlay)
GLib.idle_add(self.show_connection_dialog, True)
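`run_message` posts to `/api/chat` and hands `update_bot_message` one decoded chunk at a time. Ollama streams that endpoint as newline-delimited JSON, one object per line, and the last object carries `"done": true`. A minimal sketch of consuming such a stream without GTK, assuming the lines are already split (how the diff's `stream_post` helper splits and decodes them is not shown, so nothing about it is assumed here); `accumulate_chat_stream` is a hypothetical name:

```python
import json
from typing import Iterable


def accumulate_chat_stream(lines: Iterable[str]) -> tuple:
    """Join the message.content fragments of an NDJSON chat stream.

    Returns (full_reply_text, final_object), where final_object is the
    chunk with done=True (an empty dict if the stream ended without one).
    """
    text_parts = []
    final = {}
    for line in lines:
        if not line.strip():
            continue  # tolerate blank lines between objects
        chunk = json.loads(line)
        # Same fields update_bot_message reads: message.content and done.
        text_parts.append(chunk.get("message", {}).get("content", ""))
        if chunk.get("done"):
            final = chunk
            break
    return "".join(text_parts), final
```

The final object is kept separately because it is where `update_bot_message` reads `model` and `created_at` for the footer.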

def send_message(self, button):
if not self.message_text_view.get_buffer().get_text(self.message_text_view.get_buffer().get_start_iter(), self.message_text_view.get_buffer().get_end_iter(), False): return
current_model = self.model_drop_down.get_selected_item()
if current_model is None:
GLib.idle_add(self.show_toast, "Please pull a model")
self.show_toast("info", 0, self.main_overlay)
return
self.messages_history.append({
formated_datetime = datetime.now().strftime("%Y/%m/%d %H:%M")
self.chats["chats"][self.current_chat_id]["messages"].append({
"role": "user",
"content": self.message_entry.get_text()
"model": "User",
"date": formated_datetime,
"content": self.message_text_view.get_buffer().get_text(self.message_text_view.get_buffer().get_start_iter(), self.message_text_view.get_buffer().get_end_iter(), False)
})
data = {
"model": current_model.get_string(),
"messages": self.messages_history
"messages": self.chats["chats"][self.current_chat_id]["messages"]
}
GLib.idle_add(self.message_entry.set_sensitive, False)
GLib.idle_add(self.send_button.set_sensitive, False)
GLib.idle_add(self.show_message, self.message_entry.get_text(), False)
GLib.idle_add(self.message_entry.get_buffer().set_text, "", 0)
response = stream_post(f"{self.ollama_url}/api/chat", data=json.dumps(data), callback=self.update_bot_message)
GLib.idle_add(self.send_button.set_sensitive, True)
GLib.idle_add(self.message_entry.set_sensitive, True)
if response['status'] == 'error':
self.show_toast(f"{response['text']}")
self.show_connection_dialog()

def send_button_activate(self, button):
if not self.message_entry.get_text(): return
thread = threading.Thread(target=self.send_message)
if self.verify_if_image_can_be_used() and self.attached_image["base64"] is not None:
data["messages"][-1]["images"] = [self.attached_image["base64"]]
self.message_text_view.set_sensitive(False)
self.send_button.set_sensitive(False)
self.image_button.set_sensitive(False)
self.show_message(self.message_text_view.get_buffer().get_text(self.message_text_view.get_buffer().get_start_iter(), self.message_text_view.get_buffer().get_end_iter(), False), False, f"\n\n<small>{formated_datetime}</small>", self.attached_image["base64"])
self.message_text_view.get_buffer().set_text("", 0)
self.show_message("", True)
thread = threading.Thread(target=self.run_message, args=(data['messages'], data['model']))
thread.start()
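Before starting `run_message`, the new code attaches the selected image to the last message as a base64 string inside an `images` list, but only when `verify_if_image_can_be_used()` allows it. A sketch of assembling that `/api/chat` request body, assuming the message dictionaries are JSON-serialisable as stored in `self.chats`; `build_chat_request` is a hypothetical name:

```python
import json
from typing import Optional


def build_chat_request(model: str, messages: list, image_b64: Optional[str] = None) -> str:
    """Return the JSON body for POST /api/chat (hypothetical helper).

    Like the diff, the image is attached to the last message in place, so it
    also ends up in the stored chat history that load_history reads back.
    """
    if image_b64 is not None and messages:
        messages[-1]["images"] = [image_b64]
    return json.dumps({"model": model, "messages": messages})
```

Mutating in place is deliberate here: `load_history` looks for `message['images']` to redisplay the attachment, so copying the list first would lose it after a restart.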

def delete_model(self, dialog, task, model_name, button):
if dialog.choose_finish(task) == "delete":
response = simple_delete(self.ollama_url + "/api/delete", data={"name": model_name})
print(response)
if response['status'] == 'ok':
button.set_icon_name("folder-download-symbolic")
button.set_css_classes(["accent", "pull"])
self.show_toast(f"Model '{model_name}' deleted successfully")
self.show_toast("good", 0, self.manage_models_overlay)
for i in range(self.model_string_list.get_n_items()):
if self.model_string_list.get_string(i) == model_name:
self.model_string_list.remove(i)
self.model_drop_down.set_selected(0)
break
elif response['status_code'] == '404':
self.show_toast(f"Delete request failed: Model was not found")
else:
self.show_toast(response['text'])
self.show_toast("error", 3, self.connection_overlay)
self.manage_models_dialog.close()
self.show_connection_dialog()
self.show_connection_dialog(True)

def pull_model_update(self, data):
try:
@@ -216,11 +335,11 @@ class AlpacaWindow(Adw.ApplicationWindow):
GLib.idle_add(button.set_icon_name, "user-trash-symbolic")
GLib.idle_add(button.set_css_classes, ["error", "delete"])
GLib.idle_add(self.model_string_list.append, model_name)
GLib.idle_add(self.show_toast, f"Model '{model_name}' pulled successfully")
GLib.idle_add(self.show_toast, "good", 1, self.manage_models_overlay)
else:
GLib.idle_add(self.show_toast, response['text'])
GLib.idle_add(self.show_toast, "error", 4, self.connection_overlay)
GLib.idle_add(self.manage_models_dialog.close)
GLib.idle_add(self.show_connection_dialog)
GLib.idle_add(self.show_connection_dialog, True)

def pull_model_start(self, dialog, task, model_name, button):
@@ -230,7 +349,6 @@ class AlpacaWindow(Adw.ApplicationWindow):

def model_action_button_activate(self, button, model_name):
action = list(set(button.get_css_classes()) & set(["delete", "pull"]))[0]
print(f"action: {action}")
dialog = Adw.AlertDialog(
heading=f"{action.capitalize()} Model",
body=f"Are you sure you want to {action} '{model_name}'?",
@@ -253,7 +371,7 @@ class AlpacaWindow(Adw.ApplicationWindow):
title = model_name,
subtitle = model_description,
)
model_name += ":latest"
if ":" not in model_name: model_name += ":latest"
button = Gtk.Button(
icon_name = "folder-download-symbolic" if model_name not in self.local_models else "user-trash-symbolic",
vexpand = False,
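This hunk replaces an unconditional `model_name += ":latest"` with a guard, so a name that already carries a tag (for example `llama2:13b`) is no longer turned into `llama2:13b:latest` and can match the names in `self.local_models`. Nearby, `model_action_button_activate` derives the action from the intersection of the button's CSS classes with `{"delete", "pull"}`. Both steps are sketched below as pure functions; `normalize_model_name` and `model_action` are hypothetical names, and the explicit error in `model_action` is an addition, not something the diff does:

```python
def normalize_model_name(name: str) -> str:
    """Append Ollama's default ':latest' tag only when the name has no tag."""
    return name if ":" in name else name + ":latest"


def model_action(css_classes) -> str:
    """Return 'delete' or 'pull' from a button's CSS classes (hypothetical helper)."""
    matches = set(css_classes) & {"delete", "pull"}
    if len(matches) != 1:
        # The diff's list(...)[0] raises IndexError when neither class is set
        # and picks an arbitrary element if both are present.
        raise ValueError(f"expected exactly one of 'delete'/'pull', got {sorted(matches)}")
    return matches.pop()
```

The guard makes normalization idempotent, which matters because the same name may pass through it more than once.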

@@ -268,14 +386,189 @@ class AlpacaWindow(Adw.ApplicationWindow):
self.update_list_available_models()

def connection_carousel_page_changed(self, carousel, index):
if index == 0: self.connection_previous_button.set_sensitive(False)
else: self.connection_previous_button.set_sensitive(True)
if index == carousel.get_n_pages()-1: self.connection_next_button.set_label("Connect")
else: self.connection_next_button.set_label("Next")
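`connection_carousel_page_changed` disables the Previous button on the first page and relabels Next as Connect on the last one. Pulling that decision into a pure function lets it be checked without a display. A sketch under the hypothetical name `navigation_state`:

```python
def navigation_state(index: int, n_pages: int) -> tuple:
    """Return (previous_sensitive, next_label) for a carousel page index."""
    previous_sensitive = index > 0
    next_label = "Connect" if index == n_pages - 1 else "Next"
    return previous_sensitive, next_label
```

The signal handler would then reduce to unpacking `navigation_state(index, carousel.get_n_pages())` into `set_sensitive` and `set_label` calls.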

def connection_previous_button_activate(self, button):
self.connection_carousel.scroll_to(self.connection_carousel.get_nth_page(self.connection_carousel.get_position()-1), True)

def connection_next_button_activate(self, button):
if button.get_label() == "Next": self.connection_carousel.scroll_to(self.connection_carousel.get_nth_page(self.connection_carousel.get_position()+1), True)
else:
self.ollama_url = self.connection_url_entry.get_text()
if self.verify_connection():
self.connection_dialog.force_close()
else:
self.show_connection_dialog(True)
self.show_toast("error", 1, self.connection_overlay)

def show_connection_dialog(self, error:bool=False):
self.connection_carousel.scroll_to(self.connection_carousel.get_nth_page(self.connection_carousel.get_n_pages()-1),False)
if self.ollama_url is not None: self.connection_url_entry.set_text(self.ollama_url)
if error: self.connection_url_entry.set_css_classes(["error"])
else: self.connection_url_entry.set_css_classes([])
self.connection_dialog.present(self)

def clear_conversation(self):
for widget in list(self.chat_container): self.chat_container.remove(widget)
self.chats["chats"][self.current_chat_id]["messages"] = []

def clear_conversation_dialog_response(self, dialog, task):
if dialog.choose_finish(task) == "empty":
self.clear_conversation()
self.save_history()

def clear_conversation_dialog(self):
if self.bot_message is not None:
self.show_toast("info", 1, self.main_overlay)
return
dialog = Adw.AlertDialog(
heading=f"Clear Conversation",
body=f"Are you sure you want to clear the conversation?",
close_response="cancel"
)
dialog.add_response("cancel", "Cancel")
dialog.add_response("empty", "Empty")
dialog.set_response_appearance("empty", Adw.ResponseAppearance.DESTRUCTIVE)
dialog.choose(
parent = self,
cancellable = None,
callback = self.clear_conversation_dialog_response
)

def save_history(self):
with open(os.path.join(self.config_dir, "chats.json"), "w+") as f:
json.dump(self.chats, f, indent=4)

def load_history(self):
if os.path.exists(os.path.join(self.config_dir, "chats.json")):
self.clear_conversation()
try:
with open(os.path.join(self.config_dir, "chats.json"), "r") as f:
self.chats = json.load(f)
except Exception as e:
self.chats = {"chats": {"0": {"messages": []}}}
for message in self.chats['chats'][self.current_chat_id]['messages']:
if message['role'] == 'user':
self.show_message(message['content'], False, f"\n\n<small>{message['date']}</small>", message['images'][0] if 'images' in message and len(message['images']) > 0 else None)
else:
self.show_message(message['content'], True, f"\n\n<small>{message['model']}\t|\t{message['date']}</small>")
self.add_code_blocks()
self.bot_message = None
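`save_history` rewrites `chats.json` in place with mode `"w+"`, while `load_history` falls back to an empty chat whenever the file cannot be parsed. Together, an interrupted write could silently discard the whole history on the next start. A common remedy, swapped in here rather than taken from the diff, is to write to a temporary file in the same directory and `os.replace` it over the original. A sketch assuming the same `{"chats": {"0": {"messages": []}}}` layout; `save_chats` and `load_chats` are hypothetical names:

```python
import copy
import json
import os
import tempfile

DEFAULT_CHATS = {"chats": {"0": {"messages": []}}}


def save_chats(config_dir: str, chats: dict) -> None:
    """Write chats.json atomically: dump to a temp file, then rename over the old one."""
    os.makedirs(config_dir, exist_ok=True)
    path = os.path.join(config_dir, "chats.json")
    # The temp file lives in the same directory so os.replace stays on one filesystem.
    fd, tmp = tempfile.mkstemp(dir=config_dir, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            json.dump(chats, f, indent=4)
        os.replace(tmp, path)
    except BaseException:
        os.unlink(tmp)  # only reached if the rename did not happen
        raise


def load_chats(config_dir: str) -> dict:
    """Load chats.json, falling back to a fresh empty layout if missing or corrupt."""
    path = os.path.join(config_dir, "chats.json")
    try:
        with open(path, "r", encoding="utf-8") as f:
            return json.load(f)
    except (OSError, json.JSONDecodeError):
        return copy.deepcopy(DEFAULT_CHATS)
```

Narrowing the `except` to I/O and JSON errors, instead of the diff's bare `Exception`, keeps genuine programming errors visible.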

def closing_connection_dialog_response(self, dialog, task):
result = dialog.choose_finish(task)
if result == "cancel": return
if result == "save":
self.ollama_url = self.connection_url_entry.get_text()
elif result == "discard" and self.ollama_url is None: self.destroy()
self.connection_dialog.force_close()
if self.ollama_url is None or self.verify_connection() == False:
self.show_connection_dialog(True)
self.show_toast("error", 1, self.connection_overlay)

def closing_connection_dialog(self, dialog):
if self.ollama_url is None: self.destroy()
if self.ollama_url == self.connection_url_entry.get_text():
self.connection_dialog.force_close()
if self.ollama_url is None or self.verify_connection() == False:
self.show_connection_dialog(True)
self.show_toast("error", 1, self.connection_overlay)
return
dialog = Adw.AlertDialog(
heading=f"Save Changes?",
body=f"Do you want to save the URL change?",
close_response="cancel"
)
dialog.add_response("cancel", "Cancel")
dialog.add_response("discard", "Discard")
dialog.add_response("save", "Save")
dialog.set_response_appearance("discard", Adw.ResponseAppearance.DESTRUCTIVE)
dialog.set_response_appearance("save", Adw.ResponseAppearance.SUGGESTED)
dialog.choose(
parent = self,
cancellable = None,
callback = self.closing_connection_dialog_response
)

def load_image(self, file_dialog, result):
try: file = file_dialog.open_finish(result)
except: return
try:
self.attached_image["path"] = file.get_path()
'''with open(self.attached_image["path"], "rb") as image_file:
self.attached_image["base64"] = base64.b64encode(image_file.read()).decode("utf-8")'''
with Image.open(self.attached_image["path"]) as img:
width, height = img.size
max_size = 240
if width > height:
new_width = max_size
new_height = int((max_size / width) * height)
else:
new_height = max_size
new_width = int((max_size / height) * width)
resized_img = img.resize((new_width, new_height), Image.LANCZOS)
with BytesIO() as output:
resized_img.save(output, format="JPEG")
image_data = output.getvalue()
self.attached_image["base64"] = base64.b64encode(image_data).decode("utf-8")

self.image_button.set_css_classes(["destructive-action"])
self.image_button.get_child().set_icon_name("edit-delete-symbolic")
except Exception as e:
print(e)
self.show_toast("error", 5, self.main_overlay)
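`load_image` scales the attachment so that its longer side is 240 px while keeping the aspect ratio, then re-encodes it as JPEG and base64. The size arithmetic on its own, sketched as a pure function under the hypothetical name `fit_within`:

```python
def fit_within(width: int, height: int, max_size: int = 240) -> tuple:
    """Scale (width, height) so the longer side equals max_size, keeping aspect ratio.

    Same arithmetic as load_image in the diff. Like it, this also upscales
    images that are already smaller than max_size, and int() truncates.
    """
    if width > height:
        return max_size, int((max_size / width) * height)
    return int((max_size / height) * width), max_size
```

If enlarging small images is not wanted, Pillow's `Image.thumbnail` keeps the aspect ratio and only ever shrinks. Note also that `save(..., format="JPEG")` fails for images with an alpha channel unless they are converted to RGB first, which would land in the `except` branch above.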

def remove_image(self, dialog, task):
if dialog.choose_finish(task) == 'remove':
self.image_button.set_css_classes([])
self.image_button.get_child().set_icon_name("image-x-generic-symbolic")
self.attached_image = {"path": None, "base64": None}

def open_image(self, button):
if "destructive-action" in button.get_css_classes():
dialog = Adw.AlertDialog(
heading=f"Remove Image?",
body=f"Are you sure you want to remove image?",
close_response="cancel"
)
dialog.add_response("cancel", "Cancel")
dialog.add_response("remove", "Remove")
dialog.set_response_appearance("remove", Adw.ResponseAppearance.DESTRUCTIVE)
dialog.choose(
parent = self,
cancellable = None,
callback = self.remove_image
)
else:
file_dialog = Gtk.FileDialog(default_filter=self.file_filter_image)
file_dialog.open(self, None, self.load_image)

def __init__(self, **kwargs):
super().__init__(**kwargs)
GtkSource.init()
self.manage_models_button.connect("clicked", self.manage_models_button_activate)
self.send_button.connect("clicked", self.send_button_activate)
self.send_button.connect("clicked", self.send_message)
self.image_button.connect("clicked", self.open_image)
self.set_default_widget(self.send_button)
self.message_entry.set_activates_default(self.send_button)
self.message_entry.set_text("Hi") #FOR TESTING PURPOSES
self.show_connection_dialog()
self.model_drop_down.connect("notify", self.verify_if_image_can_be_used)
#self.message_text_view.set_activates_default(self.send_button)
self.connection_carousel.connect("page-changed", self.connection_carousel_page_changed)
self.connection_previous_button.connect("clicked", self.connection_previous_button_activate)
self.connection_next_button.connect("clicked", self.connection_next_button_activate)
self.connection_url_entry.connect("changed", lambda entry: entry.set_css_classes([]))
self.connection_dialog.connect("close-attempt", self.closing_connection_dialog)
self.load_history()
if os.path.exists(os.path.join(self.config_dir, "server.conf")):
with open(os.path.join(self.config_dir, "server.conf"), "r") as f:
self.ollama_url = f.read()
if self.verify_connection() is False: self.show_connection_dialog(True)
else: self.connection_dialog.present(self)

296

src/window.ui


@@ -5,7 +5,7 @@
<template class="AlpacaWindow" parent="AdwApplicationWindow">
<property name="resizable">True</property>
<property name="content">
<object class="AdwToastOverlay" id="overlay">
<object class="AdwToastOverlay" id="main_overlay">
<child>
<object class="AdwToolbarView">
<child type="top">

@@ -36,7 +36,6 @@
</object>
</child>
</object>

</property>
<child type="end">
<object class="GtkMenuButton">

@@ -68,8 +67,6 @@
<property name="kinetic-scrolling">1</property>
<property name="vexpand">true</property>
<style>
<class name="undershoot-top"/>
<class name="undershoot-bottom"/>
<class name="card"/>
</style>
<child>

@@ -93,19 +90,54 @@
<property name="orientation">0</property>
<property name="spacing">12</property>
<child>
<object class="GtkEntry" id="message_entry">
<property name="hexpand">true</property>
</object>
</child>
<child>
<object class="GtkButton" id="send_button">
<object class="GtkScrolledWindow">
<style>
<class name="suggested-action"/>
<class name="card"/>
<class name="view"/>
</style>
<child>
<object class="AdwButtonContent">
<property name="label" translatable="true">Send</property>
<property name="icon-name">send-to-symbolic</property>
<object class="GtkTextView" id="message_text_view">
<property name="wrap-mode">word</property>
<property name="margin-top">6</property>
<property name="margin-bottom">6</property>
<property name="margin-start">6</property>
<property name="margin-end">6</property>
<property name="hexpand">true</property>
<style>
<class name="view"/>
</style>
</object>
</child>
</object>

</child>
<child>
<object class="GtkBox">
<property name="orientation">1</property>
<property name="spacing">12</property>
<child>
<object class="GtkButton" id="send_button">
<style>
<class name="suggested-action"/>
</style>
<child>
<object class="AdwButtonContent">
<property name="label" translatable="true">Send</property>
<property name="icon-name">send-to-symbolic</property>
</object>
</child>
</object>
</child>
<child>
<object class="GtkButton" id="image_button">
<property name="sensitive">false</property>
<property name="tooltip-text" translatable="true">Requires model 'llava' to be selected</property>
<child>
<object class="AdwButtonContent">
<property name="label" translatable="true">Image</property>
<property name="icon-name">image-x-generic-symbolic</property>
</object>
</child>
</object>
</child>
</object>

@@ -119,63 +151,26 @@
</child>
</object>
</property>

<object class="AdwDialog" id="pull_model_dialog">
<property name="can-close">false</property>
<property name="width-request">400</property>
<child>
<object class="AdwToolbarView">
<object class="AdwToastOverlay" id="pull_overlay">
<child>
<object class="AdwStatusPage" id="pull_model_status_page">
<property name="hexpand">true</property>
<property name="vexpand">true</property>
<property name="margin-top">24</property>
<property name="margin-bottom">24</property>
<property name="margin-start">24</property>
<property name="margin-end">24</property>
<property name="title" translatable="yes">Pulling Model</property>
<object class="AdwToolbarView">
<child>
<object class="GtkProgressBar" id="pull_model_progress_bar">
<property name="show-text">true</property>
</object>
</child>
</object>
</child>
</object>
</child>
</object>
<object class="AdwDialog" id="manage_models_dialog">
<property name="can-close">true</property>
<property name="width-request">400</property>
<property name="height-request">600</property>
<child>
<object class="AdwToolbarView">