Compare commits

328 Commits

| SHA1 |
|---|
| 83db9fd9d4 |
| 6cb49cfc98 |
| a928d2c074 |
| 4d35cea229 |
| 51d2326dee |
| 80dcae194b |
| 50759adb8e |
| f46d16d257 |
| 5a9eeefaa7 |
| f4f91d9aa1 |
| ad5f73e985 |
| d06ac2c2eb |
| 343411cd8c |
| e14750db44 |
| 3be3b21f93 |
| 5c28245ff3 |
| b433091e90 |
| fe22f34a3d |
| ef395a27a3 |
| 362e62ee36 |
| 80aabcb805 |
| c283f3f1d2 |
| 603fdb8150 |
| 6e0ae393a4 |
| abbdbf1abe |
| a68973ece6 |
| 50aad8cb6d |
| 79a7840f24 |
| 19a8aade60 |
| a591270d58 |
| 1087d3e336 |
| fb7393fe5c |
| d3159ae6ea |
| c2c047d8b7 |
| e897d6c931 |
| 3ceb25ccc6 |
| 9d740a7db9 |
| 4e77898487 |
| c913a25679 |
| 758e055f1c |
| a08be7351c |
| 08c0c54b98 |
| 687b99f9ab |
| 5801d43af9 |
| 5a28a16119 |
| 47e58d2ccd |
| d33e9c53d8 |
| 8869296aed |
| da4dd3341a |
| 15aa7fb844 |
| d74f535968 |
| 521a95fc6b |
| 9548e2ec40 |
| f1a3c7136f |
| 4542f26bb7 |
| 4926cb157e |
| 0d3b544a73 |
| daf56c2de4 |
| 46e3921585 |
| 707984e20d |
| cfb79a70be |
| c32d0acfd4 |
| 38de4ac18e |
| 923c0e52e2 |
| e753591b45 |
| 12e754e4bc |
| eb3919ad63 |
| 1b94864422 |
| 51a90e0b79 |
| 7264902199 |
| f08b03308e |
| f831466d87 |
| a54ec6fa9d |
| ed3136cffd |
| e37c5acbf9 |
| 9d2ad2eb3a |
| d1a0d6375b |
| ebf3af38c8 |
| 80b433e7a4 |
| 5da5c2c702 |
| fe8626f650 |
| ee998d978f |
| cee360d5a2 |
| 5098babfd2 |
| 7026655116 |
| 01ba38a23b |
| ef2c2650b0 |
| a7d955a5bf |
| 129677d27c |
| 9ae011a31b |
| abf1253980 |
| e0c7e9c771 |
| 6a4c98ef18 |
| 809e23fb9c |
| 69eaa56240 |
| df2a9d7b26 |
| da97a7c6ee |
| bb7a8b659a |
| 0999a64356 |
| 4647e1ba47 |
| ea0caf03d6 |
| 1c9ce2a117 |
| 01b38fa37a |
| 80d1149932 |
| cc1500f007 |
| d0735de129 |
| bbf678cb75 |
| a44a7b5044 |
| 427ecd4499 |
| 0a5bb0b97f |
| 252b76e7eb |
| 5cf2be2b7d |
| 7f9a5eb516 |
| 9ecfbe8c3f |
| 377adc8699 |
| 8598f73be7 |
| 6f9b3b7c02 |
| db198e10c0 |
| d2271a7ade |
| 8d3d650ecf |
| 4545f5a1b2 |
| 62c1354f8b |
| 2bd99860f2 |
| 8026550f7a |
| 68c03176d4 |
| ed54b2846a |
| ff927d6c77 |
| bd006da0c1 |
| a409800279 |
| 5d89ccc729 |
| fef3926ce3 |
| c95be9611d |
| 1c4fc4341e |
| 3ddc172437 |
| 0d65cf1cbc |
| cddcf496b2 |
| 9333b31444 |
| cbd3e90073 |
| a7b6e6bbce |
| 801c10fb77 |
| 50520b8474 |
| b66e2102d3 |
| 8c0f1fd4d5 |
| 8b851d1b56 |
| f36d6e1b29 |
| eecac162ef |
| 82e7a3a9e1 |
| f0505a0242 |
| 11dd13b430 |
| b8fe222052 |
| 47d19a58aa |
| fd67afbf33 |
| d06e08a64e |
| 77b08d9e52 |
| 9451bf88d0 |
| 82bb50d663 |
| edc3053774 |
| 1320ddb7d4 |
| d95f06a230 |
| 938ace91c1 |
| 175cfad81c |
| 2f399dbb64 |
| 27558b85af |
| bcc1f3fa65 |
| fd92a86c5e |
| 3b95d369b8 |
| a12920d801 |
| 2dd63df533 |
| cea1aa5028 |
| 54b96d4e3a |
| a470136476 |
| 7d35cb08dd |
| 1f03f1032e |
| 9e2b55a249 |
| 0fbb94cd72 |
| 004b3f8574 |
| 7d1931dd17 |
| 8b7f41afa7 |
| 4bc0832865 |
| a66c6d5f40 |
| 33b7cae24d |
| 47f5c88ef2 |
| ffe382aee2 |
| 919f71ee78 |
| 404d4476ae |
| f2b243cd5f |
| c2fae41355 |
| 8fda2cde9e |
| 930380cdce |
| 5b788ffe15 |
| 521c2bdde5 |
| eee73b1218 |
| 87d6da26c9 |
| 2029cd5cd2 |
| 36be752ee6 |
| 5b3586789f |
| 6ce670e643 |
| dd70e8139c |
| 3ac0936d1a |
| 1477bacf6a |
| d339a18901 |
| f9460416d9 |
| a9112cf3da |
| c873b49700 |
| 3c553e37d8 |
| 0c47fbb1f7 |
| 476138ef53 |
| 385ca4f0fa |
| 46fd642789 |
| e48249c7c9 |
| 9e8535e97e |
| a794c63a5a |
| f3610a46a2 |
| 20fd2cf6e3 |
| 7bf345d09d |
| 17e9560449 |
| c02e6a565e |
| 7fbc9b9bde |
| 416e97d488 |
| 753060d9f3 |
| 972c53000c |
| 2b948a49a0 |
| 7999548738 |
| d4d13b793f |
| 210b6f0d89 |
| 7f5894b274 |
| 2dc24ab945 |
| 2f153c9974 |
| fa22647acd |
| dd5d82fe7a |
| 98b179aeb5 |
| e1f1c005a0 |
| 6e226c5a4f |
| 7440fa5a37 |
| 4fe204605a |
| 4446b42b82 |
| 4b6cd17d0a |
| 1a6e74271c |
| 6ba3719031 |
| dd95e3df7e |
| 69fd7853c8 |
| c01c478ffe |
| f8be1da83a |
| 3a7625486e |
| fdc3b6c573 |
| 76939ed51f |
| b9cf761f4a |
| 4c515ba541 |
| d7c3595bf1 |
| 1fbd6a0824 |
| ccb59c7f02 |
| 04bef3e82a |
| 17105b98ed |
| 4bff1515a9 |
| 0a75893346 |
| 2ed92467f9 |
| 634ac122d9 |
| 44640b7e53 |
| 47e7b22a7e |
| 918928d4bb |
| 69fc172779 |
| d84dabbe4d |
| 23114210c4 |
| ea80e5a223 |
| 6087f31d41 |
| 30ee292a32 |
| 705a9319f5 |
| c789d9d87c |
| a7681b5505 |
| 9e74d8af0b |
| b52061f849 |
| 01b875c283 |
| 4cc3b78321 |
| 6205db87e6 |
| 518633b153 |
| 988ee7b7e7 |
| cdadde60ce |
| 4bb01d86d9 |
| 4cac43520f |
| d6dddd16f1 |
| c0da054635 |
| 2b4d94ca55 |
| e8e564738a |
| d48fbd8b62 |
| c1f80f209e |
| ed6b32c827 |
| fc436fd352 |
| ee6fdb1ca1 |
| 988db30355 |
| ea98ee5e99 |
| b8d1d43822 |
| 0d017c6d14 |
| 2825e9a003 |
| 6e9ddfcbf2 |
| 378689be39 |
| 31858fad12 |
| 60351d629d |
| 715a97159a |
| b48ce28b35 |
| 7ab0448cd3 |
| 5f6642fa63 |
| 5a0d1ed408 |
| 131e8fb6be |
| 1c7fb8ef93 |
| 8c0ec3957f |
| 72063a15d9 |
| 0d1b15aafc |
| ca10369bdc |
| 42af75d8d2 |
| a02871dd28 |
| e65a8bc648 |
| b373b6a34f |
| 6d6a0255e2 |
| 003d6a3d5f |
| 77a2c60fe5 |
| ac3bd699ee |
| 596498c81e |
| c95f764c77 |
| 5c5be05843 |
| 3fb26ec49e |
| 3f767d22e9 |
| 7f3fb0d82d |
| d56c132459 |
| acdce762c9 |
| bd557d9652 |
| 3363d13fa0 |
| 52ba44e260 |
| f06c2dae23 |

8

.github/ISSUE_TEMPLATE/bug_report.md

vendored

@@ -6,7 +6,7 @@ labels: bug

assignees: ''

---

<!--Please be aware that GNOME Code of Conduct applies to Alpaca, https://conduct.gnome.org/-->
**Describe the bug**
A clear and concise description of what the bug is.

@@ -16,5 +16,7 @@ A clear and concise description of what you expected to happen.

**Screenshots**
If applicable, add screenshots to help explain your problem.

**Additional context**
Add any other context about the problem here.
**Debugging information**
```
Please paste here the debugging information available at 'About Alpaca' > 'Troubleshooting' > 'Debugging Information'
```

2

.github/ISSUE_TEMPLATE/feature_request.md

vendored

@@ -6,7 +6,7 @@ labels: enhancement

assignees: ''

---

<!--Please be aware that GNOME Code of Conduct applies to Alpaca, https://conduct.gnome.org/-->
**Is your feature request related to a problem? Please describe.**
A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

18

.github/workflows/flatpak-builder.yml

vendored

Normal file

@@ -0,0 +1,18 @@

# .github/workflows/flatpak-build.yml
on:
  workflow_dispatch:
name: Flatpak Build
jobs:
  flatpak:
    name: "Flatpak"
    runs-on: ubuntu-latest
    container:
      image: bilelmoussaoui/flatpak-github-actions:gnome-46
      options: --privileged
    steps:
    - uses: actions/checkout@v4
    - uses: flatpak/flatpak-github-actions/flatpak-builder@v6
      with:
        bundle: com.jeffser.Alpaca.flatpak
        manifest-path: com.jeffser.Alpaca.json
        cache-key: flatpak-builder-${{ github.sha }}

24

.github/workflows/pylint.yml

vendored

Normal file

@@ -0,0 +1,24 @@

name: Pylint

on:
  workflow_dispatch:

jobs:
  build:
    runs-on: ubuntu-latest
    strategy:
      matrix:
        python-version: ["3.11"]
    steps:
    - uses: actions/checkout@v4
    - name: Set up Python ${{ matrix.python-version }}
      uses: actions/setup-python@v3
      with:
        python-version: ${{ matrix.python-version }}
    - name: Install dependencies
      run: |
        python -m pip install --upgrade pip
        pip install pylint
    - name: Analysing the code with pylint
      run: |
        pylint --rcfile=.pylintrc $(git ls-files '*.py' | grep -v 'src/available_models_descriptions.py')

14

.pylintrc

Normal file

@@ -0,0 +1,14 @@

[MASTER]

[MESSAGES CONTROL]
disable=undefined-variable, line-too-long, missing-function-docstring, consider-using-f-string, import-error

[FORMAT]
max-line-length=200

# Reasons for removing some checks:
# undefined-variable: _() is used by the translator on build time but it is not defined on the scripts
# line-too-long: I... I'm too lazy to make the lines shorter, maybe later
# missing-function-docstring I'm not adding a docstring to all the functions, most are self explanatory
# consider-using-f-string I can't use f-string because of the translator
# import-error The linter doesn't have access to all the libraries that the project itself does
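The `consider-using-f-string` and `undefined-variable` notes in the `.pylintrc` comments above both follow from how gettext-style translation works: the lookup key is the literal template string, so values have to be interpolated after `_()` returns. A minimal sketch of that behaviour, assuming a hypothetical in-memory catalog (the `DictTranslations` class, the Spanish string, and the model name are made up for illustration; in Alpaca `_()` is provided by the translation setup rather than defined like this):

```python
import gettext


class DictTranslations(gettext.NullTranslations):
    """Hypothetical stand-in for a compiled .mo catalog, backed by a dict."""

    def __init__(self, catalog):
        super().__init__()
        self._catalog_map = catalog

    def gettext(self, message):
        # Fall back to the untranslated message when no entry matches.
        return self._catalog_map.get(message, message)


# Assumed catalog entry: the key is the template exactly as written in the source.
_ = DictTranslations({"Pulling model {}": "Descargando modelo {}"}).gettext

name = "llama3"

# Works: the literal template is looked up first, then formatted.
good = _("Pulling model {}").format(name)

# Does not translate: the f-string is interpolated before the lookup,
# so the catalog is asked for "Pulling model llama3", which has no entry.
bad = _(f"Pulling model {name}")

print(good)
print(bad)
```

This is also why string-extraction tools cannot pick up f-strings as message IDs: the text they would need only exists at run time.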

34

Alpaca.doap

Normal file

@@ -0,0 +1,34 @@

<Project xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
         xmlns:rdfs="http://www.w3.org/2000/01/rdf-schema#"
         xmlns:foaf="http://xmlns.com/foaf/0.1/"
         xmlns:gnome="http://api.gnome.org/doap-extensions#"
         xmlns="http://usefulinc.com/ns/doap#">

  <name xml:lang="en">Alpaca</name>
  <shortdesc xml:lang="en">An Ollama client made with GTK4 and Adwaita</shortdesc>
  <homepage rdf:resource="https://jeffser.com/alpaca" />
  <bug-database rdf:resource="https://github.com/Jeffser/Alpaca/issues"/>
  <programming-language>Python</programming-language>

  <platform>GTK 4</platform>
  <platform>Libadwaita</platform>

  <maintainer>
    <foaf:Person>
      <foaf:name>Jeffry Samuel</foaf:name>
      <foaf:mbox rdf:resource="mailto:jeffrysamuer@gmail.com"/>
      <foaf:account>
        <foaf:OnlineAccount>
          <foaf:accountServiceHomepage rdf:resource="https://github.com"/>
          <foaf:accountName>jeffser</foaf:accountName>
        </foaf:OnlineAccount>
      </foaf:account>
      <foaf:account>
        <foaf:OnlineAccount>
          <foaf:accountServiceHomepage rdf:resource="https://gitlab.gnome.org"/>
          <foaf:accountName>jeffser</foaf:accountName>
        </foaf:OnlineAccount>
      </foaf:account>
    </foaf:Person>
  </maintainer>
</Project>

4

CODE_OF_CONDUCT.md

Normal file

@@ -0,0 +1,4 @@

Alpaca follows [GNOME's code of conduct](https://conduct.gnome.org/), please make sure to read it before interacting in any way with this repository.
To report any misconduct please reach out via private message on
- X (formally Twitter): [@jeffrysamuer](https://x.com/jeffrysamuer)
- Mastodon: [@jeffser@floss.social](https://floss.social/@jeffser)

30

CONTRIBUTING.md

Normal file

@@ -0,0 +1,30 @@

# Contributing Rules

## Translations

If you want to translate or contribute on existing translations please read [this discussion](https://github.com/Jeffser/Alpaca/discussions/153).

## Code

1) Before contributing code make sure there's an open [issue](https://github.com/Jeffser/Alpaca/issues) for that particular problem or feature.
2) Ask to contribute on the responses to the issue.
3) Wait for [my](https://github.com/Jeffser) approval, I might have already started working on that issue.
4) Test your code before submitting a pull request.

## Q&A

### Do I need to comment my code?

There's no need to add comments if the code is easy to read by itself.

### What if I need help or I don't understand the existing code?

You can reach out on the issue, I'll try to answer as soon as possible.

### What IDE should I use?

I use Gnome Builder but you can use whatever you want.

### Can I be credited?

You might be credited on the GitHub repository in the [thanks](https://github.com/Jeffser/Alpaca/blob/main/README.md#thanks) section of the README.

77

README.md

77

README.md

@@ -8,10 +8,17 @@ Alpaca is an [Ollama](https://github.com/ollama/ollama) client where you can man

|

||||

|

||||

---

|

||||

|

||||

> [!NOTE]

|

||||

> Please checkout [this discussion](https://github.com/Jeffser/Alpaca/discussions/292), I want to start developing a new app alongside Alpaca but I need some suggestions, thanks!

|

||||

|

||||

> [!WARNING]

|

||||

> This project is not affiliated at all with Ollama, I'm not responsible for any damages to your device or software caused by running code given by any AI models.

|

||||

|

||||

> [!IMPORTANT]

|

||||

> Please be aware that [GNOME Code of Conduct](https://conduct.gnome.org) applies to Alpaca before interacting with this repository.

|

||||

|

||||

## Features!

|

||||

|

||||

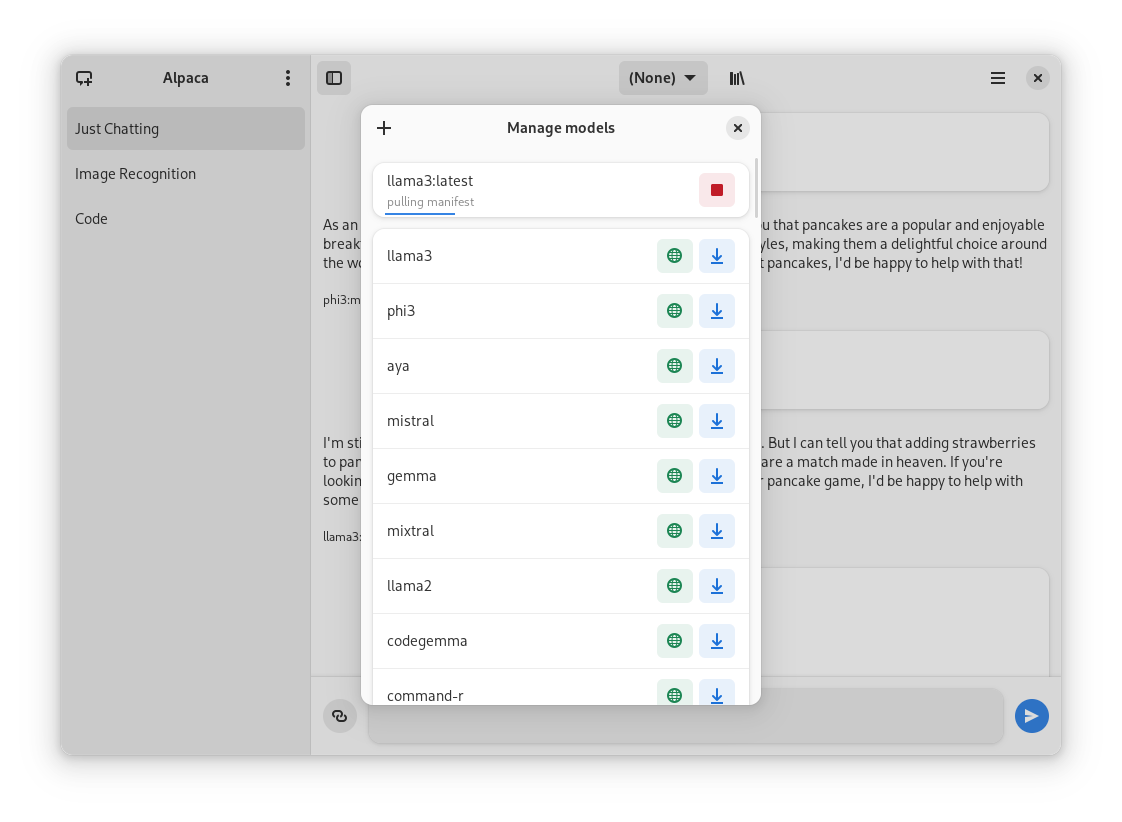

- Talk to multiple models in the same conversation

|

||||

- Pull and delete models from the app

|

||||

- Image recognition

|

||||

@@ -21,47 +28,61 @@ Alpaca is an [Ollama](https://github.com/ollama/ollama) client where you can man

|

||||

- Notifications

|

||||

- Import / Export chats

|

||||

- Delete / Edit messages

|

||||

- Regenerate messages

|

||||

- YouTube recognition (Ask questions about a YouTube video using the transcript)

|

||||

- Website recognition (Ask questions about a certain website by parsing the url)

|

||||

|

||||

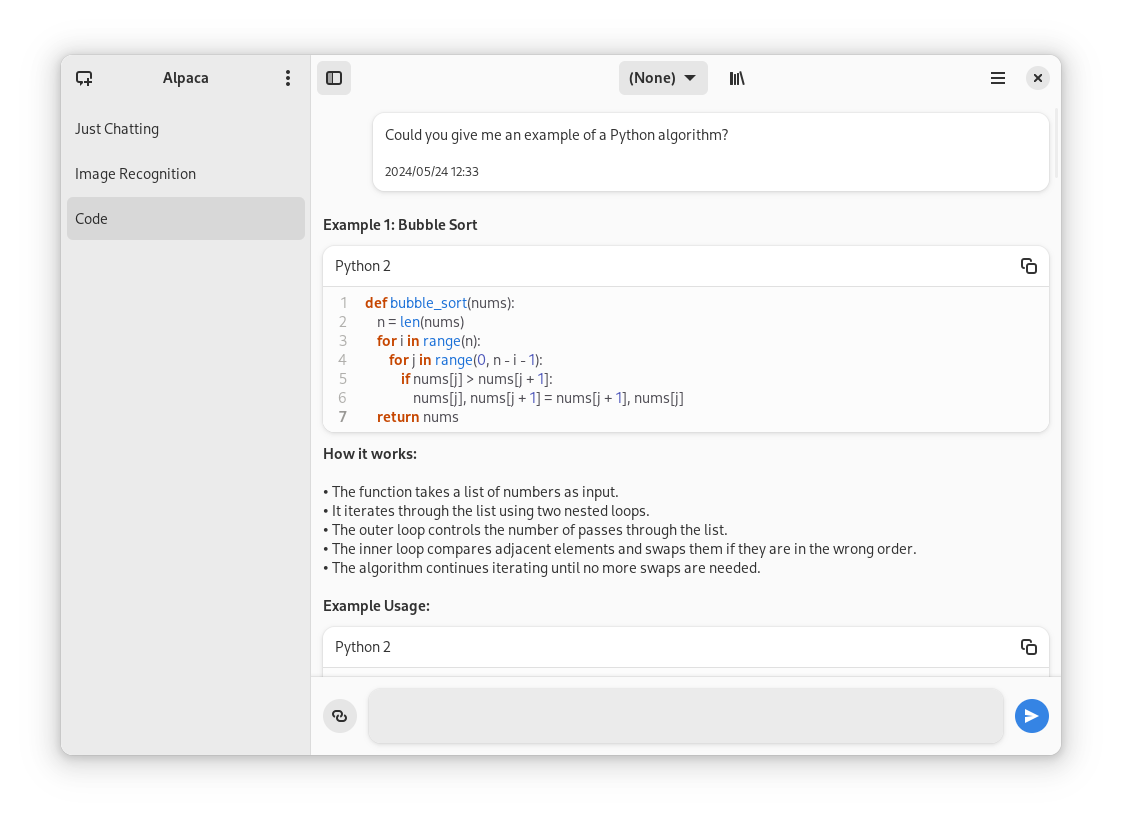

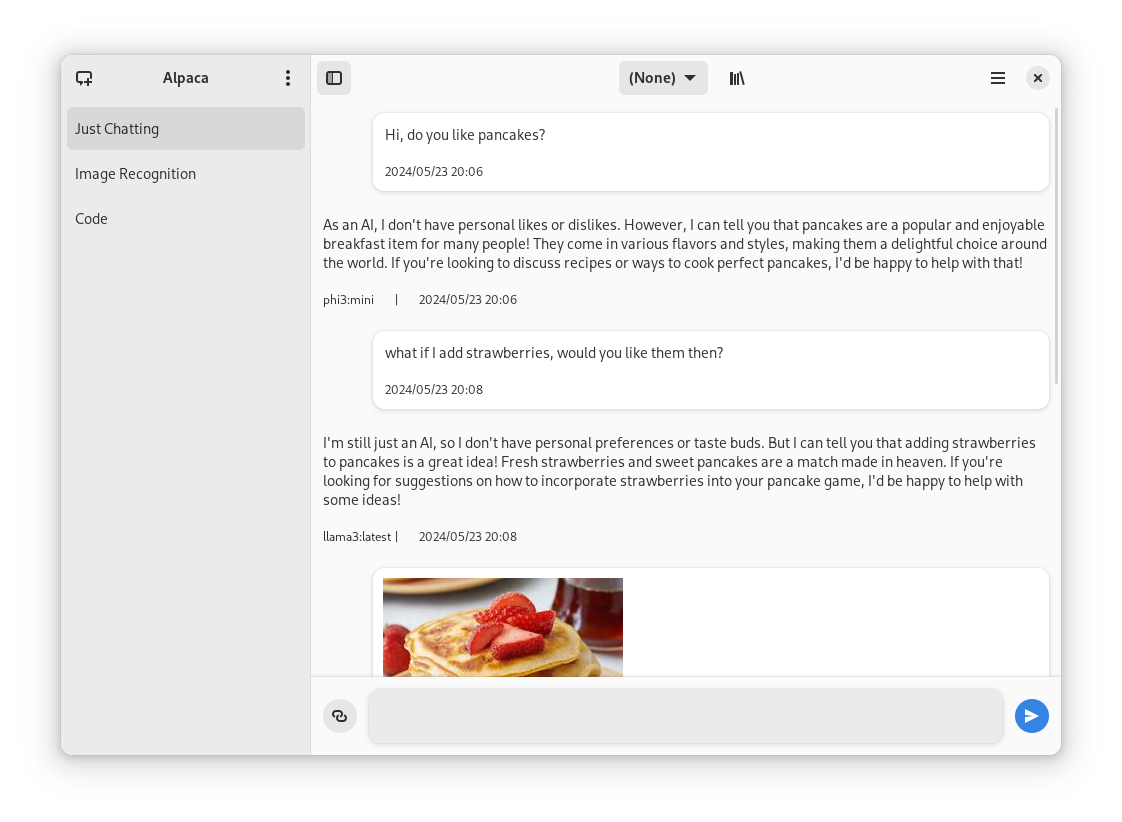

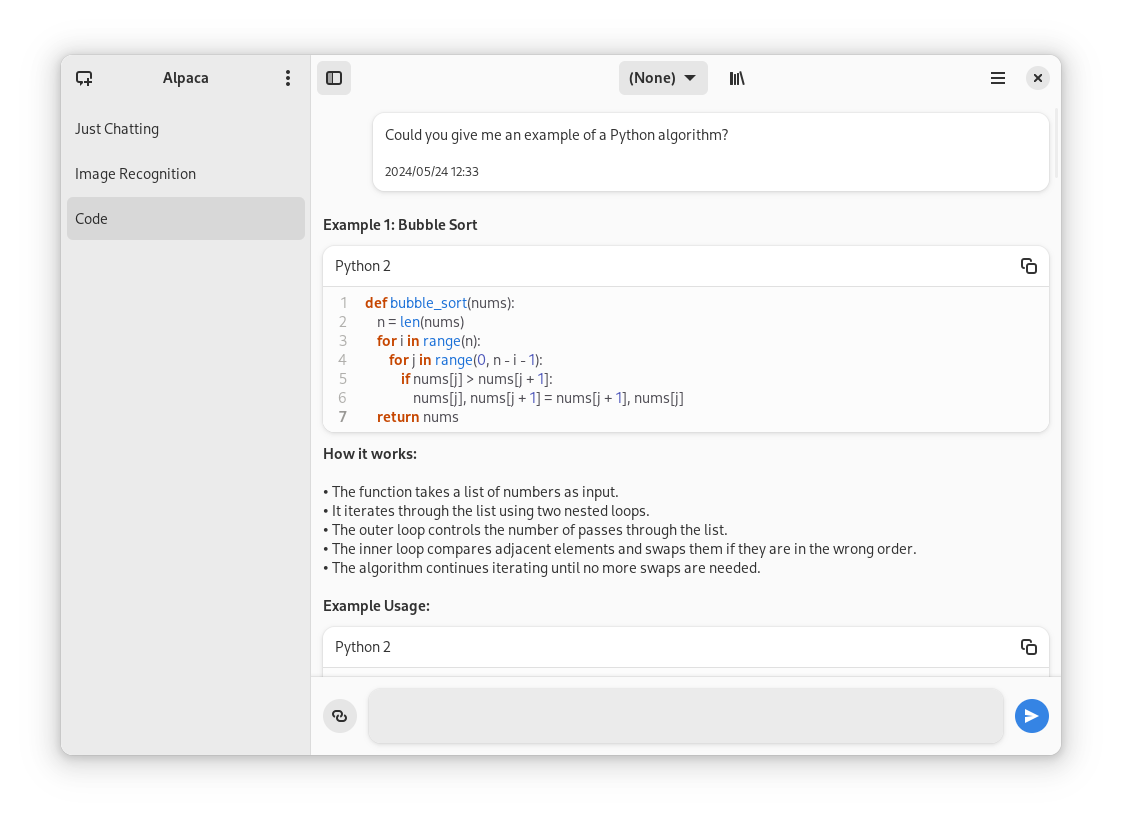

## Screenies

|

||||

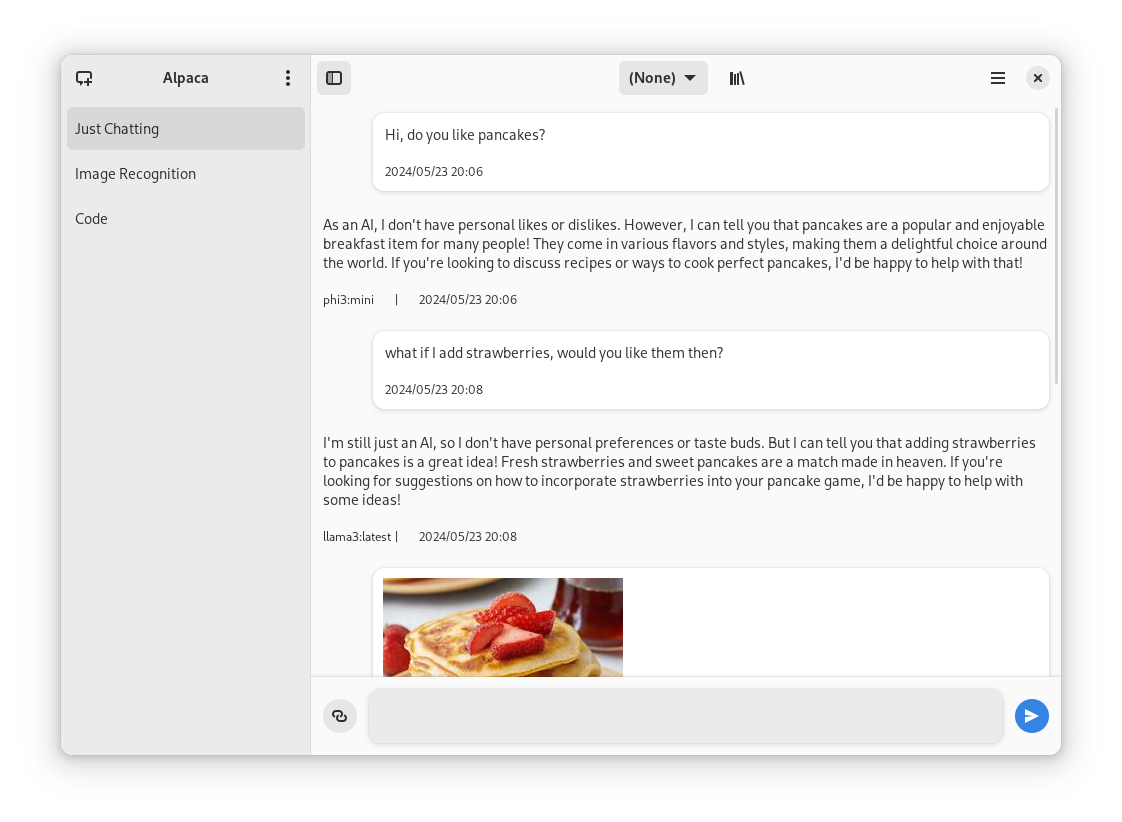

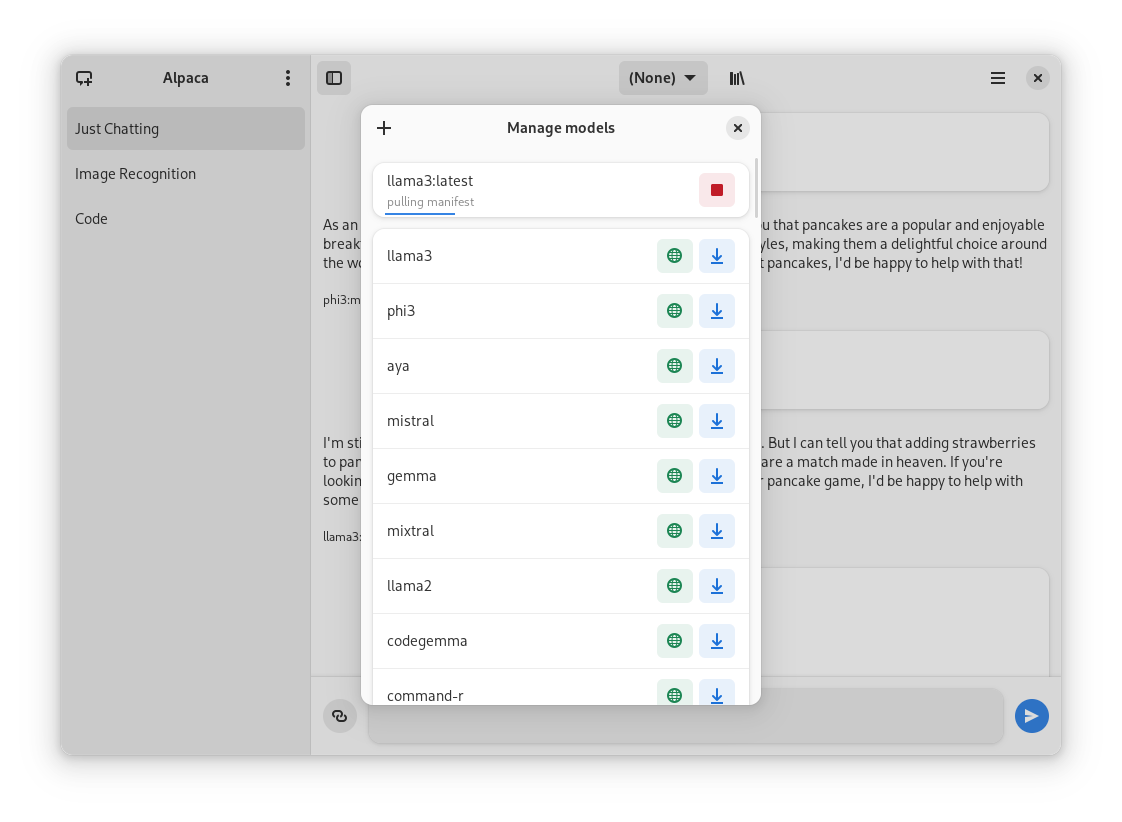

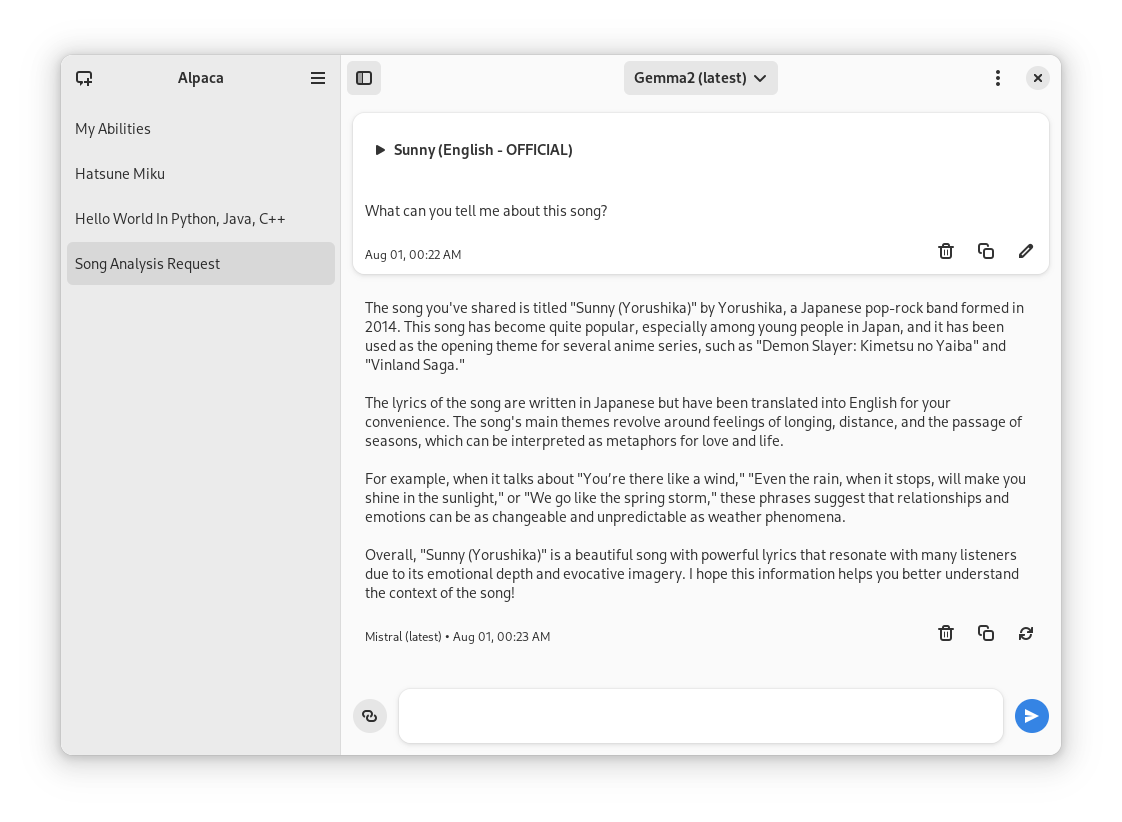

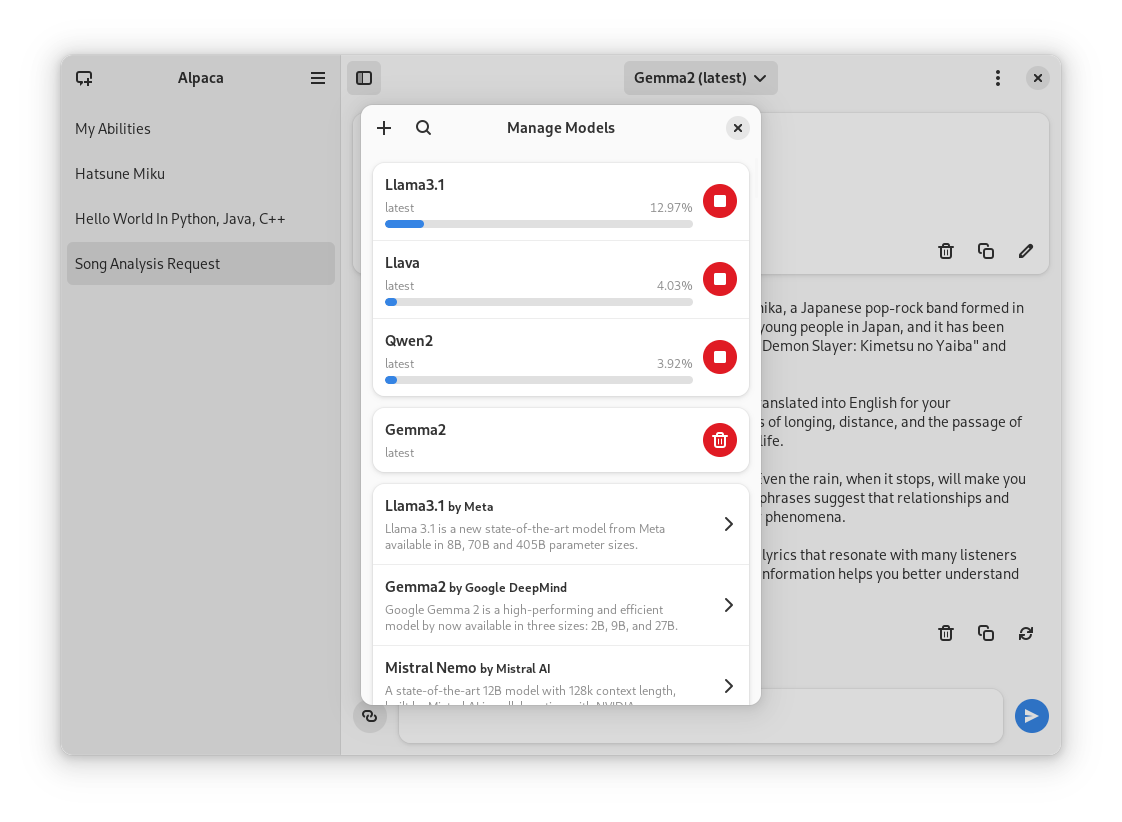

Chatting with a model | Image recognition | Code highlighting

|

||||

:--------------------:|:-----------------:|:----------------------:

|

||||

|  |

|

||||

|

||||

## Preview

|

||||

1. Clone repo using Gnome Builder

|

||||

2. Press the `run` button

|

||||

Normal conversation | Image recognition | Code highlighting | YouTube transcription | Model management

|

||||

:------------------:|:-----------------:|:-----------------:|:---------------------:|:----------------:

|

||||

|  |  |  |

|

||||

|

||||

## Instalation

|

||||

1. Go to the `releases` page

|

||||

2. Download the latest flatpak package

|

||||

3. Open it

|

||||

## Installation

|

||||

|

||||

## Ollama session tips

|

||||

### Flathub

|

||||

|

||||

### Change the port of the integrated Ollama instance

|

||||

Go to `~/.var/app/com.jeffser.Alpaca/config/server.json` and change the `"local_port"` value, by default it is `11435`.

|

||||

You can find the latest stable version of the app on [Flathub](https://flathub.org/apps/com.jeffser.Alpaca)

|

||||

|

||||

### Backup all the chats

|

||||

The chat data is located in `~/.var/app/com.jeffser.Alpaca/data/chats` you can copy that directory wherever you want to.

|

||||

### Flatpak Package

|

||||

|

||||

### Force showing the welcome dialog

|

||||

To do that you just need to delete the file `~/.var/app/com.jeffser.Alpaca/config/server.json`, this won't affect your saved chats or models.

|

||||

Everytime a new version is published they become available on the [releases page](https://github.com/Jeffser/Alpaca/releases) of the repository

|

||||

|

||||

### Add/Change environment variables for Ollama

|

||||

You can change anything except `$HOME` and `$OLLAMA_HOST`, to do this go to `~/.var/app/com.jeffser.Alpaca/config/server.json` and change `ollama_overrides` accordingly, some overrides are available to change on the GUI.

|

||||

### Building Git Version

|

||||

|

||||

Note: This is not recommended since the prerelease versions of the app often present errors and general instability.

|

||||

|

||||

1. Clone the project

|

||||

2. Open with Gnome Builder

|

||||

3. Press the run button (or export if you want to build a Flatpak package)

|

||||

|

||||

## Translators

|

||||

|

||||

Language | Contributors

|

||||

:----------------------|:-----------

|

||||

🇷🇺 Russian | [Alex K](https://github.com/alexkdeveloper)

|

||||

🇪🇸 Spanish | [Jeffry Samuel](https://github.com/jeffser)

|

||||

🇫🇷 French | [Louis Chauvet-Villaret](https://github.com/loulou64490) , [Théo FORTIN](https://github.com/topiga)

|

||||

🇧🇷 Brazilian Portuguese | [Daimar Stein](https://github.com/not-a-dev-stein)

|

||||

🇳🇴 Norwegian | [CounterFlow64](https://github.com/CounterFlow64)

|

||||

🇮🇳 Bengali | [Aritra Saha](https://github.com/olumolu)

|

||||

🇨🇳 Simplified Chinese | [Yuehao Sui](https://github.com/8ar10der) , [Aleksana](https://github.com/Aleksanaa)

|

||||

🇮🇳 Hindi | [Aritra Saha](https://github.com/olumolu)

|

||||

🇹🇷 Turkish | [YusaBecerikli](https://github.com/YusaBecerikli)

|

||||

🇺🇦 Ukrainian | [Simon](https://github.com/OriginalSimon)

|

||||

🇩🇪 German | [Marcel Margenberg](https://github.com/MehrzweckMandala)

|

||||

|

||||

Want to add a language? Visit [this discussion](https://github.com/Jeffser/Alpaca/discussions/153) to get started!

|

||||

|

||||

---

|

||||

|

||||

## Thanks

|

||||

- [not-a-dev-stein](https://github.com/not-a-dev-stein) for their help with requesting a new icon, bug reports and the translation to Brazilian Portuguese

|

||||

- [TylerLaBree](https://github.com/TylerLaBree) for their requests and ideas

|

||||

- [Alexkdeveloper](https://github.com/alexkdeveloper) for their help translating the app to Russian

|

||||

- [Imbev](https://github.com/imbev) for their reports and suggestions

|

||||

- [Nokse](https://github.com/Nokse22) for their contributions to the UI

|

||||

- [Louis Chauvet-Villaret](https://github.com/loulou64490) for their suggestions and help translating the app to French

|

||||

- [CounterFlow64](https://github.com/CounterFlow64) for their help translating the app to Norwegian

|

||||

|

||||

## About forks

|

||||

If you want to fork this... I mean, I think it would be better if you start from scratch, my code isn't well documented at all, but if you really want to, please give me some credit, that's all I ask for... And maybe a donation (joke)

|

||||

- [not-a-dev-stein](https://github.com/not-a-dev-stein) for their help with requesting a new icon and bug reports

|

||||

- [TylerLaBree](https://github.com/TylerLaBree) for their requests and ideas

|

||||

- [Imbev](https://github.com/imbev) for their reports and suggestions

|

||||

- [Nokse](https://github.com/Nokse22) for their contributions to the UI and table rendering

|

||||

- [Louis Chauvet-Villaret](https://github.com/loulou64490) for their suggestions

|

||||

- [Aleksana](https://github.com/Aleksanaa) for her help with better handling of directories

|

||||

- Sponsors for giving me enough money to be able to take a ride to my campus every time I need to <3

|

||||

- Everyone that has shared kind words of encouragement!

|

||||

|

||||
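The `server.json` and chat-backup tips that appear in the README hunk above can also be scripted. A rough sketch, assuming `server.json` is a JSON object with a top-level `local_port` key as the README text describes; the helper names `set_local_port` and `backup_chats` are hypothetical and not part of Alpaca:

```python
import json
import shutil
from pathlib import Path

# Paths quoted in the README text (Flatpak per-app directories).
APP_DIR = Path.home() / ".var" / "app" / "com.jeffser.Alpaca"
SERVER_JSON = APP_DIR / "config" / "server.json"
CHATS_DIR = APP_DIR / "data" / "chats"


def set_local_port(config_file: Path, port: int) -> None:
    """Rewrite the "local_port" value, leaving every other key untouched."""
    config = json.loads(config_file.read_text(encoding="utf-8"))
    config["local_port"] = port
    config_file.write_text(json.dumps(config, indent=4), encoding="utf-8")


def backup_chats(chats_dir: Path, destination: Path) -> Path:
    """Copy the whole chats directory; raises if destination already exists."""
    return Path(shutil.copytree(chats_dir, destination))
```

For instance, `set_local_port(SERVER_JSON, 11436)` would move the integrated instance off the default `11435`, and `backup_chats(CHATS_DIR, Path.home() / "alpaca-chats-backup")` would copy the chat data elsewhere. It is probably safest to run either while Alpaca is closed.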

12

SECURITY.md

Normal file

@@ -0,0 +1,12 @@

# Security Policy

## Supported Packaging

Alpaca only supports [Flatpak](https://flatpak.org/) packaging officially, any other packaging methods might not behave as expected.

## Official Versions

The only ways Alpaca is being distributed officially are:

- [Alpaca's GitHub Repository Releases Page](https://github.com/Jeffser/Alpaca/releases)
- [Flathub](https://flathub.org/apps/com.jeffser.Alpaca)

@@ -8,9 +8,20 @@

"--share=network",
"--share=ipc",
"--socket=fallback-x11",
"--device=dri",
"--socket=wayland"
"--device=all",
"--socket=wayland",
"--filesystem=/sys/module/amdgpu:ro",
"--env=LD_LIBRARY_PATH=/app/lib:/usr/lib/x86_64-linux-gnu/GL/default/lib:/usr/lib/x86_64-linux-gnu/openh264/extra:/usr/lib/x86_64-linux-gnu/openh264/extra:/usr/lib/sdk/llvm15/lib:/usr/lib/x86_64-linux-gnu/GL/default/lib:/usr/lib/ollama:/app/plugins/AMD/lib/ollama"
],
"add-extensions": {
"com.jeffser.Alpaca.Plugins": {
"add-ld-path": "/app/plugins/AMD/lib/ollama",
"directory": "plugins",
"no-autodownload": true,
"autodelete": true,
"subdirectories": true
}
},
"cleanup" : [
"/include",
"/lib/pkgconfig",

@@ -117,27 +128,44 @@

"name": "ollama",
"buildsystem": "simple",
"build-commands": [
"install -Dm0755 ollama* ${FLATPAK_DEST}/bin/ollama"
"cp -r --remove-destination * ${FLATPAK_DEST}/",
"mkdir ${FLATPAK_DEST}/plugins"
],
"sources": [
{
"type": "file",
"url": "https://github.com/ollama/ollama/releases/download/v0.3.0/ollama-linux-amd64",
"sha256": "b8817c34882c7ac138565836ac1995a2c61261a79315a13a0aebbfe5435da855",
"type": "archive",
"url": "https://github.com/ollama/ollama/releases/download/v0.3.9/ollama-linux-amd64.tgz",
"sha256": "b0062fbccd46134818d9d59cfa3867ad6849163653cb1171bc852c5f379b0851",
"only-arches": [
"x86_64"
]
},
{
"type": "file",
"url": "https://github.com/ollama/ollama/releases/download/v0.3.0/ollama-linux-arm64",
"sha256": "64be908749212052146f1008dd3867359c776ac1766e8d86291886f53d294d4d",
"type": "archive",
"url": "https://github.com/ollama/ollama/releases/download/v0.3.9/ollama-linux-arm64.tgz",
"sha256": "8979484bcb1448ab9b45107fbcb3b9f43c2af46f961487449b9ebf3518cd70eb",
"only-arches": [
"aarch64"
]
}
]
},
{
"name": "libnuma",
"buildsystem": "autotools",
"build-commands": [
"autoreconf -i",
"make",
"make install"
],
"sources": [
{
"type": "archive",
"url": "https://github.com/numactl/numactl/releases/download/v2.0.18/numactl-2.0.18.tar.gz",
"sha256": "b4fc0956317680579992d7815bc43d0538960dc73aa1dd8ca7e3806e30bc1274"
}
]
},
{
"name" : "alpaca",
"builddir" : true,

@@ -145,7 +173,7 @@

"sources" : [
{
"type" : "git",
"url" : "file:///home/tentri/Documents/Alpaca",
"url": "https://github.com/Jeffser/Alpaca.git",
"branch" : "main"
}
]
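Each `sources` entry in the manifest hunks above pins its download with a `sha256` value, so moving Ollama from v0.3.0 to v0.3.9 also meant replacing those digests. A small sketch of how such a digest could be recomputed for an already-downloaded file before pasting it into the manifest; the function names and any local file path are illustrative assumptions, not part of the repository:

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # iter() with a sentinel stops once read() returns an empty bytes object.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_manifest(path: Path, expected_sha256: str) -> bool:
    """Compare a local file against the "sha256" string from a sources entry."""
    return sha256_of(path) == expected_sha256.strip().lower()
```

Calling `matches_manifest(Path("ollama-linux-amd64.tgz"), "<value from the manifest>")` on a local download gives a quick check that the archive and the pinned digest agree.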

@@ -5,5 +5,6 @@ Icon=com.jeffser.Alpaca

Terminal=false
Type=Application
Categories=Utility;Development;Chat;
Keywords=ai;ollama;llm
StartupNotify=true
X-Purism-FormFactor=Workstation;Mobile;
X-Purism-FormFactor=Workstation;Mobile;

@@ -70,9 +70,7 @@

|

||||

<caption>Multiple models being downloaded</caption>

|

||||

</screenshot>

|

||||

</screenshots>

|

||||

<content_rating type="oars-1.1">

|

||||

<content_attribute id="money-purchasing">mild</content_attribute>

|

||||

</content_rating>

|

||||

<content_rating type="oars-1.1" />

|

||||

<url type="bugtracker">https://github.com/Jeffser/Alpaca/issues</url>

|

||||

<url type="homepage">https://jeffser.com/alpaca/</url>

|

||||

<url type="donation">https://github.com/sponsors/Jeffser</url>

|

||||

@@ -80,6 +78,154 @@

|

||||

<url type="contribute">https://github.com/Jeffser/Alpaca/discussions/154</url>

|

||||

<url type="vcs-browser">https://github.com/Jeffser/Alpaca</url>

|

||||

<releases>

|

||||

<release version="2.0.1" date="2024-09-11">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.0.1</url>

|

||||

<description>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Fixed 'clear chat' option</li>

|

||||

<li>Fixed welcome dialog causing the local instance to not launch</li>

|

||||

<li>Fixed support for AMD GPUs</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="2.0.0" date="2024-09-01">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/2.0.0</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Model, message and chat systems have been rewritten</li>

|

||||

<li>New models are available</li>

|

||||

<li>Ollama updated to v0.3.9</li>

|

||||

<li>Added support for multiple chat generations simultaneously</li>

|

||||

<li>Added experimental AMD GPU support</li>

|

||||

<li>Added message loading spinner and new message indicator to chat tab</li>

|

||||

<li>Added animations</li>

|

||||

<li>Changed model manager / model selector appearance</li>

|

||||

<li>Changed message appearance</li>

|

||||

<li>Added markdown and code blocks to user messages</li>

|

||||

<li>Added loading dialog at launch so the app opens faster</li>

|

||||

<li>Added warning when device is on 'battery saver' mode</li>

|

||||

<li>Added inactivity timer to integrated instance</li>

|

||||

</ul>

|

||||

<ul>

|

||||

<li>The chat is now scrolled to the bottom when it's changed</li>

|

||||

<li>Better handling of focus on messages</li>

|

||||

<li>Better general performance on the app</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="1.1.1" date="2024-08-12">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.1.1</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>New duplicate chat option</li>

|

||||

<li>Changed model selector appearance</li>

|

||||

<li>Message entry is focused on launch and chat change</li>

|

||||

<li>Message is focused when it's being edited</li>

|

||||

<li>Added loading spinner when regenerating a message</li>

|

||||

<li>Added Ollama debugging to 'About Alpaca' dialog</li>

|

||||

<li>Changed YouTube transcription dialog appearance and behavior</li>

|

||||

</ul>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>CTRL+W and CTRL+Q stops local instance before closing the app</li>

|

||||

<li>Changed appearance of 'Open Model Manager' button on welcome screen</li>

|

||||

<li>Fixed message generation not working consistently</li>

|

||||

<li>Fixed message edition not working consistently</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="1.1.0" date="2024-08-10">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.1.0</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Model manager opens faster</li>

|

||||

<li>Delete chat option in secondary menu</li>

|

||||

<li>New model selector popup</li>

|

||||

<li>Standard shortcuts</li>

|

||||

<li>Model manager is navigable with keyboard</li>

|

||||

<li>Changed sidebar collapsing behavior</li>

|

||||

<li>Focus indicators on messages</li>

|

||||

<li>Welcome screen</li>

|

||||

<li>Give message entry focus at launch</li>

|

||||

<li>Generally better code</li>

|

||||

</ul>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Better width for dialogs</li>

|

||||

<li>Better compatibility with screen readers</li>

|

||||

<li>Fixed message regenerator</li>

|

||||

<li>Removed 'Featured models' from welcome dialog</li>

|

||||

<li>Added default buttons to dialogs</li>

|

||||

<li>Fixed import / export of chats</li>

|

||||

<li>Changed Python2 title to Python on code blocks</li>

|

||||

<li>Prevent regeneration of title when the user changed it to a custom title</li>

|

||||

<li>Show date on stopped messages</li>

|

||||

<li>Fix clear chat error</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

<release version="1.0.6" date="2024-08-04">

|

||||

<url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.0.6</url>

|

||||

<description>

|

||||

<p>New</p>

|

||||

<ul>

|

||||

<li>Changed shortcuts to standards</li>

|

||||

<li>Moved 'Manage Models' button to primary menu</li>

|

||||

<li>Stable support for GGUF model files</li>

|

||||

<li>General optimizations</li>

|

||||

</ul>

|

||||

<p>Fixes</p>

|

||||

<ul>

|

||||

<li>Better handling of enter key (important for Japanese input)</li>

|

||||

<li>Removed sponsor dialog</li>

|

||||

<li>Added sponsor link in about dialog</li>

|

||||

<li>Changed window and elements dimensions</li>

|

||||

<li>Selected model changes when entering model manager</li>

|

||||

<li>Better image tooltips</li>

|

||||

<li>GGUF Support</li>

|

||||

</ul>

|

||||

</description>

|

||||

</release>

|

||||

    <release version="1.0.5" date="2024-08-02">
      <url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.0.5</url>
      <description>
        <p>New</p>
        <ul>
          <li>Regenerate any response, even if they are incomplete</li>
          <li>Support for pulling models by name:tag</li>
          <li>Stable support for GGUF model files</li>
          <li>Restored sidebar toggle button</li>
        </ul>
        <p>Fixes</p>
        <ul>
          <li>Reverted back to standard styles</li>
          <li>Fixed generated titles having "'S" for some reason</li>
          <li>Changed min width for model dropdown</li>
          <li>Changed message entry shadow</li>
          <li>The last model used is now restored when the user changes chat</li>
          <li>Better check for message finishing</li>
        </ul>
      </description>
    </release>

    <release version="1.0.4" date="2024-08-01">
      <url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.0.4</url>
      <description>
        <p>New</p>
        <ul>
          <li>Added table rendering (Thanks Nokse)</li>
        </ul>
        <p>Fixes</p>
        <ul>
          <li>Made support dialog more common</li>
          <li>Dialog title on tag chooser when downloading models didn't display properly</li>
          <li>Prevent chat generation from generating a title with multiple lines</li>
        </ul>
      </description>
    </release>

    <release version="1.0.3" date="2024-08-01">
      <url type="details">https://github.com/Jeffser/Alpaca/releases/tag/1.0.3</url>
      <description>

@@ -1,5 +1,5 @@
 project('Alpaca', 'c',
-  version: '1.0.3',
+  version: '2.0.1',
   meson_version: '>= 0.62.0',
   default_options: [ 'warning_level=2', 'werror=false', ],
 )

@@ -4,4 +4,8 @@ pt_BR
 fr
 nb_NO
 bn
-zh_CN
+zh_Hans
+hi
+tr
+uk
+de

@@ -7,3 +7,7 @@ src/available_models_descriptions.py
 src/connection_handler.py
 src/dialogs.py
 src/window.ui
+src/custom_widgets/chat_widget.py
+src/custom_widgets/message_widget.py
+src/custom_widgets/model_widget.py
+src/custom_widgets/table_widget.py

2057

po/alpaca.pot

File diff suppressed because it is too large

File diff suppressed because it is too large

2336

po/nb_NO.po

File diff suppressed because it is too large

2122

po/pt_BR.po

File diff suppressed because it is too large

2704

po/zh_Hans.po

Normal file

File diff suppressed because it is too large

@@ -28,6 +28,9 @@
     <file alias="icons/scalable/status/edit-find-symbolic.svg">icons/edit-find-symbolic.svg</file>
     <file alias="icons/scalable/status/edit-symbolic.svg">icons/edit-symbolic.svg</file>
     <file alias="icons/scalable/status/image-missing-symbolic.svg">icons/image-missing-symbolic.svg</file>
+    <file alias="icons/scalable/status/update-symbolic.svg">icons/update-symbolic.svg</file>
+    <file alias="icons/scalable/status/down-symbolic.svg">icons/down-symbolic.svg</file>
+    <file alias="icons/scalable/status/chat-bubble-text-symbolic.svg">icons/chat-bubble-text-symbolic.svg</file>
     <file preprocess="xml-stripblanks">window.ui</file>
     <file preprocess="xml-stripblanks">gtk/help-overlay.ui</file>
   </gresource>

File diff suppressed because it is too large


@@ -1,6 +1,6 @@
 descriptions = {
     'llama3.1': _("Llama 3.1 is a new state-of-the-art model from Meta available in 8B, 70B and 405B parameter sizes."),
-    'gemma2': _("Google Gemma 2 is now available in 2 sizes, 9B and 27B."),
+    'gemma2': _("Google Gemma 2 is a high-performing and efficient model by now available in three sizes: 2B, 9B, and 27B."),
     'mistral-nemo': _("A state-of-the-art 12B model with 128k context length, built by Mistral AI in collaboration with NVIDIA."),
     'mistral-large': _("Mistral Large 2 is Mistral's new flagship model that is significantly more capable in code generation, mathematics, and reasoning with 128k context window and support for dozens of languages."),
     'qwen2': _("Qwen2 is a new series of large language models from Alibaba group"),

@@ -17,89 +17,96 @@ descriptions = {

|

||||

'qwen': _("Qwen 1.5 is a series of large language models by Alibaba Cloud spanning from 0.5B to 110B parameters"),

|

||||

'llama2': _("Llama 2 is a collection of foundation language models ranging from 7B to 70B parameters."),

|

||||

'codellama': _("A large language model that can use text prompts to generate and discuss code."),

|

||||

'dolphin-mixtral': _("Uncensored, 8x7b and 8x22b fine-tuned models based on the Mixtral mixture of experts models that excels at coding tasks. Created by Eric Hartford."),

|

||||

'nomic-embed-text': _("A high-performing open embedding model with a large token context window."),

|

||||

'llama2-uncensored': _("Uncensored Llama 2 model by George Sung and Jarrad Hope."),

|

||||

'dolphin-mixtral': _("Uncensored, 8x7b and 8x22b fine-tuned models based on the Mixtral mixture of experts models that excels at coding tasks. Created by Eric Hartford."),

|

||||

'phi': _("Phi-2: a 2.7B language model by Microsoft Research that demonstrates outstanding reasoning and language understanding capabilities."),

|

||||

'llama2-uncensored': _("Uncensored Llama 2 model by George Sung and Jarrad Hope."),

|

||||

'deepseek-coder': _("DeepSeek Coder is a capable coding model trained on two trillion code and natural language tokens."),

|

||||

'mxbai-embed-large': _("State-of-the-art large embedding model from mixedbread.ai"),

|

||||

'zephyr': _("Zephyr is a series of fine-tuned versions of the Mistral and Mixtral models that are trained to act as helpful assistants."),

|

||||

'dolphin-mistral': _("The uncensored Dolphin model based on Mistral that excels at coding tasks. Updated to version 2.8."),

|

||||

'starcoder2': _("StarCoder2 is the next generation of transparently trained open code LLMs that comes in three sizes: 3B, 7B and 15B parameters."),

|

||||

'orca-mini': _("A general-purpose model ranging from 3 billion parameters to 70 billion, suitable for entry-level hardware."),

|

||||

'dolphin-llama3': _("Dolphin 2.9 is a new model with 8B and 70B sizes by Eric Hartford based on Llama 3 that has a variety of instruction, conversational, and coding skills."),

|

||||

'mxbai-embed-large': _("State-of-the-art large embedding model from mixedbread.ai"),

|

||||

'starcoder2': _("StarCoder2 is the next generation of transparently trained open code LLMs that comes in three sizes: 3B, 7B and 15B parameters."),

|

||||

'mistral-openorca': _("Mistral OpenOrca is a 7 billion parameter model, fine-tuned on top of the Mistral 7B model using the OpenOrca dataset."),

|

||||

'yi': _("Yi 1.5 is a high-performing, bilingual language model."),

|

||||

'zephyr': _("Zephyr is a series of fine-tuned versions of the Mistral and Mixtral models that are trained to act as helpful assistants."),

|

||||

'llama2-chinese': _("Llama 2 based model fine tuned to improve Chinese dialogue ability."),

|

||||

'mistral-openorca': _("Mistral OpenOrca is a 7 billion parameter model, fine-tuned on top of the Mistral 7B model using the OpenOrca dataset."),

|

||||

'llava-llama3': _("A LLaVA model fine-tuned from Llama 3 Instruct with better scores in several benchmarks."),

|

||||

'vicuna': _("General use chat model based on Llama and Llama 2 with 2K to 16K context sizes."),

|

||||

'nous-hermes2': _("The powerful family of models by Nous Research that excels at scientific discussion and coding tasks."),

|

||||

'tinyllama': _("The TinyLlama project is an open endeavor to train a compact 1.1B Llama model on 3 trillion tokens."),

|

||||

'wizard-vicuna-uncensored': _("Wizard Vicuna Uncensored is a 7B, 13B, and 30B parameter model based on Llama 2 uncensored by Eric Hartford."),

|

||||

'codestral': _("Codestral is Mistral AI’s first-ever code model designed for code generation tasks."),

|

||||

'starcoder': _("StarCoder is a code generation model trained on 80+ programming languages."),

|

||||

'wizardlm2': _("State of the art large language model from Microsoft AI with improved performance on complex chat, multilingual, reasoning and agent use cases."),

|

||||

'llama2-chinese': _("Llama 2 based model fine tuned to improve Chinese dialogue ability."),

|

||||

'vicuna': _("General use chat model based on Llama and Llama 2 with 2K to 16K context sizes."),

|

||||

'tinyllama': _("The TinyLlama project is an open endeavor to train a compact 1.1B Llama model on 3 trillion tokens."),

|

||||

'codestral': _("Codestral is Mistral AI’s first-ever code model designed for code generation tasks."),

|

||||

'wizard-vicuna-uncensored': _("Wizard Vicuna Uncensored is a 7B, 13B, and 30B parameter model based on Llama 2 uncensored by Eric Hartford."),

|

||||

'nous-hermes2': _("The powerful family of models by Nous Research that excels at scientific discussion and coding tasks."),

|

||||

'openchat': _("A family of open-source models trained on a wide variety of data, surpassing ChatGPT on various benchmarks. Updated to version 3.5-0106."),

|

||||

'aya': _("Aya 23, released by Cohere, is a new family of state-of-the-art, multilingual models that support 23 languages."),

|

||||

'wizardlm2': _("State of the art large language model from Microsoft AI with improved performance on complex chat, multilingual, reasoning and agent use cases."),

|

||||

'tinydolphin': _("An experimental 1.1B parameter model trained on the new Dolphin 2.8 dataset by Eric Hartford and based on TinyLlama."),

|

||||

'openhermes': _("OpenHermes 2.5 is a 7B model fine-tuned by Teknium on Mistral with fully open datasets."),

|

||||

'granite-code': _("A family of open foundation models by IBM for Code Intelligence"),

|

||||

'wizardcoder': _("State-of-the-art code generation model"),

|

||||

'stable-code': _("Stable Code 3B is a coding model with instruct and code completion variants on par with models such as Code Llama 7B that are 2.5x larger."),

|

||||

'openhermes': _("OpenHermes 2.5 is a 7B model fine-tuned by Teknium on Mistral with fully open datasets."),

|

||||

'all-minilm': _("Embedding models on very large sentence level datasets."),

|

||||

'codeqwen': _("CodeQwen1.5 is a large language model pretrained on a large amount of code data."),

|

||||

'stablelm2': _("Stable LM 2 is a state-of-the-art 1.6B and 12B parameter language model trained on multilingual data in English, Spanish, German, Italian, French, Portuguese, and Dutch."),

|

||||

'wizard-math': _("Model focused on math and logic problems"),

|

||||

'neural-chat': _("A fine-tuned model based on Mistral with good coverage of domain and language."),

|

||||

'stablelm2': _("Stable LM 2 is a state-of-the-art 1.6B and 12B parameter language model trained on multilingual data in English, Spanish, German, Italian, French, Portuguese, and Dutch."),

|

||||

'granite-code': _("A family of open foundation models by IBM for Code Intelligence"),

|

||||

'all-minilm': _("Embedding models on very large sentence level datasets."),

|

||||

'phind-codellama': _("Code generation model based on Code Llama."),

|

||||

'dolphincoder': _("A 7B and 15B uncensored variant of the Dolphin model family that excels at coding, based on StarCoder2."),

|

||||

'nous-hermes': _("General use models based on Llama and Llama 2 from Nous Research."),

|

||||

'sqlcoder': _("SQLCoder is a code completion model fined-tuned on StarCoder for SQL generation tasks"),

|

||||

'llama3-gradient': _("This model extends LLama-3 8B's context length from 8k to over 1m tokens."),

|

||||

'starling-lm': _("Starling is a large language model trained by reinforcement learning from AI feedback focused on improving chatbot helpfulness."),

|

||||

'yarn-llama2': _("An extension of Llama 2 that supports a context of up to 128k tokens."),

|

||||

'phind-codellama': _("Code generation model based on Code Llama."),

|

||||

'nous-hermes': _("General use models based on Llama and Llama 2 from Nous Research."),

|

||||

'dolphincoder': _("A 7B and 15B uncensored variant of the Dolphin model family that excels at coding, based on StarCoder2."),

|

||||

'sqlcoder': _("SQLCoder is a code completion model fined-tuned on StarCoder for SQL generation tasks"),

|

||||

'xwinlm': _("Conversational model based on Llama 2 that performs competitively on various benchmarks."),

|

||||

'deepseek-llm': _("An advanced language model crafted with 2 trillion bilingual tokens."),

|

||||

'yarn-llama2': _("An extension of Llama 2 that supports a context of up to 128k tokens."),

|

||||

'llama3-chatqa': _("A model from NVIDIA based on Llama 3 that excels at conversational question answering (QA) and retrieval-augmented generation (RAG)."),

|

||||

'orca2': _("Orca 2 is built by Microsoft research, and are a fine-tuned version of Meta's Llama 2 models. The model is designed to excel particularly in reasoning."),

|

||||

'wizardlm': _("General use model based on Llama 2."),

|

||||

'starling-lm': _("Starling is a large language model trained by reinforcement learning from AI feedback focused on improving chatbot helpfulness."),

|

||||

'codegeex4': _("A versatile model for AI software development scenarios, including code completion."),

|

||||

'snowflake-arctic-embed': _("A suite of text embedding models by Snowflake, optimized for performance."),

|

||||

'orca2': _("Orca 2 is built by Microsoft research, and are a fine-tuned version of Meta's Llama 2 models. The model is designed to excel particularly in reasoning."),

|

||||

'solar': _("A compact, yet powerful 10.7B large language model designed for single-turn conversation."),

|

||||

'samantha-mistral': _("A companion assistant trained in philosophy, psychology, and personal relationships. Based on Mistral."),

|

||||

'dolphin-phi': _("2.7B uncensored Dolphin model by Eric Hartford, based on the Phi language model by Microsoft Research."),

|

||||

'stable-beluga': _("Llama 2 based model fine tuned on an Orca-style dataset. Originally called Free Willy."),

|

||||

'moondream': _("moondream2 is a small vision language model designed to run efficiently on edge devices."),

|

||||

'bakllava': _("BakLLaVA is a multimodal model consisting of the Mistral 7B base model augmented with the LLaVA architecture."),

|

||||

'wizardlm-uncensored': _("Uncensored version of Wizard LM model"),

|

||||

'snowflake-arctic-embed': _("A suite of text embedding models by Snowflake, optimized for performance."),

|

||||

'smollm': _("🪐 A family of small models with 135M, 360M, and 1.7B parameters, trained on a new high-quality dataset."),

|

||||

'stable-beluga': _("🪐 A family of small models with 135M, 360M, and 1.7B parameters, trained on a new high-quality dataset."),

|

||||

'qwen2-math': _("Qwen2 Math is a series of specialized math language models built upon the Qwen2 LLMs, which significantly outperforms the mathematical capabilities of open-source models and even closed-source models (e.g., GPT4o)."),

|

||||

'dolphin-phi': _("2.7B uncensored Dolphin model by Eric Hartford, based on the Phi language model by Microsoft Research."),

|

||||

'deepseek-v2': _("A strong, economical, and efficient Mixture-of-Experts language model."),

|

||||

'medllama2': _("Fine-tuned Llama 2 model to answer medical questions based on an open source medical dataset."),

|

||||

'yarn-mistral': _("An extension of Mistral to support context windows of 64K or 128K."),

|

||||

'llama-pro': _("An expansion of Llama 2 that specializes in integrating both general language understanding and domain-specific knowledge, particularly in programming and mathematics."),

|

||||

'nous-hermes2-mixtral': _("The Nous Hermes 2 model from Nous Research, now trained over Mixtral."),

|

||||

'meditron': _("Open-source medical large language model adapted from Llama 2 to the medical domain."),

|

||||

'codeup': _("Great code generation model based on Llama2."),

|

||||

'nexusraven': _("Nexus Raven is a 13B instruction tuned model for function calling tasks."),

|

||||

'everythinglm': _("Uncensored Llama2 based model with support for a 16K context window."),

|

||||

'llava-phi3': _("A new small LLaVA model fine-tuned from Phi 3 Mini."),

|

||||

'codegeex4': _("A versatile model for AI software development scenarios, including code completion."),

|

||||

'bakllava': _("BakLLaVA is a multimodal model consisting of the Mistral 7B base model augmented with the LLaVA architecture."),

|

||||

'glm4': _("A strong multi-lingual general language model with competitive performance to Llama 3."),

|

||||

'wizardlm-uncensored': _("Uncensored version of Wizard LM model"),

|

||||

'yarn-mistral': _("An extension of Mistral to support context windows of 64K or 128K."),

|

||||

'phi3.5': _("A lightweight AI model with 3.8 billion parameters with performance overtaking similarly and larger sized models."),

|

||||

'medllama2': _("Fine-tuned Llama 2 model to answer medical questions based on an open source medical dataset."),

|

||||

'llama-pro': _("An expansion of Llama 2 that specializes in integrating both general language understanding and domain-specific knowledge, particularly in programming and mathematics."),

|

||||

'llava-phi3': _("A new small LLaVA model fine-tuned from Phi 3 Mini."),

|

||||

'meditron': _("Open-source medical large language model adapted from Llama 2 to the medical domain."),

|

||||

'nous-hermes2-mixtral': _("The Nous Hermes 2 model from Nous Research, now trained over Mixtral."),

|

||||

'nexusraven': _("Nexus Raven is a 13B instruction tuned model for function calling tasks."),

|

||||

'codeup': _("Great code generation model based on Llama2."),

|

||||

'everythinglm': _("Uncensored Llama2 based model with support for a 16K context window."),

|

||||

'hermes3': _("Hermes 3 is the latest version of the flagship Hermes series of LLMs by Nous Research"),

|

||||

'internlm2': _("InternLM2.5 is a 7B parameter model tailored for practical scenarios with outstanding reasoning capability."),

|

||||

'magicoder': _("🎩 Magicoder is a family of 7B parameter models trained on 75K synthetic instruction data using OSS-Instruct, a novel approach to enlightening LLMs with open-source code snippets."),

|

||||

'stablelm-zephyr': _("A lightweight chat model allowing accurate, and responsive output without requiring high-end hardware."),

|

||||

'codebooga': _("A high-performing code instruct model created by merging two existing code models."),

|

||||

'mistrallite': _("MistralLite is a fine-tuned model based on Mistral with enhanced capabilities of processing long contexts."),

|

||||

'llama3-groq-tool-use': _("A series of models from Groq that represent a significant advancement in open-source AI capabilities for tool use/function calling."),

|

||||

'falcon2': _("Falcon2 is an 11B parameters causal decoder-only model built by TII and trained over 5T tokens."),

|

||||

'wizard-vicuna': _("Wizard Vicuna is a 13B parameter model based on Llama 2 trained by MelodysDreamj."),

|

||||

'duckdb-nsql': _("7B parameter text-to-SQL model made by MotherDuck and Numbers Station."),

|

||||

'megadolphin': _("MegaDolphin-2.2-120b is a transformation of Dolphin-2.2-70b created by interleaving the model with itself."),

|

||||

'goliath': _("A language model created by combining two fine-tuned Llama 2 70B models into one."),

|

||||

'notux': _("A top-performing mixture of experts model, fine-tuned with high-quality data."),

|

||||

'goliath': _("A language model created by combining two fine-tuned Llama 2 70B models into one."),

|

||||

'open-orca-platypus2': _("Merge of the Open Orca OpenChat model and the Garage-bAInd Platypus 2 model. Designed for chat and code generation."),

|

||||

'falcon2': _("Falcon2 is an 11B parameters causal decoder-only model built by TII and trained over 5T tokens."),

|

||||

'notus': _("A 7B chat model fine-tuned with high-quality data and based on Zephyr."),

|

||||

'dbrx': _("DBRX is an open, general-purpose LLM created by Databricks."),

|

||||

'internlm2': _("InternLM2.5 is a 7B parameter model tailored for practical scenarios with outstanding reasoning capability."),

|

||||

'alfred': _("A robust conversational model designed to be used for both chat and instruct use cases."),

|

||||

'llama3-groq-tool-use': _("A series of models from Groq that represent a significant advancement in open-source AI capabilities for tool use/function calling."),

|

||||

'mathstral': _("MathΣtral: a 7B model designed for math reasoning and scientific discovery by Mistral AI."),

|

||||

'bge-m3': _("BGE-M3 is a new model from BAAI distinguished for its versatility in Multi-Functionality, Multi-Linguality, and Multi-Granularity."),

|

||||

'alfred': _("A robust conversational model designed to be used for both chat and instruct use cases."),

|

||||

'firefunction-v2': _("An open weights function calling model based on Llama 3, competitive with GPT-4o function calling capabilities."),

|

||||

'nuextract': _("A 3.8B model fine-tuned on a private high-quality synthetic dataset for information extraction, based on Phi-3."),

|

||||

'bge-large': _("Embedding model from BAAI mapping texts to vectors."),

|

||||

'paraphrase-multilingual': _("Sentence-transformers model that can be used for tasks like clustering or semantic search."),

|

||||

}

|

||||

@@ -1,30 +1,140 @@

|

||||

# connection_handler.py

|

||||

import json, requests

|

||||

#OK=200 response.status_code

|

||||

url = None

|

||||

bearer_token = None

|

||||

"""

|

||||

Handles requests to remote and integrated instances of Ollama

|

||||

"""

|

||||

import json, os, requests, subprocess, threading, shutil

|

||||

from .internal import data_dir, cache_dir

|

||||

from logging import getLogger

|

||||

from time import sleep

|

||||

|

||||

def get_headers(include_json:bool) -> dict:

|

||||

headers = {}

|

||||

if include_json:

|

||||

headers["Content-Type"] = "application/json"

|

||||

if bearer_token:

|

||||

headers["Authorization"] = "Bearer {}".format(bearer_token)

|

||||

return headers if len(headers.keys()) > 0 else None

|

||||

logger = getLogger(__name__)

|

||||

|

||||

def simple_get(connection_url:str) -> dict:

|

||||

return requests.get(connection_url, headers=get_headers(False))

|

||||

window = None

|

||||

|

||||

def simple_post(connection_url:str, data) -> dict:

|

||||

return requests.post(connection_url, headers=get_headers(True), data=data, stream=False)

|

||||

def log_output(pipe):

|

||||

with open(os.path.join(data_dir, 'tmp.log'), 'a') as f:

|

||||

with pipe:

|

||||

try:

|

||||

for line in iter(pipe.readline, ''):

|

||||

print(line, end='')

|

||||

f.write(line)

|

||||

f.flush()

|

||||

except:

|

||||

pass

|

||||

|

||||

def simple_delete(connection_url:str, data) -> dict:

|

||||

return requests.delete(connection_url, headers=get_headers(False), json=data)

|

||||

class instance():

|

||||

|

||||

def stream_post(connection_url:str, data, callback:callable) -> dict:

|

||||

response = requests.post(connection_url, headers=get_headers(True), data=data, stream=True)

|

||||

if response.status_code == 200:

|

||||

for line in response.iter_lines():

|

||||

if line:

|

||||

callback(json.loads(line.decode("utf-8")))

|

||||

return response

|

||||

def __init__(self, local_port:int, remote_url:str, remote:bool, tweaks:dict, overrides:dict, bearer_token:str, idle_timer_delay:int):

|

||||

self.local_port=local_port

|

||||

self.remote_url=remote_url

|

||||

self.remote=remote

|

||||

self.tweaks=tweaks

|

||||

self.overrides=overrides

|

||||

self.bearer_token=bearer_token

|

||||

self.idle_timer_delay=idle_timer_delay

|

||||

self.idle_timer_stop_event=threading.Event()

|

||||

self.idle_timer=None

|

||||

self.instance=None

|

||||

self.busy=0

|

||||

if not self.remote:

|

||||

self.start()

|

||||

|

||||

def get_headers(self, include_json:bool) -> dict:

|

||||

headers = {}

|

||||

if include_json:

|

||||

headers["Content-Type"] = "application/json"

|

||||

if self.bearer_token and self.remote:

|

||||

headers["Authorization"] = "Bearer " + self.bearer_token

|

||||

return headers if len(headers.keys()) > 0 else None

|

||||

|

||||

def request(self, connection_type:str, connection_url:str, data:dict=None, callback:callable=None) -> requests.models.Response:

|

||||

self.busy += 1

|

||||

if self.idle_timer and not self.remote:

|

||||

self.idle_timer_stop_event.set()

|

||||

self.idle_timer=None

|

||||

if not self.instance and not self.remote:

|

||||

self.start()

|

||||

connection_url = '{}/{}'.format(self.remote_url if self.remote else 'http://127.0.0.1:{}'.format(self.local_port), connection_url)

|

||||

logger.info('{} : {}'.format(connection_type, connection_url))

|

||||

response = None

|

||||

match connection_type:

|

||||

case "GET":

|

||||

response = requests.get(connection_url, headers=self.get_headers(False))

|

||||

case "POST":

|

||||

if callback:

|

||||

response = requests.post(connection_url, headers=self.get_headers(True), data=data, stream=True)

|

||||

if response.status_code == 200:

|

||||

for line in response.iter_lines():

|

||||

if line:

|

||||

callback(json.loads(line.decode("utf-8")))

|

||||

else:

|

||||

response = requests.post(connection_url, headers=self.get_headers(True), data=data, stream=False)

|

||||

case "DELETE":

|

||||

response = requests.delete(connection_url, headers=self.get_headers(False), data=data)

|

||||

self.busy -= 1

|

||||

if not self.idle_timer and not self.remote:

|

||||

self.start_timer()

|

||||

return response
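Reviewer note: the streaming POST branch of `request` above decodes the response one line at a time and hands each parsed JSON object to `callback`. The sketch below isolates that step so it can be exercised without a server. The helper name `dispatch_json_lines` and the sample payloads are hypothetical and not part of this change; any iterable of byte strings stands in for `response.iter_lines()`.

```python
import json


def dispatch_json_lines(lines, callback):
    """Decode each non-empty bytes line as UTF-8 JSON and pass it to callback.

    `lines` is any iterable of bytes, standing in for response.iter_lines().
    Returns the number of objects dispatched.
    """
    dispatched = 0
    for line in lines:
        if line:  # empty lines are skipped, as in the diff
            callback(json.loads(line.decode("utf-8")))
            dispatched += 1
    return dispatched


# Hypothetical sample chunks; the field names are illustrative only.
sample_lines = [
    b'{"response": "Hel"}',
    b"",
    b'{"response": "lo", "done": true}',
]
received = []
count = dispatch_json_lines(sample_lines, received.append)
print(count, "".join(chunk["response"] for chunk in received))
```

Keeping the parsing separate from the HTTP call is only one possible arrangement; the diff keeps both inline inside the `match` statement.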

|

||||

|

||||

def run_timer(self):

|

||||

if not self.idle_timer_stop_event.wait(self.idle_timer_delay*60):

|

||||

window.show_toast(_("Ollama instance was shut down due to inactivity"), window.main_overlay)

|

||||

self.stop()

|

||||

|

||||

def start_timer(self):

|

||||

if self.busy == 0:

|

||||

if self.idle_timer:

|

||||

self.idle_timer_stop_event.set()

|

||||

self.idle_timer=None

|

||||

if self.idle_timer_delay > 0 and self.busy == 0:

|

||||

self.idle_timer_stop_event.clear()

|

||||

self.idle_timer = threading.Thread(target=self.run_timer)

|

||||

self.idle_timer.start()
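Reviewer note: `run_timer` and `start_timer` above rely on `threading.Event.wait(timeout)` returning `False` when the timeout elapses and `True` when the event is set first. The following is a minimal standalone sketch of that cancellable idle-timer pattern. The class name `IdleTimer`, its methods, and the delay in seconds are assumptions made for illustration; unlike the diff, which reuses one event and calls `clear()`, the sketch creates a fresh event per timer so a cancelled thread cannot be revived.

```python
import threading


class IdleTimer:
    """Hypothetical helper: call on_idle once unless cancelled within delay_seconds."""

    def __init__(self, delay_seconds, on_idle):
        self.delay_seconds = delay_seconds
        self.on_idle = on_idle
        self._stop_event = threading.Event()
        self._thread = None

    def _run(self, stop_event):
        # wait() returns False on timeout and True if the event was set first.
        if not stop_event.wait(self.delay_seconds):
            self.on_idle()

    def start(self):
        # Cancel any timer that is still pending, then arm a new one.
        self.cancel()
        self._stop_event = threading.Event()
        self._thread = threading.Thread(
            target=self._run, args=(self._stop_event,), daemon=True
        )
        self._thread.start()

    def cancel(self):
        # Wake the waiting thread and wait for it to exit.
        self._stop_event.set()
        if self._thread is not None:
            self._thread.join()
            self._thread = None


events = []
timer = IdleTimer(0.2, lambda: events.append("idle"))
timer.start()
timer.cancel()  # simulates a request arriving before the delay elapsed
```

In the diff the delay is given in minutes (`idle_timer_delay*60`) and the idle action stops the Ollama instance; the sketch only records a marker so the behaviour can be checked quickly.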

|

||||

|

||||

def start(self):

|

||||

if shutil.which('ollama'):

|

||||

if not os.path.isdir(os.path.join(cache_dir, 'tmp/ollama')):

|

||||

os.mkdir(os.path.join(cache_dir, 'tmp/ollama'))

|

||||

self.instance = None

|

||||

params = self.overrides.copy()

|

||||

params["OLLAMA_DEBUG"] = "1"

|

||||

params["OLLAMA_HOST"] = f"127.0.0.1:{self.local_port}" # You can't change this directly sorry :3

|

||||

params["HOME"] = data_dir

|

||||

params["TMPDIR"] = os.path.join(cache_dir, 'tmp/ollama')

|

||||

instance = subprocess.Popen(["ollama", "serve"], env={**os.environ, **params}, stderr=subprocess.PIPE, stdout=subprocess.PIPE, text=True)

|

||||

threading.Thread(target=log_output, args=(instance.stdout,)).start()

|

||||

threading.Thread(target=log_output, args=(instance.stderr,)).start()

|

||||

logger.info("Starting Alpaca's Ollama instance...")

|

||||